Projects

I work on a number of computer systems and programming language research projects, and in recent years with a bias towards topics relevant to sustainability. Read on to learn more about them and relevant publications, ideas and notes.

Conservation Evidence Copilots

2024–present

The Conservation Evidence team at the University of Cambridge has spent years screening 1.6m+ scientific papers on conservation, as well as manually summarising 8600+ studies relating to conservation actions. However, progress is limited by the specialised skills needed to screen and summarise relevant studies: it took more than 75 person-years to manually curate the current database, and only a few hundred papers can be added each year! We are working on AI-driven techniques to accelerate the addition of robust evidence to the CE database via automated literature scanning,


Remote Sensing of Nature

2023–present

Measuring the world's forest carbon and biodiversity is made possible by remote sensing instruments, ranging from satellites in space (Landsat, Sentinel, GEDI) to ground-based sensors (ecoacoustics, camera traps, moisture sensors) that take regular samples, which are then processed into time-series metrics and actionable insights for conservation and human development. However, designing algorithms to process this data is challenging, as the data is highly multimodal (multispectral, hyperspectral, synthetic aperture radar, or lidar), often sparsely sampled spatially, and not available as a continuous time series. I work on the various algorithms and the software and hardware systems we are developing to improve the datasets we have about the surface of the earth, in close collaboration with the
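To make the sparse, irregular sampling problem concrete, here is a minimal Python sketch under simple assumptions. It derives the standard NDVI vegetation index, (NIR - Red) / (NIR + Red), from two multispectral bands, and then linearly interpolates irregularly dated observations (for example, with scenes missing due to cloud cover) onto a regular grid of days. The function names `ndvi` and `resample_linear`, and the use of plain linear interpolation, are illustrative assumptions rather than the algorithms we actually use; real pipelines also need cloud masking, sensor harmonisation and far more robust gap-filling.

```python
from __future__ import annotations


def ndvi(nir: float, red: float) -> float:
    """Normalised Difference Vegetation Index from near-infrared and red reflectance."""
    total = nir + red
    if total == 0:
        raise ValueError("NIR + red reflectance must be non-zero")
    return (nir - red) / total


def resample_linear(
    observations: list[tuple[int, float]], days: list[int]
) -> list[float | None]:
    """Linearly interpolate irregular (day, value) observations onto `days`.

    Days outside the observed range are returned as None instead of being
    extrapolated, so gaps stay visible to later processing stages.
    """
    exact = dict(observations)        # a later duplicate for the same day wins
    points = sorted(exact.items())    # unique, strictly increasing days
    result: list[float | None] = []
    for day in days:
        value = exact.get(day)
        if value is None:
            # Find the pair of observations bracketing this day, if any.
            for (d0, v0), (d1, v1) in zip(points, points[1:]):
                if d0 < day < d1:
                    value = v0 + (v1 - v0) * (day - d0) / (d1 - d0)
                    break
        result.append(value)
    return result


if __name__ == "__main__":
    # Three hypothetical cloud-free scenes over one pixel; nothing observed near day 32.
    obs = [(0, ndvi(0.30, 0.10)), (16, ndvi(0.45, 0.05)), (48, ndvi(0.40, 0.10))]
    print(resample_linear(obs, [0, 8, 16, 24, 32, 40, 48, 56]))
```

Returning `None` beyond the last observation, rather than extrapolating, is a deliberate choice in this sketch: it keeps missing data explicit instead of silently inventing values.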


Mapping LIFE on Earth

2023–present

Human-driven habitat loss is recognised as the greatest cause of biodiversity loss, but we lack robust, spatially explicit metrics quantifying the impacts of anthropogenic changes in habitat extent on species' extinctions. LIFE is our new metric: it uses a persistence score approach that combines data on species' ecologies with land-cover data, whilst accounting for the cumulative, non-linear impact of past habitat loss on each species' probability of extinction. We apply large-scale computing to map ~30k species of terrestrial vertebrates and provide quantitative estimates of the marginal change in the expected number of extinctions caused by converting remaining natural vegetation to agriculture, and by restoring farmland to natural habitat. We are also investigating the many conservation opportunities opened up by its estimates of how diverse actions that change land cover affect extinctions, from individual dietary choices through to global protected area development.
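The non-linear effect of past habitat loss can be illustrated with a simple power-law persistence function. In this hedged Python sketch we assume that a species' persistence is (current habitat / original habitat)^z with an illustrative exponent z = 0.25, and that the change in expected extinctions from a land-cover change is the drop in persistence summed over species. The `Species` fields, the exponent and the example areas are hypothetical placeholders, not LIFE's published parameters or data.

```python
from __future__ import annotations

from dataclasses import dataclass

Z = 0.25  # hypothetical exponent; z < 1 makes persistence concave in remaining habitat


@dataclass
class Species:
    name: str
    original_area: float  # habitat area before human-driven loss (hypothetical units)
    current_area: float   # habitat area remaining today


def persistence(current: float, original: float, z: float = Z) -> float:
    """Persistence score in [0, 1] as a power law of the remaining habitat fraction."""
    if original <= 0:
        raise ValueError("original area must be positive")
    fraction = max(0.0, min(current / original, 1.0))  # clamp to [0, 1]
    return fraction ** z


def delta_extinctions(
    species: list[Species], area_lost: dict[str, float], z: float = Z
) -> float:
    """Change in expected extinctions if each species loses area_lost[name] of habitat.

    Extinction risk is taken as 1 - persistence, so the change per species is
    persistence before minus persistence after. Negative losses model restoration.
    """
    total = 0.0
    for s in species:
        before = persistence(s.current_area, s.original_area, z)
        after = persistence(s.current_area - area_lost.get(s.name, 0.0), s.original_area, z)
        total += before - after
    return total


if __name__ == "__main__":
    abundant = Species("abundant", original_area=100.0, current_area=90.0)
    scarce = Species("scarce", original_area=100.0, current_area=20.0)
    # The same 10 units converted to agriculture, with very different marginal impact.
    print(delta_extinctions([abundant], {"abundant": 10.0}))
    print(delta_extinctions([scarce], {"scarce": 10.0}))
```

Because z < 1 in this sketch, losing the same area costs more persistence for a species that has already lost most of its habitat than for one that retains most of it, and a negative loss (restoration) yields a negative change, i.e. fewer expected extinctions.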


Planetary Computing

2022–present

Planetary computing is our research into the systems required to handle the ingestion, transformation, analysis and publication of global data products for furthering environmental science and enabling better informed policy-making. We apply computer science to problem domains such as forest carbon and biodiversity preservation (see


Trusted Carbon Credits

2021–present

The Cambridge Centre for Carbon Credits is an initiative I started with


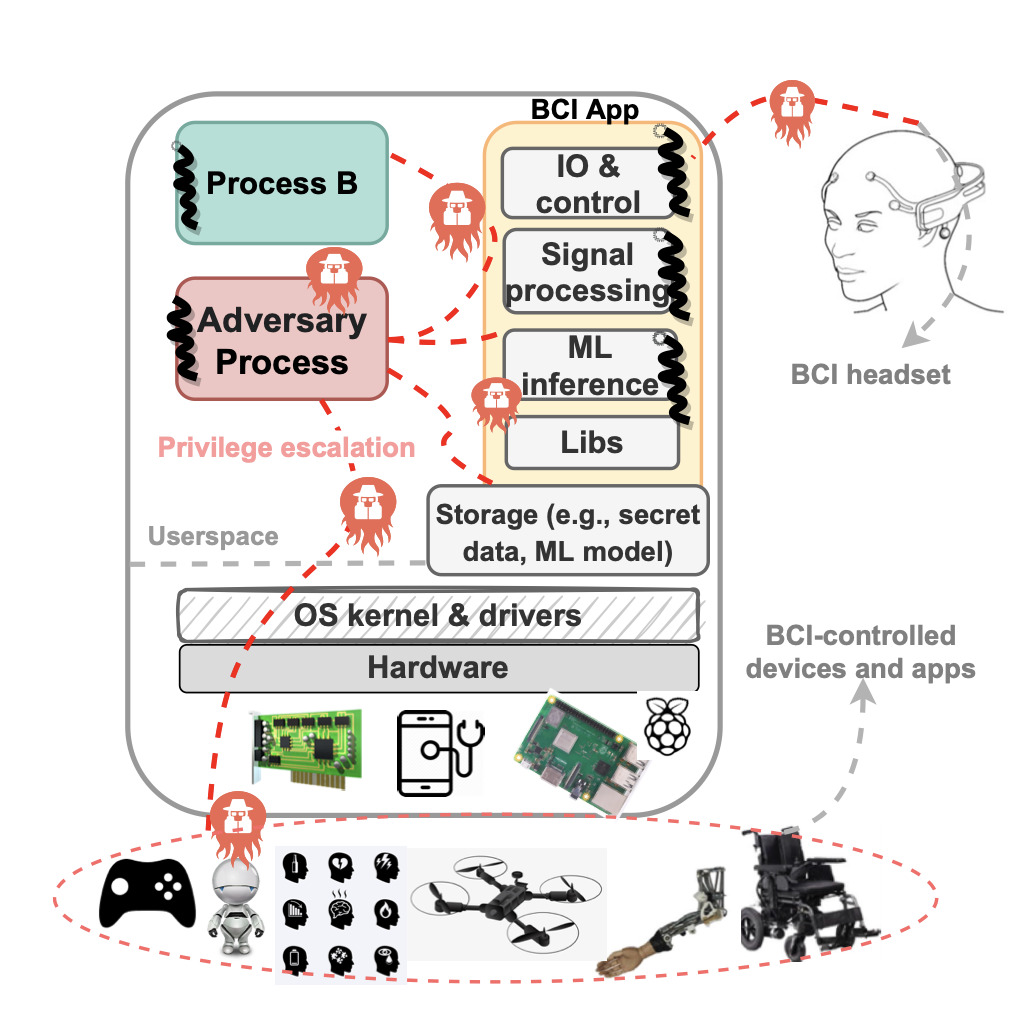

Information Flow for Trusted Execution

2020–present

There is now increased hardware support for improving the security and performance of privilege separation and compartmentalization techniques such as process-based sandboxes, trusted execution environments, and intra-address space compartments. We dub these "hetero-compartment environments" and observe that existing system stacks still assume single-compartment models (i.e. user-space processes), which limits how heterogeneous compartments can be used, integrated and monitored from a security and performance perspective. This project explores how we might deploy techniques such as fine-grained decentralised information flow control (DIFC) to allow developers to securely use and combine compartments, define security policies over shared system resources, audit policy violations, and perform digital forensics across hetero-compartments.
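For readers unfamiliar with DIFC, its core flow rule can be sketched in a few lines of Python. In the general DIFC label model, each compartment carries secrecy and integrity tags, and data may flow only to a destination whose secrecy label is a superset, and whose integrity label is a subset, of the source's. The `Label` and `Monitor` classes and the compartment names are hypothetical illustrations, not this project's API, and the sketch omits essentials such as privileges for declassification and endorsement.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Label:
    secrecy: frozenset[str] = frozenset()    # tags the data must stay protected by
    integrity: frozenset[str] = frozenset()  # tags vouching for the data's provenance

    def can_flow_to(self, other: Label) -> bool:
        # Secrecy may only grow along a flow; integrity may only shrink.
        return self.secrecy <= other.secrecy and other.integrity <= self.integrity


@dataclass
class Monitor:
    """Hypothetical reference monitor mediating flows between compartments."""
    audit_log: list[str] = field(default_factory=list)

    def send(self, src: str, src_label: Label, dst: str, dst_label: Label) -> bool:
        allowed = src_label.can_flow_to(dst_label)
        if not allowed:
            self.audit_log.append(f"denied flow {src} -> {dst}")  # kept for forensics
        return allowed


if __name__ == "__main__":
    enclave = Label(secrecy=frozenset({"patient"}))
    sandbox = Label()
    monitor = Monitor()
    print(monitor.send("sandbox", sandbox, "enclave", enclave))
    print(monitor.send("enclave", enclave, "sandbox", sandbox))
    print(monitor.audit_log)
```

In this toy version, denied flows are simply appended to `audit_log`, a stand-in for the auditing and forensics across compartments that the project aims to support.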

Interspatial OS

2018–present

Digital infrastructure in modern urban environments is currently very Internet-centric and involves transmitting data to physically remote environments. The cost of this is data insecurity, high response latency and unpredictable service reliability. I am working on Osmose: a new OS architecture that inverts the current model by building an operating system designed to securely connect physical spaces, with extremely low-latency, high-bandwidth local-area computation capabilities and service discovery.


OCaml Labs

2012–2021

I founded a research group called OCaml Labs at the University of Cambridge, with the goal of pushing OCaml and functional programming forward as a platform, making it a more effective tool for all users (including large-scale industrial deployments) while also growing the appeal of the language and broadening its applicability and popularity. Over a decade, we retrofitted multicore parallelism into the mainline OCaml runtime, wrote a popular book on the language, and helped start and grow the OCaml package and tooling ecosystem that is thriving today.


Unikernels

2010–2019

I proposed the concept of "unikernels": single-purpose appliances that are specialised at compile time into standalone bootable kernels and sealed against modification when deployed to a cloud platform. In return, they offer significant reductions in image size, improved efficiency and security, and lower operational costs. I co-founded the MirageOS project, one of the first complete unikernel frameworks, and also integrated unikernel technology to create the Docker for Desktop apps that are used by hundreds of millions of users daily.


Personal Containers

2009–2015

As cloud computing empowered the creation of vast data silos, I investigated how decentralised technologies might be deployed to allow individuals more vertical control over their own data. Personal containers was the prototype we built to learn how to stem the flow of our information out to the ad-driven social tarpits. We also deployed personal containers in an experimental data locker system at the University of Cambridge in order to incentivise lower-carbon travel schemes.

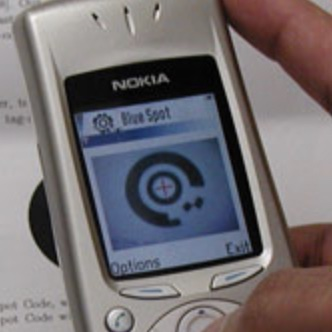

Ubiquitous Interaction Devices

2003–2008

I investigated how to interface the emerging class of smartphone devices (circa 2002) with concepts from ubiquitous computing such as location-aware interfaces and context-aware computing. I discovered the surprisingly positive benefits of piggybacking on simple communication media such as audible sound and visual tags. Some of our implementations resulted in new audio ringtones and visual smart tags that worked on the widely deployed mobile phones of the era.

In 2003, the mobile phone market had grown tremendously and given the average consumer access to cheap, small, low-powered and constantly networked devices that they could reliably carry around. Similarly, laptop computers and PDAs had become common accessories that businesses issued to employees on the move. The research question, then, was how to effectively interface these devices with existing digital infrastructure and realise some of the concepts of ubiquitous computing such as location-aware interfaces or context-aware computing.


Functional Internet Services

2003–2008

My PhD dissertation proposed an architecture for constructing new implementations of standard Internet protocols with integrated formal methods, such as model checking, and functional programming: techniques that were not then used in deployed servers. A more informal summary is "rewrite all the things in OCaml from C!", which led to a merry adventure implementing many network protocols from scratch in a functional style, and learning lots about how to enforce specifications without using a full-blown proof assistant.
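Model checking a protocol can be as lightweight as exhaustively exploring its reachable states. The following Python sketch is a toy explicit-state checker: `check` performs a breadth-first search from an initial state and returns the shortest trace to a state that violates a safety invariant. The `handshake` model (a simplified, hypothetical client/server connection setup with an optional server reset) and the `client_not_ahead` invariant are illustrative assumptions, not protocols or tools from the dissertation.

```python
from __future__ import annotations

from collections import deque
from typing import Callable, Hashable, Iterable, Iterator, TypeVar

S = TypeVar("S", bound=Hashable)


def check(
    initial: S,
    successors: Callable[[S], Iterable[S]],
    invariant: Callable[[S], bool],
) -> list[S] | None:
    """Breadth-first explicit-state search over all reachable states.

    Returns the shortest trace from `initial` to a state violating `invariant`,
    or None if every reachable state satisfies it.
    """
    parent: dict[S, S | None] = {initial: None}  # also serves as the visited set
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if not invariant(state):
            # Walk parent links back to the initial state to build the trace.
            trace = []
            cur: S | None = state
            while cur is not None:
                trace.append(cur)
                cur = parent[cur]
            return trace[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


def handshake(state: tuple[str, str], allow_reset: bool) -> Iterator[tuple[str, str]]:
    """Hypothetical connection-setup model; a state is (client, server)."""
    client, server = state
    if client == "idle":
        yield ("syn_sent", server)         # client initiates
    if client == "syn_sent" and server == "listen":
        yield (client, "established")      # server accepts
    if client == "syn_sent" and server == "established":
        yield ("established", server)      # client sees the acknowledgement
    if allow_reset and server == "established":
        yield (client, "listen")           # server may reset the connection


def client_not_ahead(state: tuple[str, str]) -> bool:
    """Safety invariant: the client is only established if the server is too."""
    client, server = state
    return client != "established" or server == "established"


if __name__ == "__main__":
    start = ("idle", "listen")
    print(check(start, lambda s: handshake(s, allow_reset=False), client_not_ahead))
    print(check(start, lambda s: handshake(s, allow_reset=True), client_not_ahead))
```

With `allow_reset=True` the checker finds a counterexample in which the client still believes the connection is established after the server has reset it; without resets, every reachable state satisfies the invariant and `check` returns `None`.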

Xen Hypervisor

2002–2009

I was on the original team at Cambridge that built the Xen hypervisor in 2002: the first open-source "type-1" hypervisor, which ushered in the age of cloud computing and virtual machines. Xen emerged from the Xenoservers project in the Computer Laboratory's Systems Research Group (SRG), where I started my PhD and hacked on the emerging codebase; I subsequently worked on the development of XenServer, the commercial distribution.