Ubiquitous Interaction Devices

I investigated how to interface the newly emerging class of smartphones (circa 2002) with ubiquitous computing concepts such as location-aware interfaces and context-aware computing. Piggybacking on simple communication media such as audible sound and visual tags turned out to be surprisingly effective, and our implementations resulted in audio ringtones and visual smart tags that worked on the widely deployed mobile phones of the era.

By 2003, the mobile phone market had grown tremendously, giving the average consumer access to cheap, small, low-powered and constantly networked devices that they could reliably carry around. Similarly, laptop computers and PDAs had become common accessories for businesses to equip their employees with when on the move. The research question, then, was how to effectively interface these devices with existing digital infrastructure and realise ubiquitous computing concepts such as location-aware interfaces and context-aware computing.

Ubiquitous Interaction Devices (see original webpages) was a project I started with Dave Scott and Richard Sharp in 2003 to work on this at the Cambridge Computer Laboratory and Intel Research Cambridge (which had just set up a "lablet" within our building and was a great source of free coffee).

1 Audio Networking

The project kicked off when we were idly experimenting with our fancy Nokia smartphones and discovered that their microphones lacked anti-aliasing filters. This allowed us to record and decode ultrasound between the phones. Our 2003 Ubicomp paper, Context-Aware Computing with Sound, describes some of the applications this enabled.

In a nutshell, audio networking uses ubiquitously available sound hardware (i.e., speakers, soundcards and microphones) for low-bandwidth wireless networking. It has several characteristics that differentiate it from existing wireless technologies:

- fine-grained control over the range of transmission by adjusting the volume.

- walls of buildings are typically designed to attenuate sound, making it easy to confine transmission to a single room (unlike, for example, Wi-Fi or Bluetooth).

- existing devices that can play or record audio (e.g. voice recorders) can easily be networked.
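To make the idea concrete, here is a minimal sketch of one way to send bytes over sound: binary frequency-shift keying (FSK), decoded with the Goertzel algorithm. This is an illustrative textbook scheme, not necessarily the encoding our implementation used; the sampling rate, tone frequencies and bit rate are all assumptions, chosen so that each tone completes a whole number of cycles per symbol and lands exactly on a Goertzel bin.

```python
import math

SAMPLE_RATE = 48_000   # Hz; assumed soundcard sampling rate
SYMBOL_SAMPLES = 480   # 10 ms per bit, i.e. 100 bit/s (illustrative choice)
FREQ_ZERO = 2_000.0    # Hz tone for a 0 bit (hypothetical choice)
FREQ_ONE = 3_000.0     # Hz tone for a 1 bit (hypothetical choice)


def tone(freq, n, rate=SAMPLE_RATE):
    """Return n samples of a unit-amplitude sine wave at freq Hz."""
    return [math.sin(2 * math.pi * freq * i / rate) for i in range(n)]


def goertzel_power(samples, freq, rate=SAMPLE_RATE):
    """Signal power at a single frequency, computed with the Goertzel algorithm."""
    coeff = 2 * math.cos(2 * math.pi * freq / rate)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2


def modulate(data):
    """Binary FSK: each bit (most significant first) becomes one fixed-length tone."""
    samples = []
    for byte in data:
        for bit in range(7, -1, -1):
            freq = FREQ_ONE if (byte >> bit) & 1 else FREQ_ZERO
            samples.extend(tone(freq, SYMBOL_SAMPLES))
    return samples


def demodulate(samples):
    """Pick the stronger tone in each symbol window, then regroup bits into bytes."""
    bits = []
    for start in range(0, len(samples) - SYMBOL_SAMPLES + 1, SYMBOL_SAMPLES):
        window = samples[start:start + SYMBOL_SAMPLES]
        one = goertzel_power(window, FREQ_ONE)
        zero = goertzel_power(window, FREQ_ZERO)
        bits.append(1 if one > zero else 0)
    out = bytearray()
    for i in range(0, len(bits) - 7, 8):
        byte = 0
        for b in bits[i:i + 8]:
            byte = (byte << 1) | b
        out.append(byte)
    return bytes(out)
```

For example, `demodulate(modulate(b"http://example.org"))` round-trips the URL. In practice the samples would come from a microphone recording rather than straight from `modulate`, so a real receiver would also need symbol synchronisation and error detection, which this sketch omits.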

The Audionotes video below demonstrates an Audio Pick and Drop interface: an Internet URL is recorded into a PDA from a standard computer speaker. The URL is later retrieved from the PDA by playing it back into the computer, and it can also be printed by carrying the PDA to a print server and playing back the recorded identifier. A great advantage of this approach is that any cheap voice recorder (e.g. mobile phone, PDA or dictaphone) can carry the audio information.

One of the more interesting discoveries during this research was that most computer soundcards do not have good anti-aliasing filters fitted, so they can send and receive inaudible sound at up to 24 kHz (assuming a soundcard sampling at 48 kHz). We used this to create a set of inaudible location beacons that let laptop computers discover their current location simply by listening with their microphones, without any specialised equipment! The location video below demonstrates this.
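A minimal sketch of how such a beacon receiver might work, assuming each room plays a distinct constant tone between 20 kHz (roughly the upper limit of human hearing) and the 24 kHz Nyquist limit. The room names and frequencies in `BEACONS` are hypothetical; the receiver measures each candidate frequency with the Goertzel algorithm and picks the loudest one above a threshold.

```python
import math

SAMPLE_RATE = 48_000        # Hz; assumed soundcard sampling rate
NYQUIST = SAMPLE_RATE / 2   # 24 kHz: the highest frequency it can represent

# Hypothetical beacon plan: one inaudible (> 20 kHz) tone per room.
BEACONS = {
    "room-a": 20_000.0,
    "room-b": 21_000.0,
    "room-c": 22_000.0,
}
assert all(freq < NYQUIST for freq in BEACONS.values())


def tone_amplitude(samples, freq, rate=SAMPLE_RATE):
    """Estimate the amplitude of a sinusoid at freq using the Goertzel algorithm."""
    coeff = 2 * math.cos(2 * math.pi * freq / rate)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    power = s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2
    # For a tone centred on a Goertzel bin, sqrt(power) = amplitude * N / 2.
    return 2 * math.sqrt(max(power, 0.0)) / len(samples)


def locate(samples, min_amplitude=0.05):
    """Return the room whose beacon is loudest, or None if no beacon is heard."""
    if not samples:
        return None
    levels = {room: tone_amplitude(samples, f) for room, f in BEACONS.items()}
    room, level = max(levels.items(), key=lambda item: item[1])
    return room if level >= min_amplitude else None
```

Because the beacon tones sit above the audible range, ordinary speech or music in the room barely affects the result; the main practical constraint is whether a particular speaker and microphone can still reproduce frequencies that close to 24 kHz.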

We then devised a scheme for encoding data into mobile phone ringtones while keeping them pleasant to the ear. This lets the phone network itself deliver data, as in the video example above: an SMS 'capability' is transmitted to a phone and can be played back to gain access to a building. Since the data is just a melody, parents could, for example, purchase cinema tickets online for their children (who don't own credit cards), and the children could still gain entry to the cinema by playing the ringtone capability back on their own mobile phones (which are common among children).
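The details of our melody encoding aren't described here, so the following is a hypothetical illustration of the general idea rather than our actual scheme: split each byte into two 4-bit nibbles and map each nibble to one of 16 notes of a C major pentatonic scale, expressed as MIDI note numbers. The pentatonic scale contains no semitone steps, so even an arbitrary data-driven sequence of its notes tends to sound melodic.

```python
# Hypothetical scheme: C major pentatonic (C, D, E, G, A) as MIDI note numbers.
PENTATONIC_STEPS = [0, 2, 4, 7, 9]
BASE_NOTE = 60  # MIDI 60 = middle C

# The first 16 scale notes starting at middle C: one note per 4-bit nibble value.
SCALE = [BASE_NOTE + 12 * octave + step
         for octave in range(4)
         for step in PENTATONIC_STEPS][:16]
NOTE_TO_NIBBLE = {note: i for i, note in enumerate(SCALE)}


def encode_melody(data):
    """Turn each byte into two notes: high nibble first, then low nibble."""
    notes = []
    for byte in data:
        notes.append(SCALE[byte >> 4])
        notes.append(SCALE[byte & 0x0F])
    return notes


def decode_melody(notes):
    """Invert encode_melody; raises KeyError for notes outside the scale."""
    if len(notes) % 2:
        raise ValueError("melody must contain an even number of notes")
    out = bytearray()
    for hi, lo in zip(notes[0::2], notes[1::2]):
        out.append((NOTE_TO_NIBBLE[hi] << 4) | NOTE_TO_NIBBLE[lo])
    return bytes(out)
```

For example, `encode_melody(b"A")` gives `[69, 62]` (A4 then D4). A real ringtone would also need note durations and a tempo, and the receiving side would have to recover pitches from recorded audio, which this sketch skips.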

We observed that audio networking allows URLs to be easily sent over a telephone conversation and then retrieved by the recipient over a normal high-bandwidth connection. This sidesteps the awkwardness of "locating" someone on the Internet, which is rife with restrictive firewalls and Network Address Translation (NAT) that destroy end-to-end connectivity. In contrast, it is trivial to locate someone on the phone network given their telephone number, but harder to perform the high-bandwidth data transfers at which the Internet excels. There's more detail on these use cases in Using smart phones to access site-specific services.

2 SpotCode Interfaces

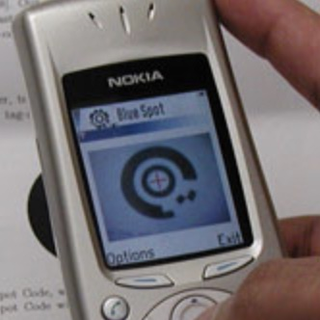

Once we'd had such success with audio networking, a natural next step was to use the fancy new cameras present on smartphones. Eben Upton joined our project and in short order knocked up a real-time circular barcode detector for Symbian phones, which we demonstrated in Using Camera-Phones to Enhance Human-Computer Interaction.

The phone app we built could detect our circular barcodes in real time, unlike the ungainly "click and wait" experiences of the time. Since the phone computes the relative rotation angle of detected tags in real time, an intuitive "volume control" was possible by simply locking onto a tag and physically rotating the phone. The videos demonstrate a volume control in the "SpotCode Jukebox", and how further interfaces could be "thrown" to the phone for detailed interactions.
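The rotation-based volume control can be sketched as follows, assuming the tag detector reports, for every frame, the image coordinates of the tag's centre and of an orientation marker on it. The `RotaryVolume` class and its parameters are hypothetical, not our original code; the key step is wrapping the angle difference into [-180, 180) degrees so that turning through the ±180° boundary does not cause a jump in volume.

```python
import math


class RotaryVolume:
    """Hypothetical "volume knob": rotating the phone relative to a tag changes the level."""

    def __init__(self, volume=50.0, degrees_per_step=3.0, lo=0.0, hi=100.0):
        self.level = float(volume)
        self.degrees_per_step = degrees_per_step  # rotation needed per volume unit
        self.lo, self.hi = lo, hi
        self._last_angle = None

    @staticmethod
    def tag_angle(centre, marker):
        """Angle in degrees of the centre-to-marker vector in image coordinates."""
        return math.degrees(math.atan2(marker[1] - centre[1], marker[0] - centre[0]))

    def lock(self, centre, marker):
        """Start tracking a tag at its current orientation."""
        self._last_angle = self.tag_angle(centre, marker)

    def update(self, centre, marker):
        """Feed one frame's detection and return the new volume, rounded."""
        angle = self.tag_angle(centre, marker)
        if self._last_angle is None:
            self._last_angle = angle
            return round(self.level)
        # Wrap the difference into [-180, 180) so crossing +/-180 degrees is seamless.
        delta = (angle - self._last_angle + 180.0) % 360.0 - 180.0
        self._last_angle = angle
        self.level = min(self.hi, max(self.lo, self.level + delta / self.degrees_per_step))
        return round(self.level)
```

Tracking the per-frame change rather than the absolute angle lets the user "wind" the knob through several turns, and the clamp keeps the level in range. This assumes frames arrive often enough that the phone turns less than 180° between detections; which rotation direction means "louder" (image y-axes usually point downwards) is a design choice.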

We then built some more elaborate public applications (such as a travel booking shop) in Using camera-phones to interact with context-aware mobile services and Using smart phones to access site-specific services. When Eleanor Toye Scott joined the project, we conducted structured user studies to evaluate how effective the tags were in Interacting with mobile services: an evaluation of camera-phones and visual tags. As a side note, the whole zooming interface was written in OCaml and OpenGL. We spun out a startup company called High Energy Magic Ltd., and made it into the New York Times and Wired (alongside a decaying prawn sandwich).

It also became obvious that the technology was really robust, since it worked fine on printed (and crumpled) pieces of paper, making it ideal for public signage. We used this to experiment with more robust device discovery in Using visual tags to bypass Bluetooth device discovery. This subsequently led us to show how smartphones could act as trusted side-channels in Enhancing web browsing security on public terminals using mobile composition, an idea that is now (as of 2020) being realised with the trusted computing chips in modern smartphones.

3 Towards smarter environments

At the Computer Laboratory, we also happen to have one of the world's best indoor location systems (the Active Bat), which we inherited from AT&T Research when its Cambridge lab shut down. Ripduman Sohan, Alastair Tse and Kieran Mansley joined forces with us to investigate how commodity hardware could interface with this location system to make truly futuristic buildings possible.

A few really fun projects resulted from this:

- We used the Bat system to help with Bluetooth-based indoor location inference in A Study of Bluetooth Propagation Using Accurate Indoor Location Mapping.

- We interfaced audio networking with the AT&T "broadband phones" in The Broadband Phone Network: Experiences with Context-Aware Telephony.

- We constructed a real-time capture the flag game in the Computer Lab with augmented reality "windows" into the game in Feedback, latency, accuracy: exploring tradeoffs in location-aware gaming.

- I worked with my Recoil-in-chief Nick Ludlam on digital TV interfaces in Ubiquitous Computing needs to catch up with Ubiquitous Media.

In around 2005, we sold High Energy Magic Ltd. to a Dutch entrepreneur so that Richard Sharp, Dave Scott and I could join the Xen hypervisor startup company. However, the ubiquitous computing ideals that drove much of our work have persisted, and in 2018 I started thinking about them again as part of my Interspatial OS project. The idea of building truly ubiquitous environments (without smartphones) is resurrected there, and you can start reading about it in An architecture for interspatial communication.