Royal Society's Future of Scientific Publishing meeting / Jul 2025

I was a bit sleepy getting into the Royal Society Future of Scientific Publishing conference early this morning, but was quickly woken up by the dramatic passion on show as publishers, librarians, academics and funders all got together for a "frank exchange of views" at a meeting that didn't pull any punches!

These are my hot-off-the-press livenotes and only lightly edited; a more cleaned up version will be available from the RS in due course.

Mark Walport sets the scene

Sir Mark Walport was a delightful emcee for the proceedings of the day, and opened by stressing how important the moment is for the future of how we conduct science. Academic publishing faces a perfect storm: peer review is buckling under enormous volume, funding models are broken and replete with perverse incentives, and the entire system groans with inefficiency.

The Royal Society is the publisher of the world's oldest continuously published scientific journal, Philosophical Transactions (since 1665), and has convened this conference for academies worldwide. The overall question is: what is a scientific journal in 2025 and beyond? Walport traced the economic evolution of publishing: for centuries, readers paid through subscriptions (I hadn't realised that the early editions of the RS used to be sent for free to libraries worldwide until the current commercial model arrived about 80 years ago). Now, the pendulum has swung to an open access model that creates perverse incentives, prioritizing volume over quality. He called it a "smoke and mirrors" era where diamond open access models obscure who actually pays for the infrastructure of knowledge dissemination: is it the publishers, the governments, the academics, the libraries, or some combination of the above? The profit margins of the commercial publishers answer that question for me...

He then identified the transformative forces that are a forcing function:

- LLMs have entered the publishing ecosystem

- The proliferation of journals has created an attention economy rather than a knowledge economy

- Preprint archives are reshaping how research is shared quickly

The challenges ahead while dealing with these are maintaining metadata integrity, preserving the scholarly archive into the long term, and ensuring systematic access for meta-analyses that advance human knowledge.

Historical Perspectives: 350 Years of Evolution

The opening pair of speakers were unexpected: they brought a historical and linguistic perspective to the problem. I found both of these talks the highlights of the day! Firstly, Professor Aileen Fyfe drew upon her research into 350 years of the Royal Society archives. Back in the day, there was no real fixed entity called a "scientific journal". Over the centuries, everything from editorial practices to publication methods to the means of dissemination has transformed repeatedly, so we shouldn't view the status quo as set in stone.

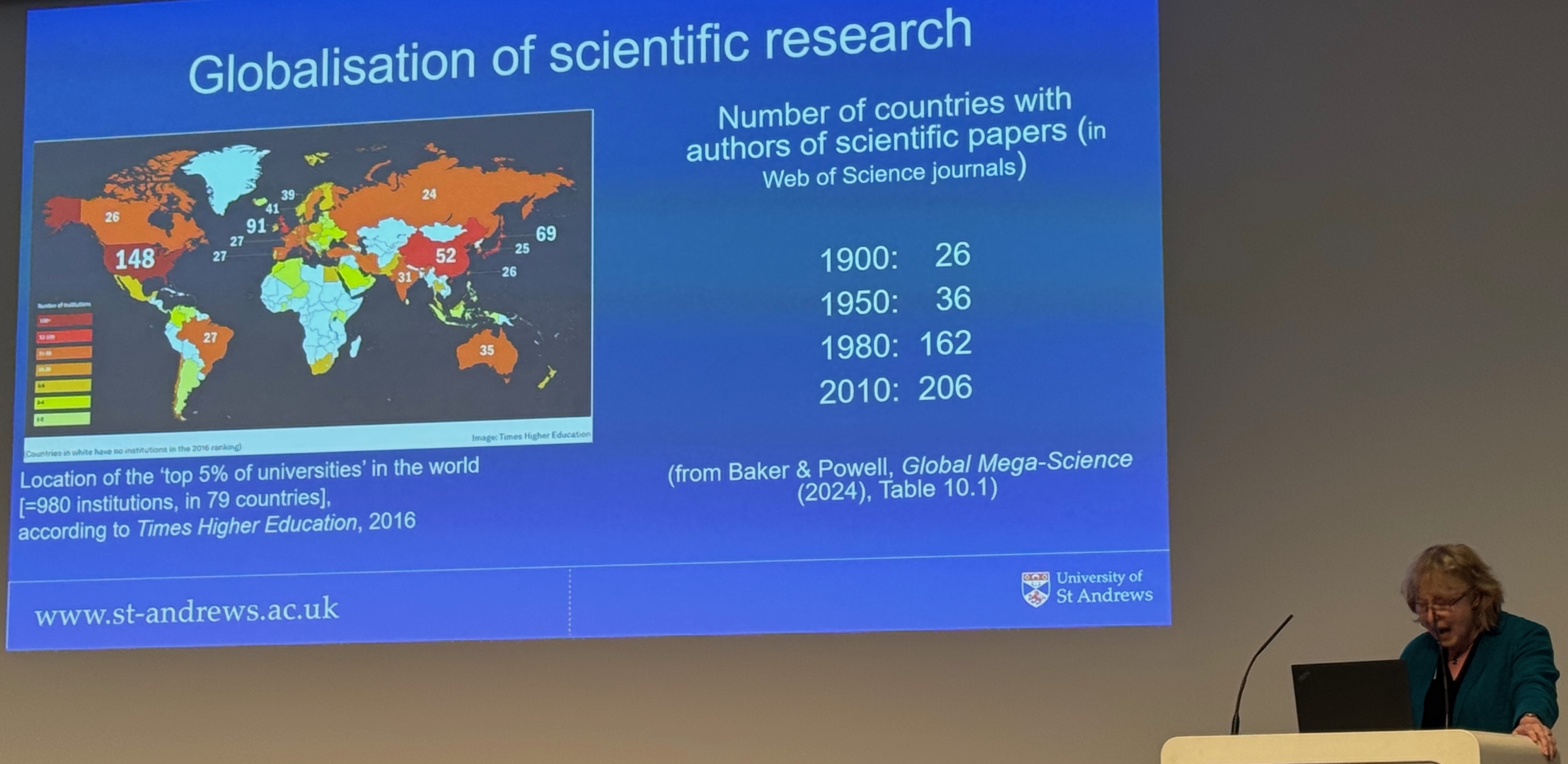

While the early days of science were essentially people writing letters to each other, the post-WWII era of journals marked the shift to "scale". The tools for distance communication (i.e. publishing collected issues) and universities switching from being teaching focused over to today's research-centric publishing ecosystem were both key factors. University scientists used to produce 30% of published articles in 1900; by 2020, that figure exceeded 80%. This parallels the globalization of science itself in the past century; research has expanded well beyond its European origins to encompass almost all institutions and countries worldwide.

Amusingly, Prof Fyfe pointed out that a 1960 Nature editorial asked "How many more new journals?" even back then! The 1950s did bring some standardization efforts (nomenclature, units, symbols), although citation formats seem to robustly resist uniformity. English was also explicitly selected as the "default language for science", and peer review was formalised via papers like "Uniform requirements for manuscripts submitted to biomedical journals" (in 1979). US Congressional hearings with the NSF began distinguishing peer review from other evaluation methods.

All of this scale was then "solved" by financialisation after WWII. At the turn of the 20th century, almost no journals generated any profit (the Royal Society distributed its publications freely). By 1955, financial pressures and growing scale of submissions forced a reckoning, leading to more self-supporting models by the 1960s. An era of mergers and acquisitions among journals followed, reshaping the scientific information system.

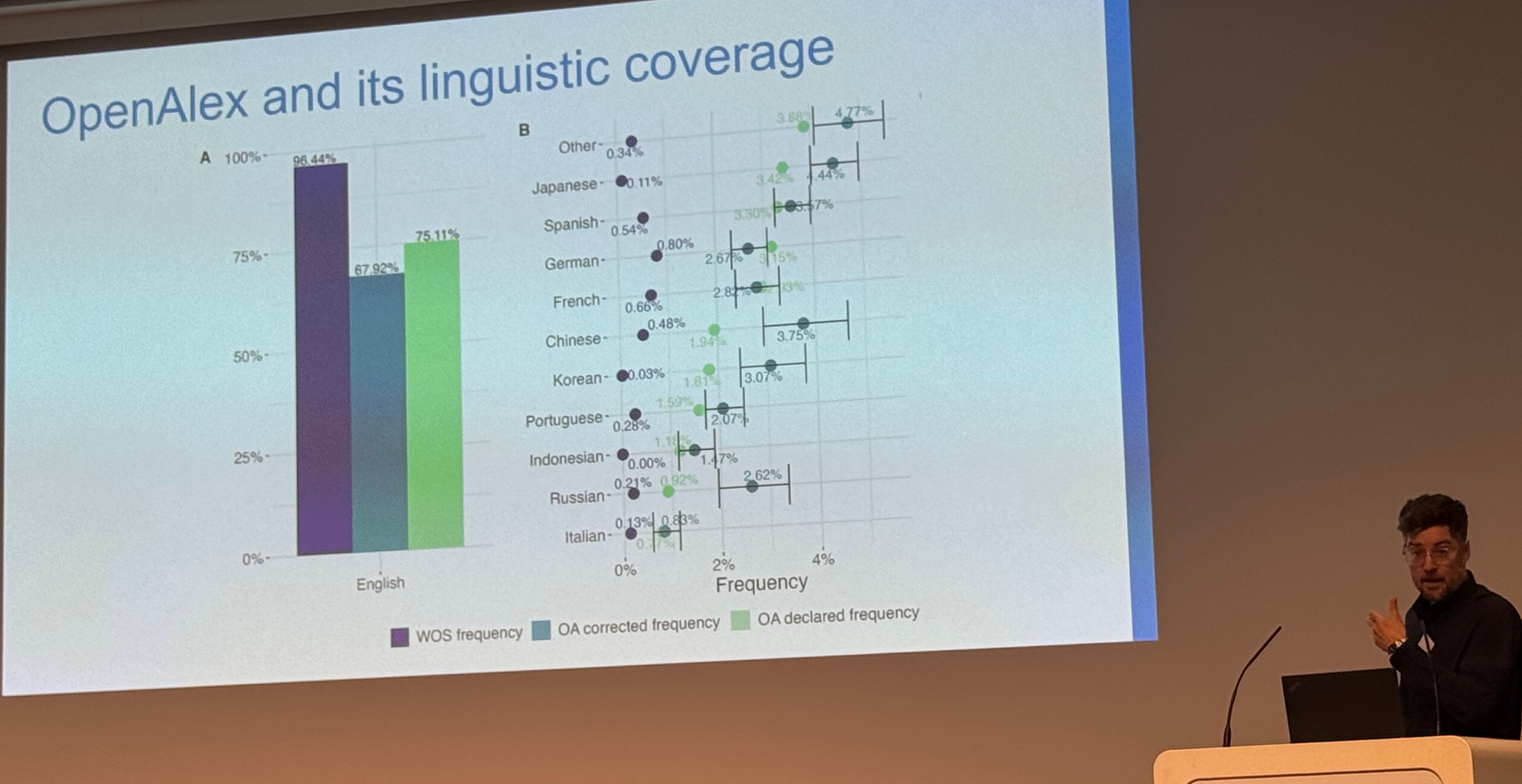

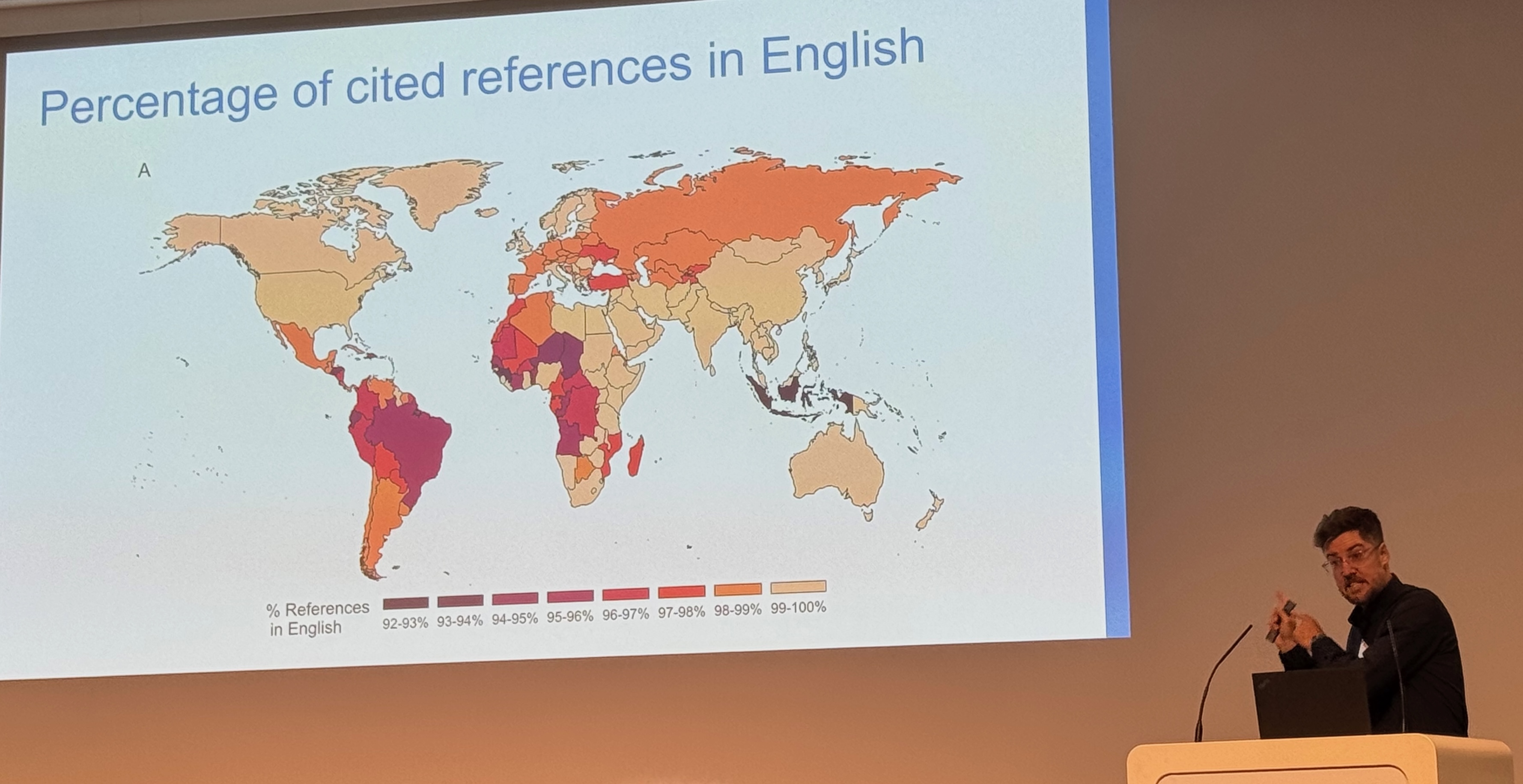

Professor Vincent Larivière then took the stage to dispel some myths of English monolingualism in scientific publishing. While English offers some practical benefits, the reality at non-Anglophone institutions (like his own Université de Montréal) reveals that researchers spend significantly more time reading, writing, and processing papers as non-native language speakers, and often face higher rejection rates as a result of this. This wasn't always the case though; Einstein published primarily in German, not English!

He went on to note that today's landscape for paper language choices is more diverse than is commonly assumed. English represents only 67% of publications, a figure which itself has been inflated by non-English papers that are commonly published with English abstracts. Initiatives like the Public Knowledge Project have enabled growth in Indonesia and Latin America, for example. Chinese journals now publish twice the volume of English-language publishers, but are difficult to index, which makes Larivière's numbers even more interesting: a growing majority of the world is no longer publishing in English! I also heard this on my trip to China in 2023 with the Royal Society; the scholars we met had a sequence of Chinese language journals they submitted to, often before "translating" the outputs to English journals.

All this leads us to believe that the major publishers' market share is smaller than commonly believed, which gives us reason to hope for change! Open access adoption worldwide currently varies fairly dramatically by per-capita wealth and geography, but reveals substantive greenspace for publishing beyond the major commercial publishers. Crucially, Larivière argued that research "prestige" is a socially constructed phenomenon, and not intrinsic to quality.

In the Q&A, Magdalena Skipper (Nature's Editor-in-Chief) noted that the private sector is reentering academic publishing (especially in AI topics). Fyfe noted the challenge of tracking private sector activities; e.g. varying corporate policies on patenting and disclosure mean they are hard to index. A plug from Coherent Digital noted they have catalogued 20 million reports from non-academic research; this is an exciting direction (we've got 30TB of grey literature on our servers, still waiting to be categorised).

What researchers actually need from STEM publishing

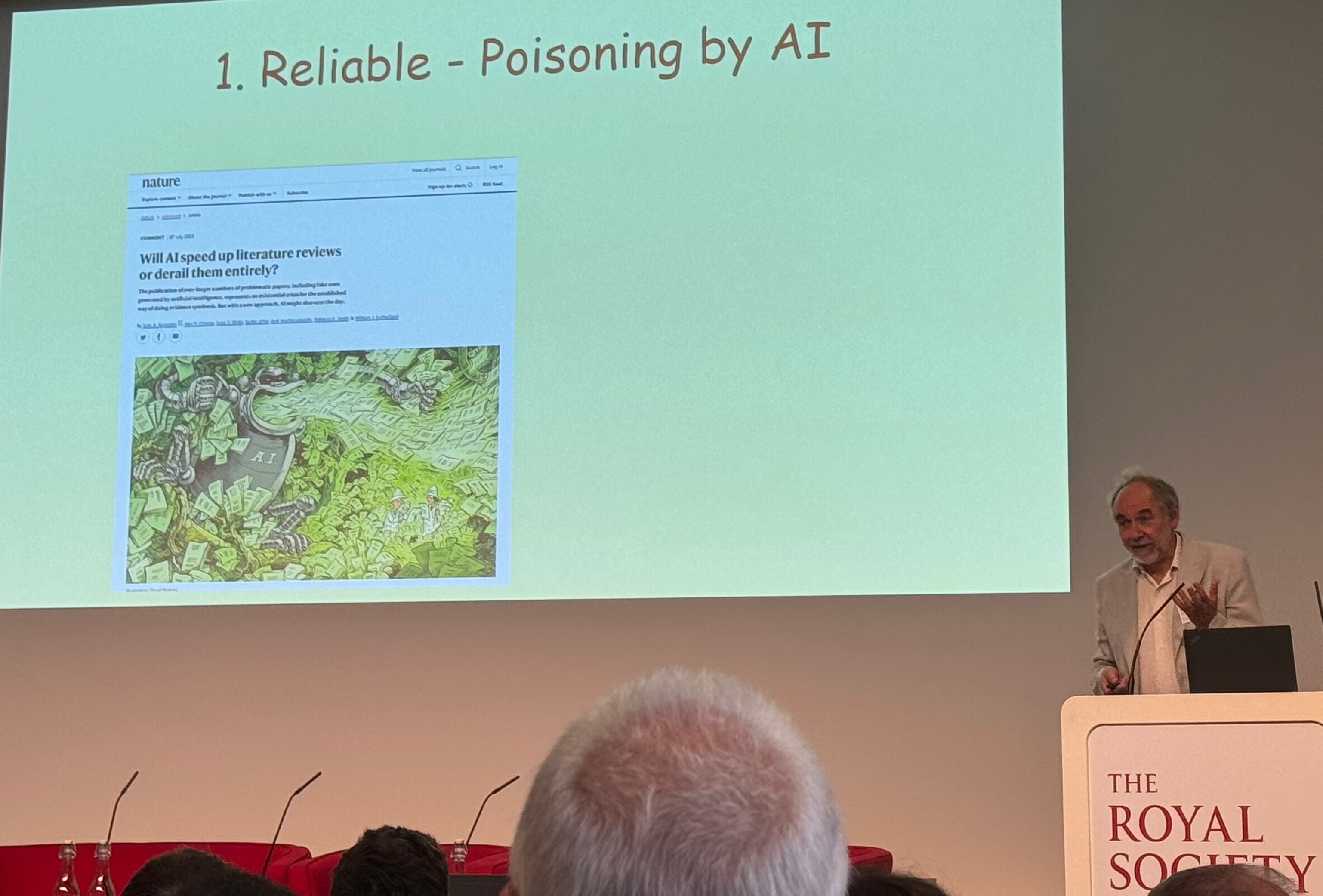

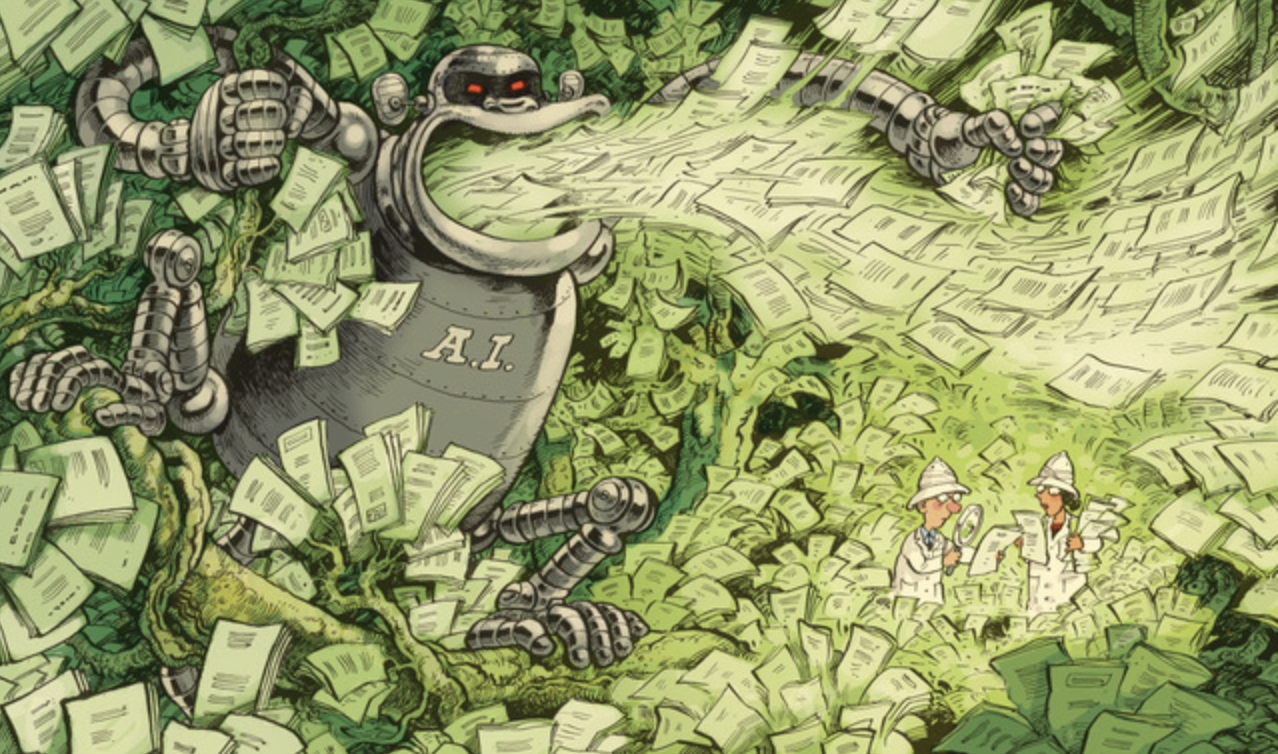

Our very own Bill Sutherland opened with a sobering demonstration of "AI poisoning" in the literature, referencing our recent Nature comment. He did the risky-but-catchy generation of a plausible-sounding but entirely fabricated conservation study using an LLM and noted how economically motivated rational actors might quite reasonably use these tools to advance their agendas via the scientific record. And recovering from this will be very difficult indeed once it mixes up with real science.

Bill then outlined our emerging approach to subject-wide synthesis via:

- Systematic reviews: Slow, steady, comprehensive

- Rapid reviews: Sprint-based approaches for urgent needs

- Subject-wide evidence synthesis: Focused sectoral analyses

- Ultrafast bespoke reviews: AI-accelerated with human-in-the-loop

Going back to what journals are for in 2025, Bill then discussed how they were originally vehicles for exchanging information through letters, but now serve primarily as stamps of authority and quality assurance. In an "AI slop world," this quality assurance function becomes existentially important, but shouldn't necessarily be implemented in the current system of incentives. So then, how do we maintain trust when the vast majority of submissions may soon be AI-generated? (Bill and I scribbled down a plan on the back of a napkin for this; more on that soon!)

Early Career Researcher perspectives

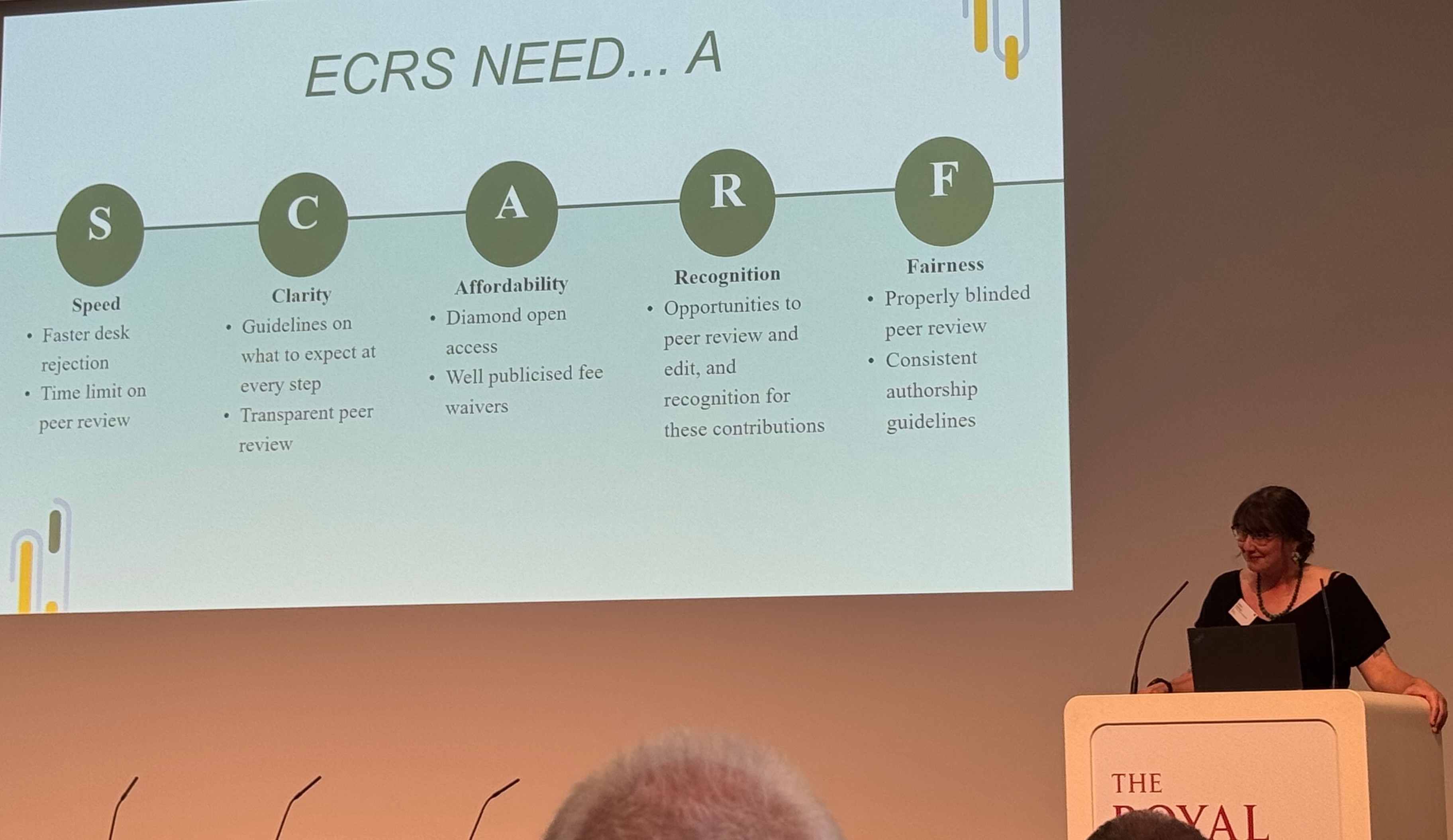

Dr. Sophie Meekings then took the stage to discuss the many barriers facing early career researchers (ECRs). They're on short-term contracts, are dependent on other people's grant funding, and yet are the ones conducting the frontline research that drives scientific progress. And this is after years spent on poorly paid PhD stipends!

ECRs require:

- clear, accessible guidelines spelling out each publishing stage without requiring implicit knowledge of the "system"

- constructive, blinded peer review that educates rather than gatekeeps

- consistent authorship conventions like CRediT (Contributor Roles Taxonomy)

Dr. Meekings then noted how the precarious nature of most ECR positions creates cascading complications for individuals. When job-hopping between short-term contracts, who funds the publication of work from previous positions? How do ECRs balance completing past research with new employers' priorities? Eleanor Toye Scott also had this issue when joining my group a few years ago, as it took a significant portion of her time in the first year to finish up her previous publication from her last research contract.

If we're going to fix the system itself, then ECRs need better incentives for PIs to publish null results and exploratory work, the councils need to improve support for interdisciplinary research that doesn't fit traditional journal boundaries (as these are the frontiers between "conventional" sciences where many ECRs will work), and there needs to be recognition that ECRs often lack the networks for navigating journal politics where editors rule supreme.

Dr. Meekings summarized ECR needs with an excellent new acronym (SCARF) that drew a round of applause!

- Speed in publication processes

- Clarity in requirements and decisions

- Affordability of publication fees

- Recognition of contributions

- Fairness in review and credit

The audience Q&A was quite robust at this point. The first question was about how might we extend the evidence synthesis approach widely? Bill Sutherland noted that we are currently extending this to education working with Jenny Gibson. Interconnected datasets across subjects are an obvious future path for evidence datasets, with common technology for handling (e.g.) retracted datasets that can be applied consistently. Sadiq Jaffer and Alec Christie are supervising projects on evidence synthesis this summer on just this topic here in Cambridge.

Another question was why ECRs feel that double blind review is important. Dr. Meekings noted that reviewers may not take ECR peer reviews as seriously, but this could be fixed by opening up peer review and assigning credit after the process is completed and not during. Interestingly, the panel all liked double-blind review, which is the norm in computer science but not in other science journals. Someone from the BMJ noted that there exists a lot of research into blinding; they summarised it as showing that blinding doesn't work on the whole (people know who it is anyway) and that open review doesn't cause any of the problems that people think it causes.

A really interesting comment from Mark Walport was that a grand scale community project could work for the future of evidence collation, but this critically depends on breaking down the current silos since it doesn't work unless everyone makes their literature available. There was much nodding from the audience in support of this line of thinking.

Charting the future for scientific publishing

The next panel brought together folks from across the scientific publishing ecosystem, moderated by Clive Cookson of the Financial Times. This was a particularly frank and pointed panel, with lots of quite direct messages being sent between the representatives of libraries, publishers and funders!

Amy Brand (MIT Press) started by delivering a warning about conflating "open to read" with "open to train on". She pointed out that when MIT Press did a survey across their authors, many of them raised concerns about the reinforcement of bias through AI training on scientific literature. While many of the authors acknowledged a moral imperative to make science available for LLM training, they also wanted to choose whether their own work is used for this. She urged the community to pause and ask fundamental questions like "AI training, at what cost?" and "to whose benefit?". I did think she made a good point by drawing parallels with the early internet, where Brand pointed out that lack of regulation accelerated the decline of non-advertising-driven models. Her closing question asked: if search engines merely lead to AI-generated summaries, why serve the original content at all? This is something we discuss in our upcoming Aarhus paper on an Internet ecology.

Danny Kingsley from Deakin University Library then delivered a biting perspective as a representative of libraries. She said that libraries are "the ones that sign the cheques that keeps the system running", which the rest of the panel all disagreed with in the subsequent discussion (they all claimed to be responsible, from the government to the foundations). Her survey of librarians was interesting; they all asked for:

- Transparent peer review processes

- Unified expectations around AI declarations and disclosures

- Licensing as open as possible, resisting the "salami slicing" of specific use. We also ran across this problem of overly precise restrictions on use while building our paper corpus for CE.

Kingsley had a great line that "publishers are monetizing the funding mandate", which Charlotte Deane later also said was the most succinct way she had heard to describe the annoyance we all have with the vast profit margins of commercial publishers. Kingsley highlighted this via the troubling practices of the IEEE and the American Chemical Society, which charge for placing work in repositories under green open access. Her blunt assessment was that publishers are not negotiating in good faith. Her talk drew the biggest applause of the day by far.

After this, John-Arne Røttingen (CEO of the Wellcome Trust) emphasised that funders depend on scientific discourse as a continuous process of refutations and discussions. He expressed concern about overly depending on brand value as a proxy for quality, calling it eventually misleading even if it works sometimes in the short term. The key priorities for the WT are ensuring that reviewers have easy access to all literature, supporting evidence synthesis initiatives to translate research into impact, and controlling the open body of research outputs through digital infrastructure to manage the new scale. However, his challenge lies in maintaining sustainable financing models for all this research data; he noted explicitly that the Wellcome would not cover open access costs for commercial publishers.

Røttingen further highlighted the concerns of the Global Biodata Coalition (of which he was a member) about US data resilience, and framed research infrastructure as "a global public good" requiring collective investment and fair financing across nations. Interestingly, he explicitly called out UNESCO as a weak force in global governance for this from the UN; I hadn't even realised that UNESCO was responsible for this stuff!

Finally, Prof Charlotte Deane from the EPSRC also discussed what a scientific journal is for these days. It's not for proofreading or typesetting anymore and (as Bill Sutherland also noted earlier) the stamp of quality is key. Deane argued that "research completion" doesn't happen until someone else can read it and reasonably verify the methods are sound; not something that can happen without more open access. Deane also warned of the existential threat of AI poisoning since "AI can make fake papers at a rate humans can't imagine. It won't be long before most of the content on the Internet will be AI generated".

The audience Q&A was very blunt here. Stefanie Haustein pointed out that we are pumping billions of dollars into the publishing industry, much of which consists of shareholder-owned companies, and so we are losing a significant percentage of each dollar spent. There is enough money in the system, but it's very inefficiently deployed right now!

Richard Sever from openRxiv asked how we pay for this when major funders like the NIH have issued a series of unfunded open data mandates over recent years. John-Arne Røttingen noted that UNESCO is a very weak global body and not influential here, but that we need coalitions of the willing to build such open data approaches from the bottom up. Challenging the publisher hegemony can only be done as a pack, which led nicely onto the next session after lunch where the founder of OpenAlex would be present!

Who are the stewards of knowledge?

After lunch (where sadly, the vegetarian options were terrible but luckily I had my trusty Huel bar!), we reconvened with a panel debating who the stewards of the scientific record should be. This brought together perspectives from commercial publishers (Elsevier), open infrastructure advocates (OpenAlex), funders (MRC), and university leadership (pro-VC of Birmingham).

Victoria Eva (SVP from Elsevier) opened by describing the "perfect storm" facing their academic publishing business, as they had 600k more submissions this year than the previous year. There was a high level view on how their digital pipeline "aims to insert safeguards" throughout the publication process to maintain integrity. She argued in general terms for viewing GenAI through separate lenses of trust and discoverability, and argued that Elsevier's substantial technological investments position them to manage both challenges well. I was predisposed to dislike excuses from staggeringly profitable commercial publishers, and I did find her answers on providing bulk access to their corpus unsatisfying. While she highlighted their growing open access base of papers, she also noted that the transition to open access cannot happen overnight (my personal translation is that this means slow-walking). She mentioned special cases in place for TDM in the Global South and healthcare access (presumably at the commercial discretion of Elsevier).

Jason Priem from OpenAlex (part of OurResearch) then offered a radically different perspective. I'm a huge fan of OpenAlex, as we use it extensively in the CE infrastructure. He disagreed with the conference framing of publishers as "custodians" or "stewards," noting that these evoke someone maintaining a static, old lovely house. Science isn't a static edifice but a growing ecosystem, with more scientists alive today than at any point in history. He instead proposed a "gardener" as a better metaphor; the science ecosystem needs to nourish growth rather than merely preserving what exists. Extending the metaphor, Priem contrasted French and English garden styles: French gardens constrain nature into platonic geometric forms, while English gardens embrace a more rambling style that better represents nature's inherent diversity. He argued that science needs to adopt the "English garden" approach and that we don't have an information overload problem but rather "bad filters" (to quote Clay Shirky).

Priem advocated strongly for open infrastructures since communities don't just produce papers but also software, datasets, abstracts, and things we don't envision yet. If we provide them with the "digital soil" (open infrastructure) then they will prosper. OpenAlex and Zenodo are great examples of how such open infrastructure holds up here. I use both all the time; I'm a huge fan of Jason's work and talk.

Patrick Chinnery from the Medical Research Council brought the funder perspective with some numbers: publishing consumes 1 to 2% of total research turnover funds (roughly £24 million for UKRI). He noted that during the pandemic, decision-makers were reviewing preprint data in real-time to determine which treatments should proceed to clinical trials, and decisions had to be reversed after peer review revealed flaws. He emphasised the need for more real time quality assurance in rapid decision-making contexts.

Adam Tickell from the University of Birmingham declared the current model "broken", and noted that each attempt at reform fails to solve the basic problem of literature access (something I've faced myself). He noted that David Willetts (former UK Minister for Science) couldn't access paywalled material while minister of science in government (!) which significantly influenced subsequent government policy towards open access. Tickell was scathing about the oligopolies of Elsevier and Springer, arguing their profit margins are out of proportion with the public funding for science. He noted that early open access attempts from the Finch Report were well-intentioned but ultimately insufficient to break the hegemony. Perhaps an opportunity for a future UK National Data Library... Tickell closed his talk with an observation about the current crisis of confidence in science. This did make me think of a recent report on British confidence in science, which shows the British public still retains belief in scientific institutions. So at least we're doing better than the US in this regard for now!

The Q&A session opened with Mark Walport asking how Elsevier manages to publish so many articles. Victoria Eva from Elsevier responded that they receive 3.5m articles annually with ~750k published. Eva mentioned something about "digital screening throughout the publication process" but acknowledged that this was a challenge due to the surge from paper mills. A suggestion of paying peer reviewers was raised from the audience but not substantively addressed. Stefanie Haustein once again made a great point from the audience about how Elsevier could let through AI generated rats with giant penises with all this protection in place; clearly, some papers have been published by them with no humans ever reading them. This generated a laugh from the audience, and an acknowledgment from the Elsevier rep that they needed to invest more and improve.
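As a quick back-of-the-envelope check on the numbers quoted in this session (my own arithmetic, not something presented at the meeting), here is what they imply. This assumes that the 3.5m received and ~750k published figures refer to the same year, and that the "600k more submissions" is measured against the year before; neither assumption was confirmed on stage.

```python
# Figures as quoted during the session (approximate).
submitted_this_year = 3_500_000   # "3.5m articles" received annually
published_this_year = 750_000     # "~750k published"
extra_submissions = 600_000       # "600k more submissions this year"

# Share of submissions that end up published (assumes same-year figures).
acceptance_rate = published_this_year / submitted_this_year

# Implied submissions last year, and the year-on-year growth (assumption:
# the 600k increase is relative to the immediately preceding year).
submitted_last_year = submitted_this_year - extra_submissions
growth = extra_submissions / submitted_last_year

print(f"Implied acceptance rate: {acceptance_rate:.1%}")
print(f"Implied submissions last year: {submitted_last_year:,}")
print(f"Implied year-on-year growth: {growth:.1%}")
```

Under those assumptions, roughly one in five submissions gets published, and submissions grew by about a fifth in a single year.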

How to make open infrastructure sustainable

My laptop power ran out at this point, but the next panel was an absolute treat as it had both Kaitlin Thaney and Jimmy Wales of Wikipedia fame on it!

Jimmy Wales made an interesting point from his "seven rules of trust": a key one is to be personal, with human-to-human contact, and not to run too quickly to technological solutions. Rather than, for example, asking what percentage of academic papers showed evidence of language from ChatGPT, it's more fruitful to ask whether the science contained within the paper is good instead of how it's written. There are many reasons why someone might have used ChatGPT (non-native speakers etc) but also many unrelated reasons why the science might be bad.

Kaitlin Thaney pointed out the importance of openness given the US assault on science, since openness means that open data repositories can reasonably be replicated elsewhere as well.

Ian Mulvaney pointed out that Nature claims to have invested $240m in research infrastructure, and this is a struggle for a medium sized publisher (like his own BMJ). Open infrastructure allows sharing and creation of value to make it possible to let these smaller organisations survive.

When it comes to policy recommendations, what did the panel have to say about a more trustworthy literature?

- The POSI principles came up as important levels.

- Kaitlin mentioned the FOREST framework funded by Arcadia and how they need to manifest in concrete infrastructure. There's an implicit reliance on infrastructure that you only notice when it's taken away! Affordability of open is a key consideration as well.

- Jimmy talked about open source software, and how what generally works is not one-size-fits-all. Some projects are run by companies (it's their main product and they sell services), and others by individuals. If we bring this back to policy, we need to look at preserving what's already working sustainably and supporting it. Don't try to find a general solution but adopt targeted, well thought through interventions instead.

I'm updating this as I go along but running out of laptop battery too!

Is AI poisoning the scientific literature? Our comment in Nature / Jul 2025

For the past few years, Sadiq Jaffer and I have been working with our colleagues in Conservation Evidence to do analysis at scale on the academic literature. Getting local access to millions of fulltext papers has not been without drama, but was made possible thanks to huge amounts of help from our University Library, who helped us navigate our relationships with scientific publishers. We have just published a comment in Nature about the next phase of our research, where we are looking into the impact of AI advances on evidence synthesis.

Will AI speed up literature reviews or derail them entirely? / Jul 2025

Will AI speed up literature reviews or derail them entirely?

Sam Reynolds, Alec Christie, Lynn Dicks, Sadiq Jaffer, Anil Madhavapeddy, and Bill Sutherland.

Journal paper in Nature (vol 643 issue 8071).

EEG internships for the summer of 2025 / Jun 2025

The exam marking is over, and a glorious Cambridge summer awaits! This year, we have a sizeable cohort of undergraduate and graduate interns joining us from next week.

This note serves as a point of coordination to keep track of what's going on, and I'll update it as we get ourselves organised. If you're an intern, then I highly recommend you take the time to carefully read through all of this, starting with who we are, some ground rules, where we will work, how we chat, how to get paid, and of course social activities to make sure we have some fun!

BIOMASS launches to measure forest carbon flux from space / Jun 2025

The BIOMASS forest mission satellite was successfully boosted into space a couple of days ago, after decades of development from just down the road in Stevenage. I'm excited by this because it's the first global-scale P-band SAR instrument that can penetrate forest canopies to look underneath. This, when combined with hyperspectral mapping, will give us a lot more insight into global tree health.

Weirdly, the whole thing almost never happened because permission to use the P-band was blocked because it might interfere with US nuclear missile warning radars back in 2013.

Meeting in Graz, Austria, to select the 7th Earth Explorer mission to be flown by the 20-nation European Space Agency (ESA), backers of the Biomass mission were pelted with questions about how badly the U.S. network of missile warning and space-tracking radars in North America, Greenland and Europe would undermine Biomass’ global carbon-monitoring objectives.

Europe's Earth observation satellite system may be the world's most dynamic, but as it pushes its operating envelope into new areas, it is learning a lesson long ago taught to satellite telecommunications operators: Radio frequency is scarce, and once users have a piece of it they hold fast. -- Spacenews (2013)

Luckily, all this got sorted by international frequency negotiators, and after being built by Airbus in Stevenage (and Germany and France, as it's a complex instrument!) it took off without a hitch. Looking forward to getting my hands on the first results later in the year over at the Centre for Earth Observation.

Check out this cool ESA video about the instrument to learn more, and congratulations to the team at ESA. Looking forward to the next BIOSPACE where there will no doubt be initial buzz about this.

Update 28th June 2025: See also this beautiful BBC article about the satellite, via David Coomes.

Steps towards an ecology of the Internet / Jun 2025

Every ten years, the city of Aarhus throws a giant conference to discuss new agendas for critical action and theory in computing. Back in 2016, Hamed Haddadi, Jon Crowcroft and I posited the idea of personal data stores, a topic that is just now becoming hot due to agentic AI. Well, time flies, and I'm pleased to report that our second decennial thought experiment on "Steps towards an Ecology for the Internet" will appear at the 2025 edition of Aarhus this August!

This time around, we projected our imaginations forward a decade to imagine an optimistic future for the Internet, when it has exceeded a trillion nodes. After deciding in the pub that this many nodes was too many for us to handle, we turned to our newfound buddies in conservation to get inspiration from nature. We asked Sam Reynolds, Alec Christie, David Coomes and Bill Sutherland first year undergraduate questions about how natural ecosystems operate across all levels of scale: from DNA through to cells through to whole populations. We spent hours discussing the strange correspondences between the seeming chaos in the low-level interactions between cells through to the extraordinary emergent discipline through which biological development typically takes place.

Then, going back to the computer scientists in our group and more widely (like Cyrus Omar who I ran into at Bellairs), it turns out that this fosters some really wild ideas for how the Internet itself could evolve into the future. We could adopt biological process models within the heart of the end-to-end principle that has driven the Internet architecture for decades!

Under the hood with Apple's new Containerization framework / Jun 2025

Apple made a notable announcement at WWDC 2025 that they've got a new containerisation framework in the new Tahoe beta. This took me right back to the early Docker for Mac days in 2016 when we announced the first mainstream use of the hypervisor framework, so I couldn't resist taking a quick peek under the hood.

There were two separate things announced: a Containerization framework and also a container CLI tool that aims to be an OCI compliant tool to manipulate and execute container images. The former is a general-purpose framework that could be used by Docker, but it wasn't clear to me where the new CLI tool fits in among the existing layers of runc, containerd and of course Docker itself. The only way to find out is to take the new release for a spin, since Apple open-sourced everything (well done!).

Visiting National Geographic HQ and the Urban Exploration Project / Jun 2025

I stayed on for a few extra days in Washington DC after the biodiversity extravaganza to attend a workshop at the legendary National Geographic Basecamp. While I've been to several NatGeo Explorers meetups in California, I've never had the chance to visit their HQ. The purpose of this was to attend a workshop organised by Christian Rutz from St Andrews about the "Urban Exploration Project":

[The UEP is a...] global-scale, community-driven initiative will collaboratively track animals across gradients of urbanization worldwide, to produce a holistic understanding of animal behaviour in human-modified landscapes that can, in turn, be used to develop evidence-based approaches to achieving sustainable human-wildlife coexistence. -- Christian Rutz's homepage

This immediately grabbed my interest, since it's a very different angle of biodiversity measurements to my usual. I've so far been mainly involved in efforts that use remote sensing or expert range maps, but the UEP program is more concerned with the dynamic movements of species. Wildlife movements are extremely relevant to conservation efforts since there is a large tension between human/wildlife coexistence in areas where both communities are under spatial pressure. Tom Ratsakatika for example did his AI4ER project on the tensions in the Romanian Carpathian mountains, and elephant/human conflicts and tiger/human conflicts are also well known.

[…1155 words]

What I learnt at the National Academy of Sciences US-UK Forum on Biodiversity / Jun 2025

I spent a couple of days at the National Academy of Sciences in the USA at the invitation of the Royal Society, who held a forum on "Measuring Biodiversity for Addressing the Global Crisis". It was a packed program for those working in evidence-driven conservation:

Assessing biodiversity is fundamental to understanding the distribution of biodiversity, the changes that are occurring and, crucially, the effectiveness of actions to address the ongoing biodiversity crisis. Such assessments face multiple challenges, not least the great complexity of natural systems, but also a lack of standardized approaches to measurement, a plethora of measurement technologies with their own strengths and weaknesses, and different data needs depending on the purpose for which the information is being gathered.

Other sectors have faced similar challenges, and the forum will look to learn from these precedents with a view to building momentum toward standardized methods for using environmental monitoring technologies, including new technologies, for particular purposes. -- NAS/Royal Society US-UK Scientific Forum on Measuring Biodiversity

I was honoured to talk about our work on using AI to "connect the dots" between disparate data like the academic literature and remote observations at scale. But before that, here's some of the bigger picture stuff I learnt...

[…2343 words]

ZFS replication strategies with encryption / Jun 2025

This is an idea proposed as a good starter project, and is currently being worked on by Becky Terefe-Zenebe. It is co-supervised with Mark Elvers.

We are using ZFS in much of our Planetary Computing infrastructure due to its ease of remote replication. Therefore, its performance characteristics when used as a local filesystem are particularly interesting. Some questions that we need to answer about our uses of ZFS are:

- We intend to have encrypted remote backups in several locations, but only a few of those hosts should have keys and the rest should use raw ZFS send streams.

- Does encryption add a significant overhead when used locally?

- Is replication faster if the source and target are both encrypted vs a raw send?

- We would typically have a snapshot schedule, such as hourly snapshots with a retention of 48 hours, daily snapshots with a retention of 14 days, and weekly snapshots with a retention of 8 weeks. As these snapshots build up over time, is there a performance degradation?

- Should we minimise the number of snapshots held locally, as this would allow faster purging of deleted files?

- How does ZFS send/receive compare to a peer-to-peer backup solution like Borg Backup, given that Borg allows a free choice of source and target backup file system and supports encryption?

- ZFS should have the advantage of knowing which blocks have changed between two backups, but potentially, this adds an overhead to day-to-day use.

- On the other hand, ZFS replicas can be brought online much more quickly, whereas Borg backup files need to be reconstructed into a usable filesystem.
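
To make the snapshot schedule above concrete, here is a minimal sketch of the retention policy (hourly kept for 48 hours, daily for 14 days, weekly for 8 weeks). It is only an illustration and not the project's tooling: the tier names, the snapshot naming scheme and the `(name, tier, created)` tuple layout are all assumptions, and it does not call ZFS at all. It just works out how many snapshots the schedule holds at steady state and which ones are due for pruning.

```python
from datetime import datetime, timedelta

# Schedule taken from the text: (interval between snapshots, retention window).
SCHEDULE = {
    "hourly": (timedelta(hours=1), timedelta(hours=48)),
    "daily": (timedelta(days=1), timedelta(days=14)),
    "weekly": (timedelta(weeks=1), timedelta(weeks=8)),
}


def steady_state_count(schedule=SCHEDULE):
    """Approximate number of snapshots held once every retention window is full."""
    return sum(retention // interval for interval, retention in schedule.values())


def expired(snapshots, now, schedule=SCHEDULE):
    """Return the names of snapshots older than their tier's retention window.

    `snapshots` is a list of (name, tier, created) tuples. The names used
    below (e.g. "tank/data@hourly-old") are hypothetical.
    """
    return [
        name
        for name, tier, created in snapshots
        if now - created > schedule[tier][1]
    ]


if __name__ == "__main__":
    now = datetime(2025, 6, 30, 12, 0)
    snaps = [
        ("tank/data@hourly-old", "hourly", now - timedelta(hours=49)),
        ("tank/data@hourly-new", "hourly", now - timedelta(hours=47)),
        ("tank/data@daily-old", "daily", now - timedelta(days=15)),
        ("tank/data@weekly-new", "weekly", now - timedelta(weeks=7)),
    ]
    print(steady_state_count())  # snapshots held per dataset at steady state
    print(expired(snaps, now))   # candidates for destruction
```

Under these assumptions the schedule settles at about 70 snapshots per dataset (48 + 14 + 8), which gives a fixed upper bound to benchmark against when asking whether accumulated snapshots degrade performance.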

Validating predictions with ranger insights to enhance anti-poaching patrol strategies in protected areas / Jun 2025

This is an idea proposed as a good starter project, and is currently being worked on by Hannah McLoone. It is co-supervised with Charles Emogor and Rob Fletcher.

Biodiversity is declining at an unprecedented rate, underscoring the critical role of protected areas (PAs) in conserving threatened species and ecosystems. Yet, many of these are increasingly dismissed as "paper parks" due to poor management. Park rangers play a vital role in PA effectiveness by detecting and potentially deterring illegal activities. However, limited funding for PA management has led to low patrol frequency and detection rates, reducing the overall deterrent effect of ranger efforts. This resource scarcity often results in non-systematic patrol strategies, which are sub-optimal given that illegal hunters tend to be selective in where and when they operate.

The situation is poised to become more challenging as countries expand PA coverage under the Kunming-Montreal Global Biodiversity Framework—aiming to increase global PA area from 123 million km2 to 153 million km2 by 2030. Without a substantial boost in enforcement capacity, both existing and newly designated PAs will remain vulnerable. Continued overexploitation of wildlife threatens not only species survival but also ecosystem integrity and the well-being of local communities who rely on wildlife for food and income.

This project aims to combine data from rangers in multiple African protected areas and from hunters around a single protected area (in Nigeria) to improve the deterrence effect of ranger patrols by optimising ranger efforts, and to provide information on the economic impacts of improved ranger patrols on community livelihoods and well-being. We plan to deploy our models to rangers in the field via SMART, which is used in more than 1000 PAs globally to facilitate monitoring and data collection during patrols.

The two main aims are to:

- develop an accessibility layer using long-term ranger-collected data

- validate the results of this layer, as well as those from other models developed, using ranger insights.

TESSERA: Temporal Embeddings of Surface Spectra for Earth Representation and Analysis / Jun 2025

Frank Feng, Sadiq Jaffer, Jovana Knezevic, Silja Sormunen, Robin Young, Madeline Lisaius, Markus Immitzer, James G. C. Ball, Clement Atzberger, David Coomes, Anil Madhavapeddy, Andrew Blake, and Srinivasan Keshav.

Working paper at arXiv.

Solving Package Management via Hypergraph Dependency Resolution / Jun 2025

Mapping urban and rural British hedgehogs / Jun 2025

This is an idea proposed as a good starter project, and is currently being worked on by Gabriel Mahler. It is co-supervised with Silviu Petrovan.

The National Hedgehog Monitoring Programme aims to provide robust population estimates for the beloved hedgehog.

Despite being the nation’s favourite mammal, there's a lot more to learn about hedgehog populations across the country. We do know that, although urban populations are faring better than their rural counterparts, overall hedgehogs are declining across Britain, so much so that they’re now categorised as Vulnerable to extinction. -- NHMP

The People's Trust for Endangered Species has been coordinating the programme. For the purposes of this project, we have access to:

- GPS data from over 100 tagged hedgehogs collected by Lauren Moore during her PhD to build predictive movement models.

- OpenStreetMap data about where hedgehogs probably shouldn't be (e.g. middle of a road) to help with species distribution modelling

- PTES also run the Hedgehog Street program which has the mapped locations of hedgehog highways across the UK to assess how effective they are.

- A new high-res map of the UK's hedgerows and stonewalls from Google DeepMind and Drew Purves.

Our initial efforts in the summer of 2025 will be to put together a high-res map of UK hedgehog habitats, specifically brambles and likely urban habitats. Once that works, the plan is to apply some spatially explicit modelling, still focussing on the UK. This will involve an exciting collaboration with the PTES, who I'm looking forward to meeting!

Low power audio transcription with Whisper / Jun 2025

This is an idea proposed as a good starter project, and is currently being worked on by Dan Kvit. It is co-supervised with Josh Millar.

The rise of batteryless energy-harvesting platforms could enable ultra-low-power, long-term, maintenance-free deployments of sensors.

This project explores the deployment of the OpenAI Whisper audio transcription model onto embedded devices, initially starting with the Raspberry Pi and moving on to smaller devices.
