For the past few years, Sadiq Jaffer and I have been working with our colleagues in Conservation Evidence to do analysis at scale on the academic literature. Getting local access to millions of fulltext papers has not been without drama, but it was made possible thanks to a huge amount of help from our University Library, who helped us navigate our relationships with scientific publishers. We have just published a comment in Nature about the next phase of our research, where we are looking into the impact of AI advances on evidence synthesis.

Our work on literature reviews led us into assessing methods for evidence synthesis (which is crucial to rational policymaking!), and specifically into how recent advances in AI may impact it. The current methods for rigorous systematic literature review are expensive and slow, and authors are already struggling to keep up with the rapidly expanding number of legitimate papers. Adding to this, paper retractions are increasing near-exponentially, and systematic reviews already unknowingly cite retracted papers, with most remaining uncorrected even a year after notification!

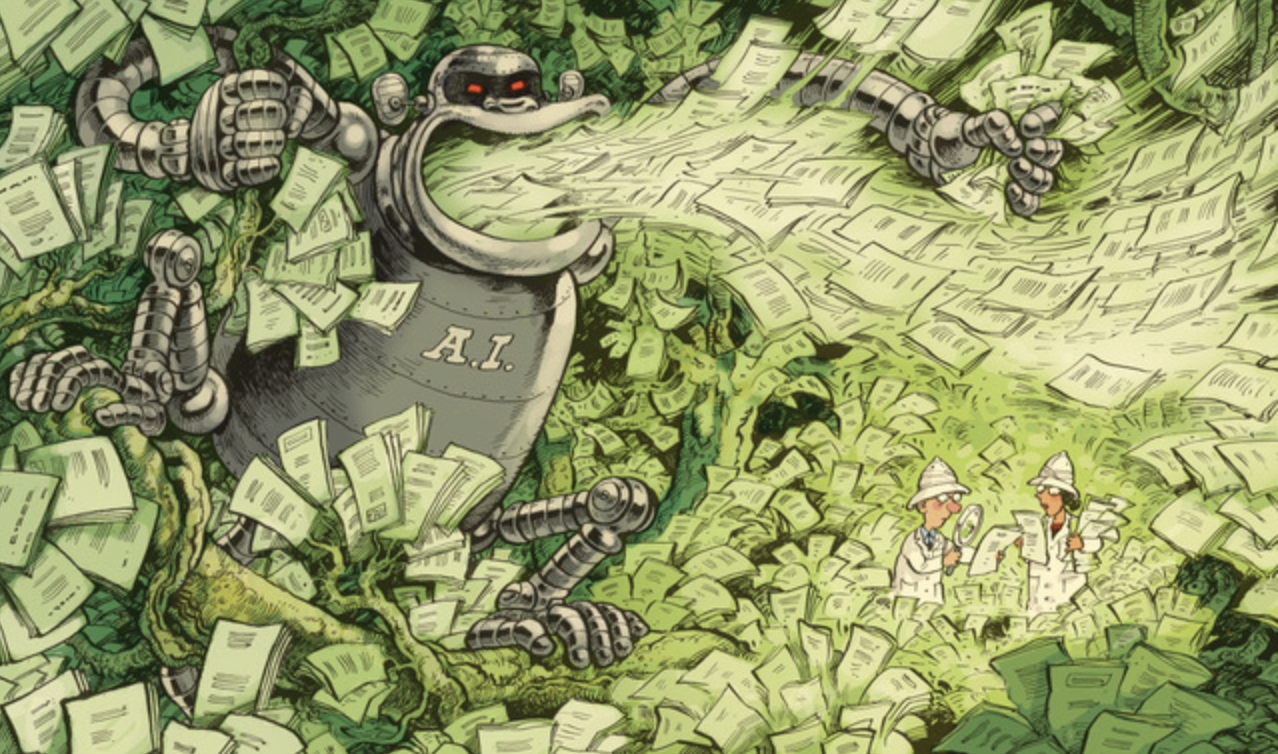

This is all made much more complex as LLMs flood the landscape with convincing fake manuscripts and doctored data, potentially overwhelming our current ability to distinguish fact from fiction. Just this March, the AI Scientist formulated hypotheses, designed and ran experiments, analysed the results, generated the figures and produced a manuscript that passed human peer review for an ICLR workshop! Distinguishing genuine papers from those produced by LLMs isn't just a problem for review authors; it's a threat to the very foundation of scientific knowledge. Meanwhile, Google is taking a different tack with a collaborative AI co-scientist that acts as a multi-agent assistant.

So the landscape is moving really quickly! Our proposal for the future of literature reviews builds on our desire to move towards a more regional, federated network approach. Instead of having giant repositories of knowledge that may be erased unilaterally, we're aiming for a more bilateral network of "living evidence databases". Every government, especially those in the Global South, should have the ability to build their own "national data libraries" which represent the body of digital data relevant to their own regional needs.

This system of living evidence databases can be updated incrementally and dynamically, and AI assistance can be used as long as humans remain in the loop. Such a system can continuously gather, screen, and index literature, automatically removing compromised studies and recalculating results. We're working on this on multiple fronts this year, ranging from the computer science needed to figure out the distributed nitty-gritty [1], to working with the GEOBON folk on global biodiversity data management, and continuing to drive the core living evidence database (LED) design at Conservation Evidence. It feels like a
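To make "remove compromised studies and recalculate results" a little more concrete, here is a minimal sketch of the idea, not our actual design. It assumes a simple fixed-effect, inverse-variance meta-analysis, and the `Study` and `LivingEvidenceDatabase` names and the example DOIs are all hypothetical. The point is only that the pooled estimate is recomputed whenever a study is added or flagged as retracted, so a retraction propagates immediately instead of lingering for a year.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Study:
    doi: str          # identifier (hypothetical DOIs in the usage below)
    effect: float     # effect size, e.g. a log odds ratio
    variance: float   # sampling variance of that effect size


def pooled_effect(studies: list) -> Tuple[float, float]:
    """Fixed-effect inverse-variance pooled estimate and its standard error."""
    weights = [1.0 / s.variance for s in studies]
    total = sum(weights)
    estimate = sum(w * s.effect for w, s in zip(weights, studies)) / total
    return estimate, math.sqrt(1.0 / total)


class LivingEvidenceDatabase:
    """Hypothetical toy store that re-pools results on every change."""

    def __init__(self) -> None:
        self._studies: dict = {}
        self._retracted: set = set()

    def add(self, study: Study) -> Optional[Tuple[float, float]]:
        # Studies already known to be retracted are never (re)admitted.
        if study.doi not in self._retracted:
            self._studies[study.doi] = study
        return self.result()

    def retract(self, doi: str) -> Optional[Tuple[float, float]]:
        # In practice a human would confirm the flag before this runs.
        self._retracted.add(doi)
        self._studies.pop(doi, None)
        return self.result()

    def result(self) -> Optional[Tuple[float, float]]:
        if not self._studies:
            return None
        return pooled_effect(list(self._studies.values()))


db = LivingEvidenceDatabase()
db.add(Study("10.1000/a", effect=0.2, variance=0.04))
print(db.add(Study("10.1000/b", effect=0.5, variance=0.01)))  # pooled over both
print(db.retract("10.1000/b"))  # recalculated without the retracted study
```

A real living evidence database would need random-effects models, provenance, and human review of every flag; the fixed-effect model here is just the simplest pooling method that shows the recalculation step.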

Read our Nature Comment piece to learn more about how we think we can safeguard evidence synthesis against the rising tide of "AI-poisoned literature" and ensure the continued integrity of scientific discovery. As a random bit of trivia, the incredibly cool artwork in the piece was drawn by the legendary David Parkins, who has also drawn for the Beano, including Dennis the Menace!

Read more in "Will AI speed up literature reviews or derail them entirely?".

---

[1] My instinct is that we'll end up with something ATProto-based, as it's so convenient for distributed system authentication.
