Over the past year, Sadiq Jaffer and I have been getting an object lesson in how the modern Internet handles researcher access to data, as we've been downloading tens of millions of research papers towards our Conservation Evidence project. This is legally possible via our institutional subscriptions that give us license to full texts, and thanks to the incredibly helpful head of electronic services at the University Library, who wields encyclopedic knowledge of each of our agreements with the hundreds of publishers out there. My thoughts on this then segued into recent conversations I've been having about the emerging National Data Library and also with the UK Wildlife Trusts...

1 The difficulty of access-controlled bulk data downloads

In late 2023, once we got past the legal aspects of downloading closed access papers[1], it was still remarkably difficult to gain access to the paper datasets themselves. For instance, a select few hurdles include:

- Cloudflare got in the way all the time, preventing batch downloading by throwing CAPTCHAs down the wire. Each publisher has to individually allowlist our one hardworking IP, and it can take months for them to do this and it's never quite clear when we have been allowed. So I hacked up dodgy stealth downloaders even though we're meant to have access via the publisher.

- Many official text mining APIs for publishers such as Elsevier and Springer do not provide PDF access, and only give an XML equivalent which is both inconsistent in its schemas and misses diagrams. Luckily there are great projects like Grobid to normalise some of these with very responsive maintainers.

- There are existing archival indices for the PDFs that point to preprints around the web, but CommonCrawl truncates downloads to their first megabyte, and the archive.org unpaywall crawls are restricted access for licensing reasons. So I built a crawler to get these ourselves (I'm glad I wrote the first cohttp now!)

- Bulk download still involves individual HTTP queries with various rate throttling mechanisms that all vary slightly, making me an expert in different HTTP 429 response headers. There's not much sign of batch query interfaces anywhere, probably because of the difficulty of access checking for each individual result.

- The NIH PMC only have one hard-working rate-throttled FTP server for PDFs, which I've been slowly mirroring using a hand-crafted OCaml FTP client since Nov 2024 (almost done!)

- Meanwhile, because this is happening through allowlisting of specific IPs, I then got my Pembroke office kicked off the Internet due to automated abuse notifications going to the UIS who turn netblocks off before checking (fair enough, it could be malware). But it would have been easier to run these downloads through dust clouds than try to do it properly by registering the addresses involved, eh?
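To make the HTTP 429 point above concrete: the standard `Retry-After` header can carry either a number of seconds or an HTTP date, so a polite bulk downloader has to cope with both. Here is a minimal Python sketch of that one piece, not the downloader we actually run. The function name `retry_after_seconds` and the 60-second fallback are assumptions for illustration, and publisher-specific headers are deliberately left out.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_after_seconds(value: Optional[str],
                        now: Optional[datetime] = None,
                        default: float = 60.0) -> float:
    """Turn a Retry-After header value into a number of seconds to wait.

    `default` is a hypothetical fallback used when the header is missing
    or unparseable; pick whatever suits the publisher in question.
    """
    if value is None:
        return default
    value = value.strip()
    # Form 1: delay in whole seconds, e.g. "120".
    if value.isdigit():
        return float(value)
    # Form 2: an HTTP date, e.g. "Wed, 21 Oct 2015 07:28:00 GMT".
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return default
    if when.tzinfo is None:
        # Treat a date without a usable zone as UTC.
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    # Never return a negative wait if the date is already in the past.
    return max(0.0, (when - now).total_seconds())
```

A download loop would sleep for the returned number of seconds whenever it sees a 429 before retrying the same request, ideally with an upper cap so that a misbehaving server can't stall the whole crawl.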

The situation is better for open access downloads, where projects such as Core offer easier bulk access and large metadata databases like OpenAlex use 'downloader pays' S3 buckets. And in other domains like satellite data, there is still a lot of complexity in obtaining the data, but programming wrappers make implementing the (often terabyte-level) downloads much more palatable. For our recent LIFE biodiversity maps, we also make them available on services like Zenodo as they are open.

The lesson I took away from this is that it's really difficult to deal with large sensitive datasets where selective access control is required, and also that sort of data is rarely mirrored on the open web for obvious reasons. But in the current climate, it's utterly vital that we move to protect human health or biodiversity data gathered over decades that is irreplaceable once lost. And beyond data loss, if the data is present but not accessible, then what's the point in gathering it in the first place? It's also really important not to blame the existing publishers of these datasets, who are getting overwhelmed by AI bots making huge numbers of requests to their infrastructure. So I'm getting energised by the idea of a cooperative solution among all the stakeholders involved.

2 Enter the National Data Library

You can imagine my excitement late last year when I got a call from the Royal Society to show up bright and early for a mysterious speech by Rishi Sunak. He duly announced the government's AI summit that mostly focussed on safety, but a report by Onward caught my eye by recommending that "the Government should establish a British Library for Data – a centralised, secure platform to collate high-quality data for scientists and start-ups". I wasn't down for the "centralised" part of this, but I generally liked the library analogy and the curation it implied.

Then in 2025, with Sunak dispatched back to Richmond, Labour took up the reins with their AI Action Plan. While this report started predictably with the usual need for acres of GPU-filled datacenters, it continued on to something much more intriguing via the creation of a "National Data Library":

- Rapidly identify at least 5 high-impact public datasets it will seek to make available [...] Prioritisation should consider the potential economic and social value of the data, as well as public trust, national security, privacy, ethics, and data protection considerations.

- Build public sector data collection infrastructure and finance the creation of new high-value datasets that meet public sector, academia and startup needs.

- Actively incentivise and reward researchers and industry to curate and unlock private datasets. -- AI Opportunities Action Plan, Jan 2025

This takes into account many more of the nuances of getting access to public data. It identifies the need for data curation, and also the costs of curating such private datasets and ensuring correct use. The announcement spurred on a number of excellent thoughts from around the UK web about the implications, particularly from Gavin Freeguard who wrote about how we should think about an NDL. Gavin identified one particularly difficult element of exposing private data:

[...] analogy with the National Data Library suggests that there might be some materials available to everyone, and some restricted to specialist researchers. There may be different access models for more sensitive material. There may be better and worse options — bringing together all the data in one place for accredited researchers to access [...] would be a logistical and security nightmare [...] may be possible to keep the data where it already is, but provide researchers with the ability to access different systems. -- Gavin Freeguard

Others also identified that the centralised library analogy only goes so far, and that we should focus on building trustworthy data services instead and on the real lifecycle of the data usage:

[...] this means that the latest data is already there in the "library" [...] researchers don't first need to work with the data owners to create it [...] bodies of knowledge around using these complex datasets can be built up over time.

Researchers can share code and derived data concepts, so the researchers that come after can iterate, refine, and build on what has gone before. None of this was possible with the previous "create and destroy" model of accessing these types of datasets, which was hugely inefficient -- Administrative Data Research UK

Gosh, this network effect sounds an awful lot like what I experienced as a Docker maintainer, which had its incredible popularity fuelled by tapping into users building and sharing their own software packaging rather than depending on third parties to do it for them. If we could unlock the power of crowds here but go one step further and enforce privacy constraints on the underlying data and code, then the technical solution could be both usable and secure. I'm still not quite sure what that balance of UI would look like, but we're working on it, spearheaded by Patrick Ferris, Michael Dales and Ryan Gibb's research areas.

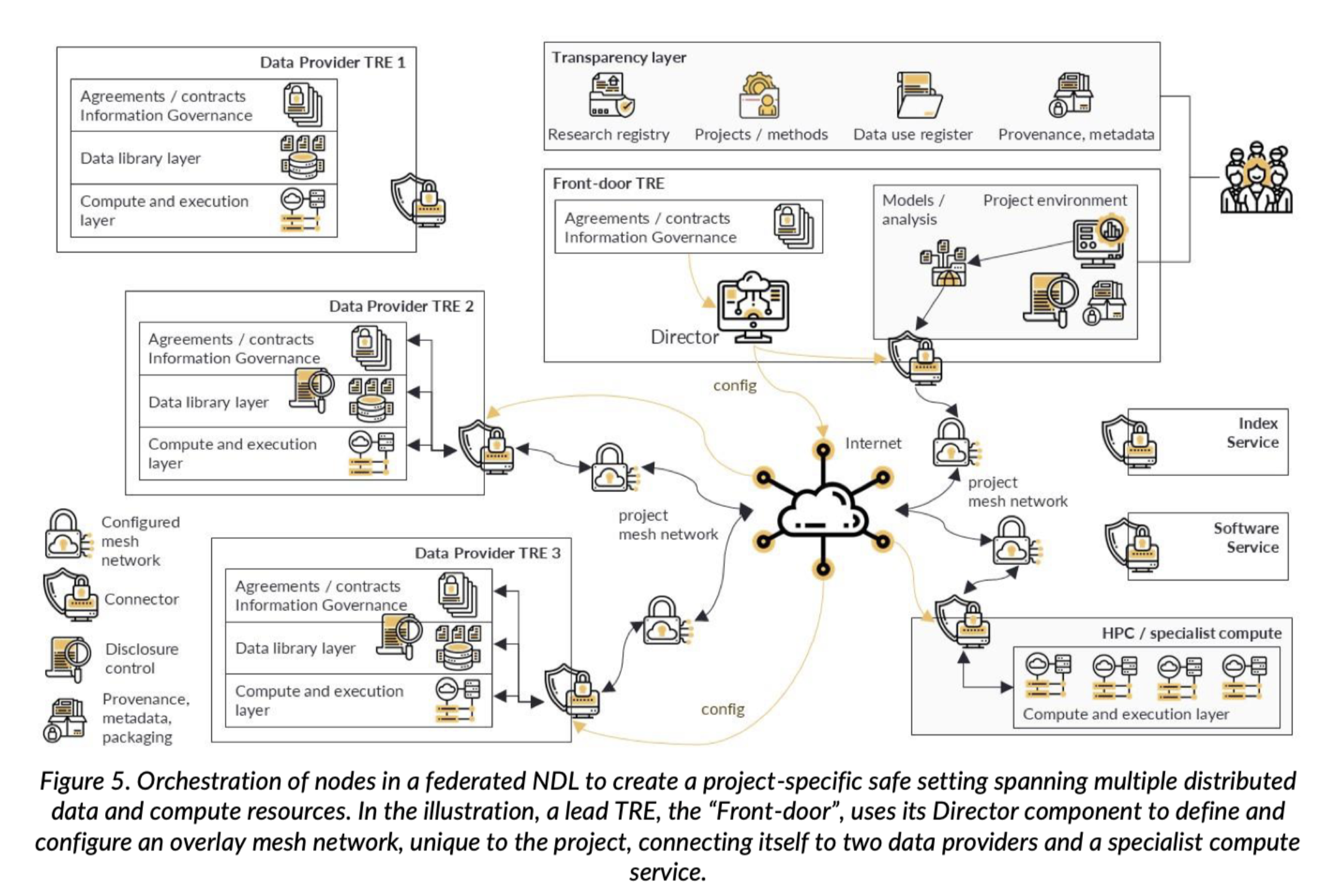

The Wellcome and ESRC have also put together a series of whitepapers about the challenges and potential approaches behind the NDL (via Nick McKeown). I'm still going through them in detail, but the modular approach paper makes sensible observations about not trying to build one enormous national database and to not outsource it all to one organisation to build. Instead, they espouse a federated architectural approach.

Since their primary (but not only) usecase focuses on health data, there is an emphasis on moving the computation and data around rather than pooling it:

The project's overlay mesh network dynamically and securely connects all the required resources. The mesh network creates a transient, project-specific, secure network boundary such that all the project’s components are within one overarching safe setting -- A federated architecture for a National Data Library

This isn't a million miles away from how we set up overlay networks on cloud infrastructure, but with the added twist of putting in more policy enforcement upfront.

- On the programming languages side, we're seeing exciting progress on formalising legal systems which encourages pair programming with lawyers to capture the nuances of policy accurately (and pronounced 'awesome' by the French government).

- At a systems level, Malte Schwarzkopf recently published Sesame which provides end-to-end privacy sandboxing guarantees, and there is classic work on DIFC that we've been using more recently in secure enclave programming.

- From a machine learning perspective, my colleague Nic Lane's work on federated learning via Flower seems to be everywhere right now with its own summit coming up.

However, it's not all plain sailing, as there is also mega-controversy ongoing with the UK government's surprising demands for an encryption backdoor into iCloud, leading to even more of a geopolitical tangle with the US. Irrespective of what happens with this particular case, it's clear that any end-to-end encryption in these federated systems will need to deal with the reality that jurisdictions will have different lawful decryption needs, so end-to-end encryption may be at an end for initiatives like the NDL. Add to this the flagrant disregard for licensing in current pretrained language models, along with the movement to revise copyright laws to legislate around this, and it's clear that technology will need to be fluid in adapting to matters of provenance tracking as well.

There's definitely a rich set of academic literature in this space, combined with interesting constraints, and so I'll pull this together into an annotated bibtex soon!

3 Who are some users of such a service?

To get some more inspiration on a technical solution, I've been looking to users of such an infrastructure to understand what easy-to-use interfaces might look like.

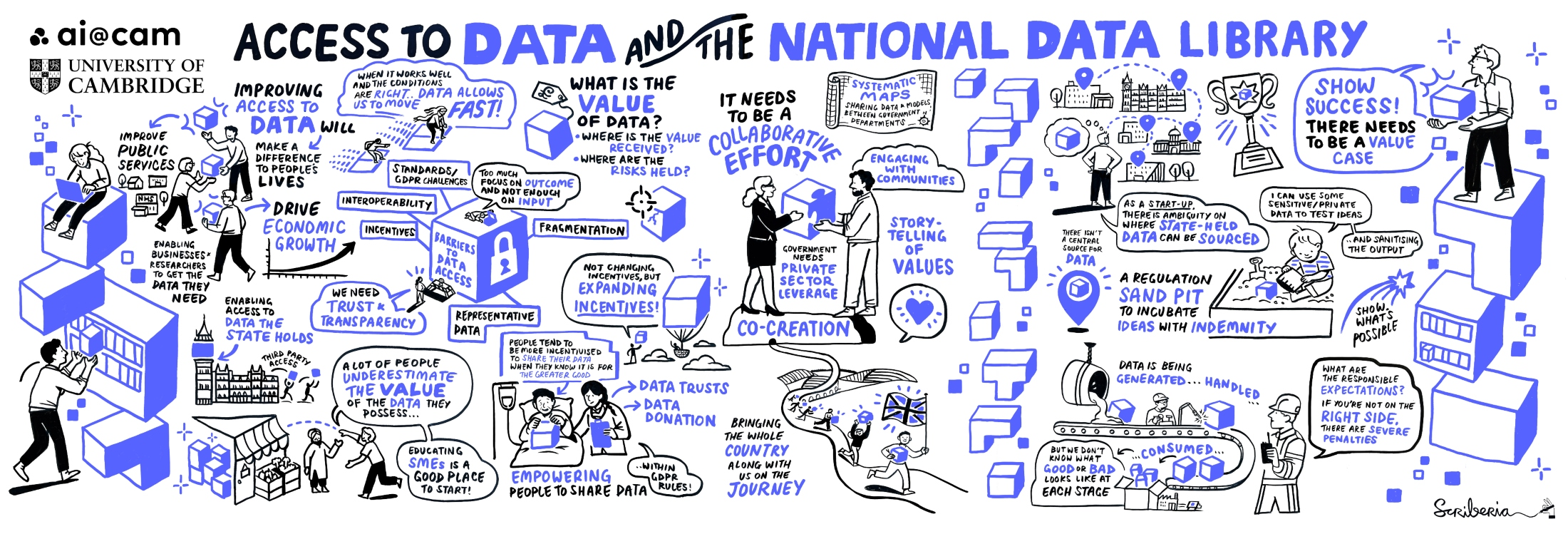

My colleague Neil Lawrence over at AI@Cam co-led a recent report into case studies for the NDL which is very much worth a read. From a conservation perspective, Sadiq Jaffer and Alec Christie both gave input about the importance of having such infrastructure for evidence-driven land use.

What would be helpful, according to Dr Jaffer, is more standardisation between publishers for Open Access material under permissive licences. [...] having a coherent archive for OA materials that are licensed in such a way that they can be used for data mining without any technical hurdles would be the ideal scenario for this kind of research, as well as for a National Data Library -- Access to Data for Research, AI@CAM

Another very different group I talked to back in 2023, via Rosalind Goodfellow as part of her CSaP fellowship, was the Geospatial Commission, who began work on a National Underground Asset Register. The NUAR was initially restricted to "safe dig" usecases and not exposed more widely for security and other concerns. In 2024, they subsequently reported great interest in expanded usecases and are doing a discovery project on how to expose this information via APIs. This seems like an ideal usecase for some of the access control needs discussed above, as it's not only a lot of data (being geospatial) but also updated quite frequently and not necessarily something to make entirely public (although satellite pipeline monitoring is perhaps obsoleting this need).

And a month ago, Sam Reynolds, David Coomes, Emily Shuckburgh and I got invited by Craig Bennett, who had read our horizon scan for AI and conservation paper, to a remarkable dinner with the assembled leaders of all 46 of the UK's wildlife trusts. They are a collective of independent charities who together maintain wildlife areas across the UK, with most people living near one of their 2300+ parks (more than there are UK McDonald's branches!). Over the course of dinner, we heard from every single one of them, with the following gist:

- The 46 nature charities work independently and by consensus, but have recently been building more central coordination around their use of systematic biodiversity data gathering across the nation. They are building a data pool across all of them, which is important as the sensing they do is very biased both spatially and across species (we know lots about birds, less about hedgehogs).

- The charities recognise that they need to take more risks as the pressures on UK nature are currently immense, which means harnessing their data and AI responsibly both to accelerate action and to recruit more participation from a broader cross-section of the UK population, for citizen science input but also just to experience nature.

- Conservation evidence is important to them, and sharing data from one area to replicate that action elsewhere in the UK is essential but difficult to engineer from scratch. There's a real cost to generating this data, and some confusion about appropriate licensing strategies. I gave a somewhat mixed message here reflecting my own uncertainty about the right way forward: on one hand, restricted licensing might prevent their data being hoovered up by the big tech companies who give peanuts back in return, but then again the bad actors in this space would simply ignore the licensing and the good actors probably can't afford it.

The trusts are operating on a fairly shoestring budget already, so they're a great candidate to benefit from a collective, federated National Data Library. In particular, if the NDL can nail down a collective bargaining model for data access to big tech companies, this could finance the collection costs among smaller organisations throughout the four nations. The same holds true for thousands of small organisations around the UK that could benefit from this infrastructure and kickstart more sustainable growth.

I'm organising a get-together on the topic of planetary computing next month with Nate Foster and a number of colleagues from around the world, so stay tuned for more updates in this space in the coming months! Your thoughts, as always, are most welcome.

(Thanks Sam Reynolds for the notes on what we discussed with the Wildlife Trusts)

[1] This largely involved talking to individual publishers and agreeing not to directly train generative AI models on the papers and to keep them private to our own research use. Fairly reasonable stuff.