This is part 1 of 5 of a series of posts[1] about ICFP 2025.

The first outing of PROPL was last year in London, and this time around Dominic Orchard and I invited KC Sivaramakrishnan to be the PC chair and held it at ICFP/SPLASH. The uptake was encouraging, and we got enough submissions to have a proper published proceedings in the ACM Digital Library for the first time! Our proceedings summary is a quick read to give you an idea of the breadth of the papers and talks this year.

The workshop itself had slightly less in-person attendance than last year, but this was due to the heavily multi-track structure of ICFP. We had less total time than last year as the morning slot was taken up by the ICFP keynote (the awesome Satnam Singh), and with six parallel sessions people were filtering in and out of all the events. The online attendance made up for it, with quite a few people tracking the SIGPLAN live stream.

The papers were exactly what I'd dreamed would happen -- a variety of practitioners describing their computational challenges mixed together with solutions. I'll summarise the day's proceedings next!

1 Computational challenges

The first batch of talks I'll cover were about how to specify some core computational models related to climate and biodiversity science.

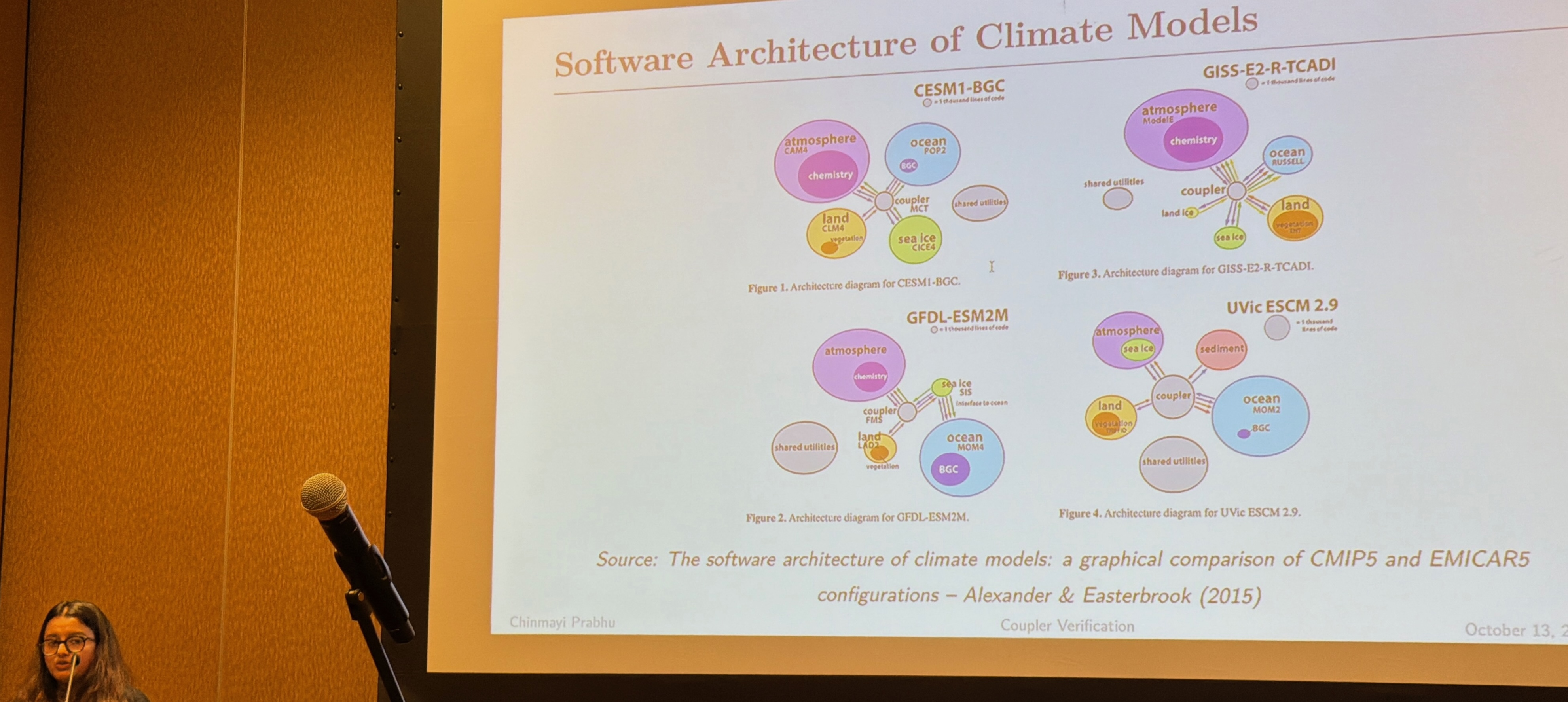

Firstly, climate modelling was up with Chinmayi Prabhu presenting a paper on climate model coupler verification that discussed the difficulty of folding multiple global climate models into combined ones, something that is normally done via (underspecified and somewhat black magic) coupler components. Chinmayi had found some bugs in production coupler code, and described a hybrid verification strategy that used both static and runtime techniques to improve the state of affairs.

The continuous exchange of data through couplers creates the risk of subtle errors propagating across components, potentially distorting scientific conclusions. In this paper, we argue for lightweight formal verification techniques applied at the coupler interface to improve both coupler and model correctness. -- Towards Modelling and Verification of Coupler Behaviour in Climate Models

Then we heard about hydrology modeling using GPUs over in India, where algorithms to trace the path of surface water flows (e.g. flow accumulation, watershed delineation or runoff simulation) are hard to execute for large areas at reasonably fine spatial and temporal resolutions.

Libraries like GDAL that use multi-threaded CPU-based implementations running on a single host may be slow, and distributed infrastructures like Google Earth Engine may not support the kind of computational primitives required by these algorithms.

We have developed a GPU-accelerated framework that re-engineers these four algorithms and is able to process areas as large as river basins of 250,000 km2 on commodity GPU workstations. -- GPU-Accelerated Hydrology Algorithms for On-Prem Computation

Aadi Seth explained not only the basics of flow algorithms, but why it's essential to GPU accelerate them to get a reasonable spatial scale and resolution. They ended up with a set of small parallelizable primitives that are either pixel independent, or a sequence of 'long pixel' operations that need more coordination.

Continuing on in the previous theme of novel computation models, Michael Dales presented "Yirgacheffe: A Declarative Approach to Geospatial Data", a new Python library that allows spatial algorithms to be implemented concisely and supports parallel execution and resource management. (read more...)

Michael used our work on the LIFE biodiversity metric as the motivating usecase for his demo, as we have petabytes of rasters processed using Yirgacheffe. He also set up a nice new homepage for the project and used PROPL to launch it, including adopting a shiny new executable notebook called Marimo which I liked the look of!

2 Geospatial data management

Switching tack from computation to managing large-scale datasets, we had a number of papers discussing how to orchestrate these both for full execution but also in a developer friendly way for local use.

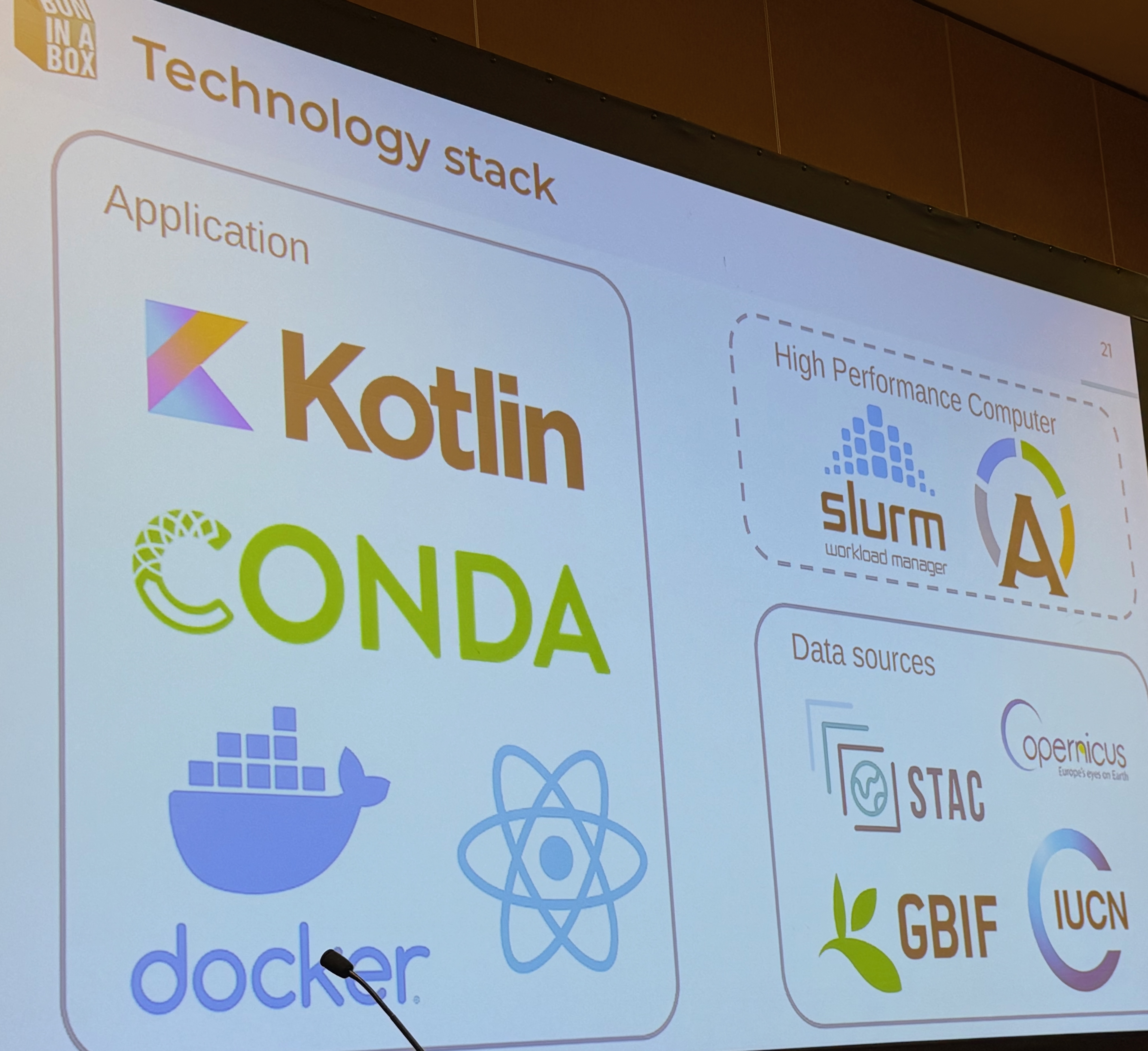

The first extremely ambitious talk was from Jean-Michel Lord who presented "Programming Opportunities for the Global Biodiversity Observation Network". GEOBON is a global network of researchers dedicated to improving the acquisition, coordination and delivery of biodiversity information at a global scale. This PROPL collaboration came up up after I met Andrew Gonzalez at the NAS earlier in the year and learnt about BON-in-a-Box, which uses Docker under the hood!

Jean-Michel described how off-the-shelf software could (almost) be enough to integrate the world's biodiversity dataset pipelines, but needed some help from their maintainers. Most notably, BON-in-a-box facilitates peer review of computation pipelines (as opposed to the science underpinning them), which is the first time I've seen peer review applied to scientific code. This "connecting the dots" across diverse biodiversity datasets is vital towards building a comprehensive model of life on this planet, and computer science is a crucial piece of the puzzle to make sense of all the data. Andrew Gonzalez also just announced that all the major players are getting together later this month at the Living Data 2025 conference in Colombia, further underlying how timely getting involved with BON-in-a-Box is.

Aadi Seth had a second paper on the challenge of building spatio-temporal catalogue dataflow graphs. These catalogues are really important in environmental science to connect the dots in the often "gappy" field datasets. The STAC-D extension proposes dataflow pipelines to help compose these together via YAML specifications that describe dependencies between the (large) datasets involved.

We propose STACD (STAC extension with DAGs), an extension to STAC specifications that incorporates Directed Acyclic Graph (DAG) representations along with defining algorithms and version changes in the workflows. We also provide a reference implementation on Apache Airflow to demonstrate STACD capabilities such as selective recomputation when some datasets or algorithms in a DAG are updated, complete lineage construction for a dataset, and opportunities for improved collaboration and distributed processing that arise with this standard. -- STAC Extension with DAGs

This one really reminded me of the work I did ages ago with Derek G Murray and Malte Schwarzkopf on the CIEL execution engine. Dynamic dataflow graphs seem to come up again and again as the beating heart of coordination languages, and we've made surprisingly little progress in making them more ergonomic. Some combination of Docker and Build Systems à la Carte would go a long way here!

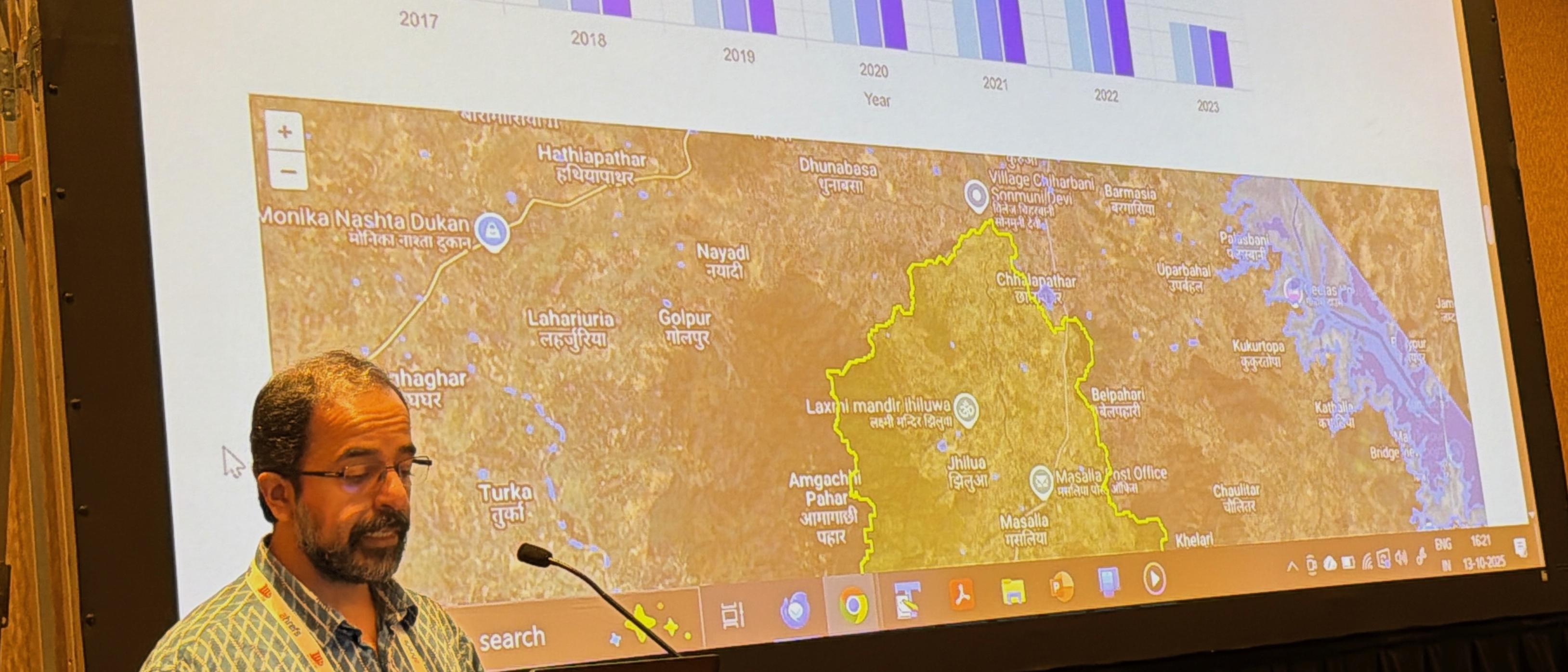

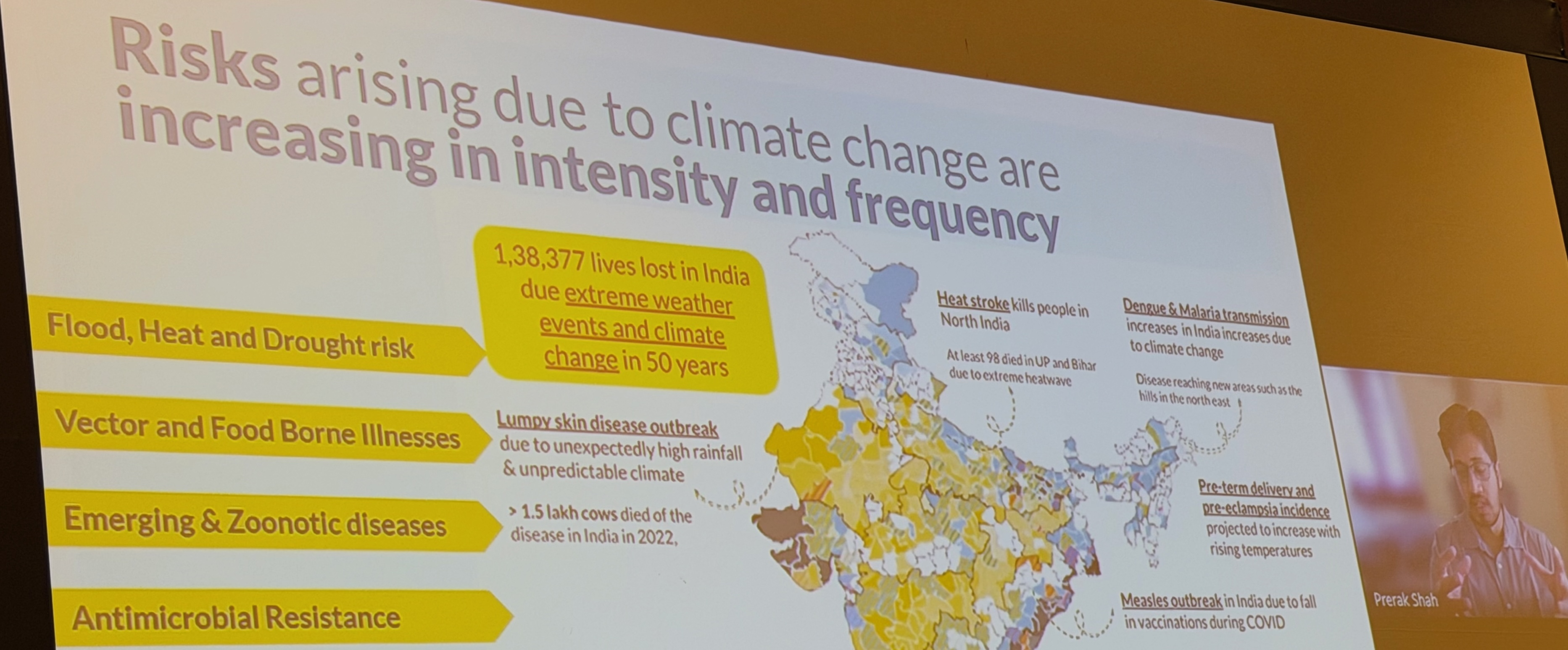

The third talk was from the the Catalysts Foundation from India, and highlighted the importance of data science for promoting health and wellbeing in some of the most vulnerable rural communities, who are at serious risks of increasing intensity due to climate change.

Climate change presents multifaceted public health challenges, from heat-related mortality and vector-borne disease expansion to water contamination and respiratory ailments. The 2022 Lancet Countdown Report demonstrates a host of health effects of climate change ranging from heat-related illness and mortality to the spread of vector-borne and water-borne pathogens, to rising food insecurity as cropping patterns change. Current public health systems lack integrated, real-time data capabilities to identify vulnerable populations and coordinate timely responses to these climate-induced health threats, particularly in resource-constrained settings. -- Precision Action Towards Climate and Health (PATCH)

Prerak talked about the difficulty of combining geospatial data with machine learning inference, and keeping track of the resulting outputs in a systematic way. What I found particularly interesting about their "PATCH" system is that it not only has core computing facilities (a health reporting platform, a spatial counterfactual map for interventions and a communications channel for different stakeholders), but they also extensively partner with local state governments in India (like the India Meteorological Department and the All India Institutes of Medical Sciences.

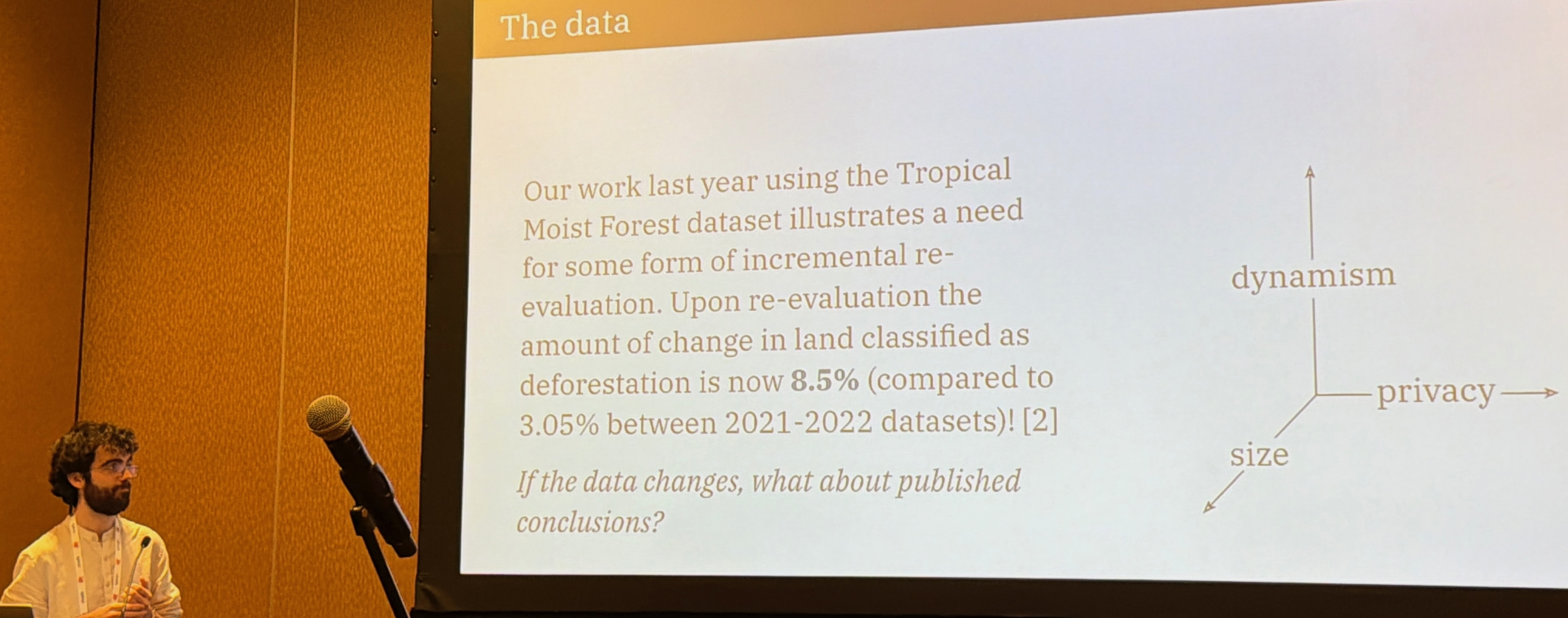

Patrick Ferris formalised many of the above problems with his thoughtful seminar on "what we talk about when we talk about scientific programming". Patrick came up with three axes along which the scientific method of hypothesis falsification needs to operate when dealing with computational data: dynamism, scale and access controls. If you'd like to read more about Patrick's thoughts on this topic, his paper last year on "Uncertainty at scale: how CS hinders climate research" is also well worth a read.

All these talks highlighted the difficulty of managing large and often very messy datasets in practise. So how do we move towards more principled platforms to fix the situation? That's what the next group of talks covered!

3 Towards a giant planetary wiki of code

By far my favourite aspect of PROPL was he sheer ambition on display when it comes to leveraging the network effects around computer technology to accelerate the pace of environmental action. The next batch of papers is all about evolving notebooks to be global scale!

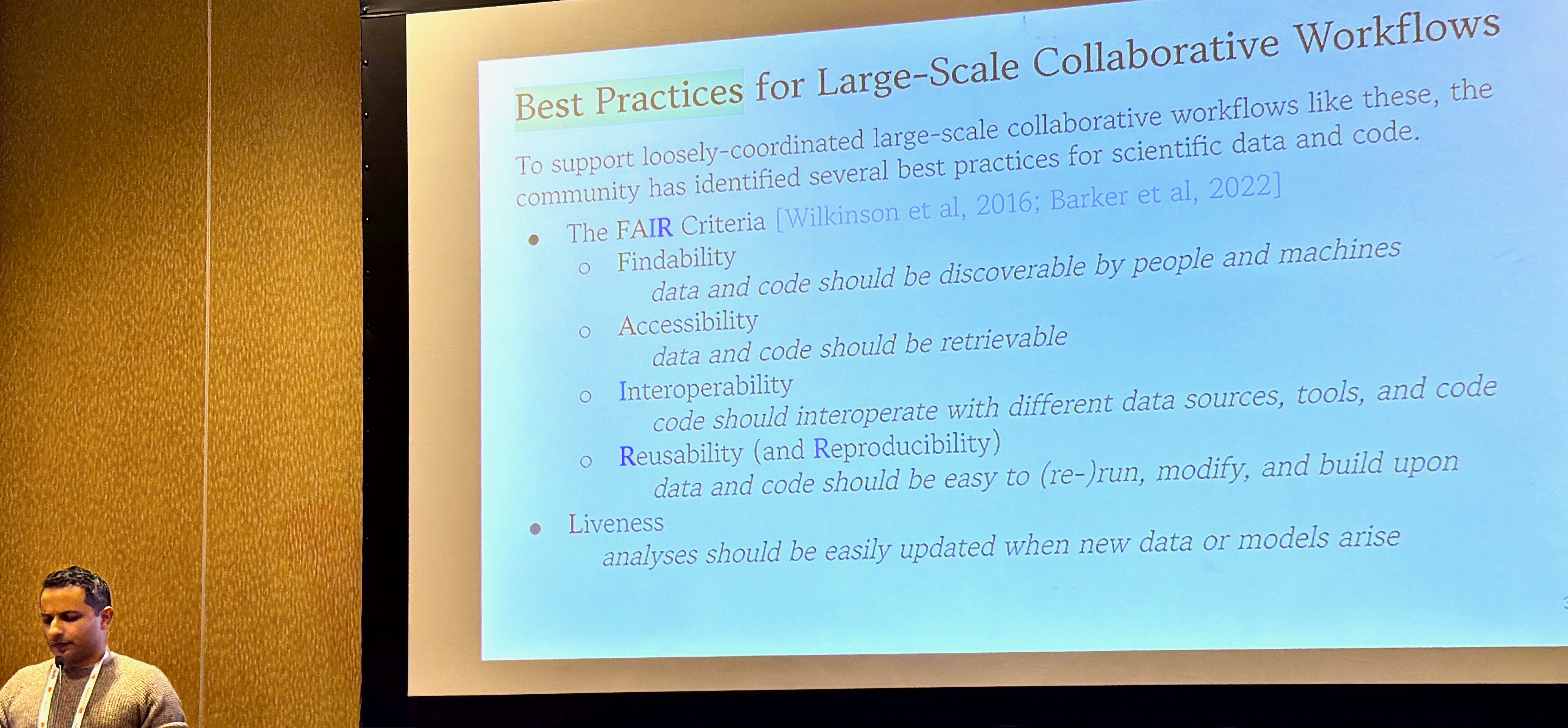

Cyrus Omar led the charge with his call to action for "A FAIR Case for a Live Computational Commons", which he described as a live planetary wiki! What is needed to turn something like Wikipedia into a live, updated computation graph with incremental, real-time visualisation? This would effectively be one giant program running and being updated by tens of thousands of contributors in real time.

This paper proposes Fairground, a computational commons designed as a collaborative notebook system where thousands of scientific artifacts are authored, collected, and maintained together in executable form in a manner that is FAIR, reproducible, and live by default. Unlike existing platforms, Fairground notebooks can reference each other as libraries, forming a single planetary-scale live program executed by a distributed scheduler. -- A FAIR Case for a Live Computational Commons, Omar et al 2025

Many of the answers to how to do this lay in the talks at this week's ICFP/SPLASH: programming languages with clean semantics for incremental compilation, purely functional with effect tracking, and mergeable semantics for managing large scale data structures. Cyrus' own Hazel language is a perfect example of an ergonomic interactive language that has clean, functional semantics while retaining usability.

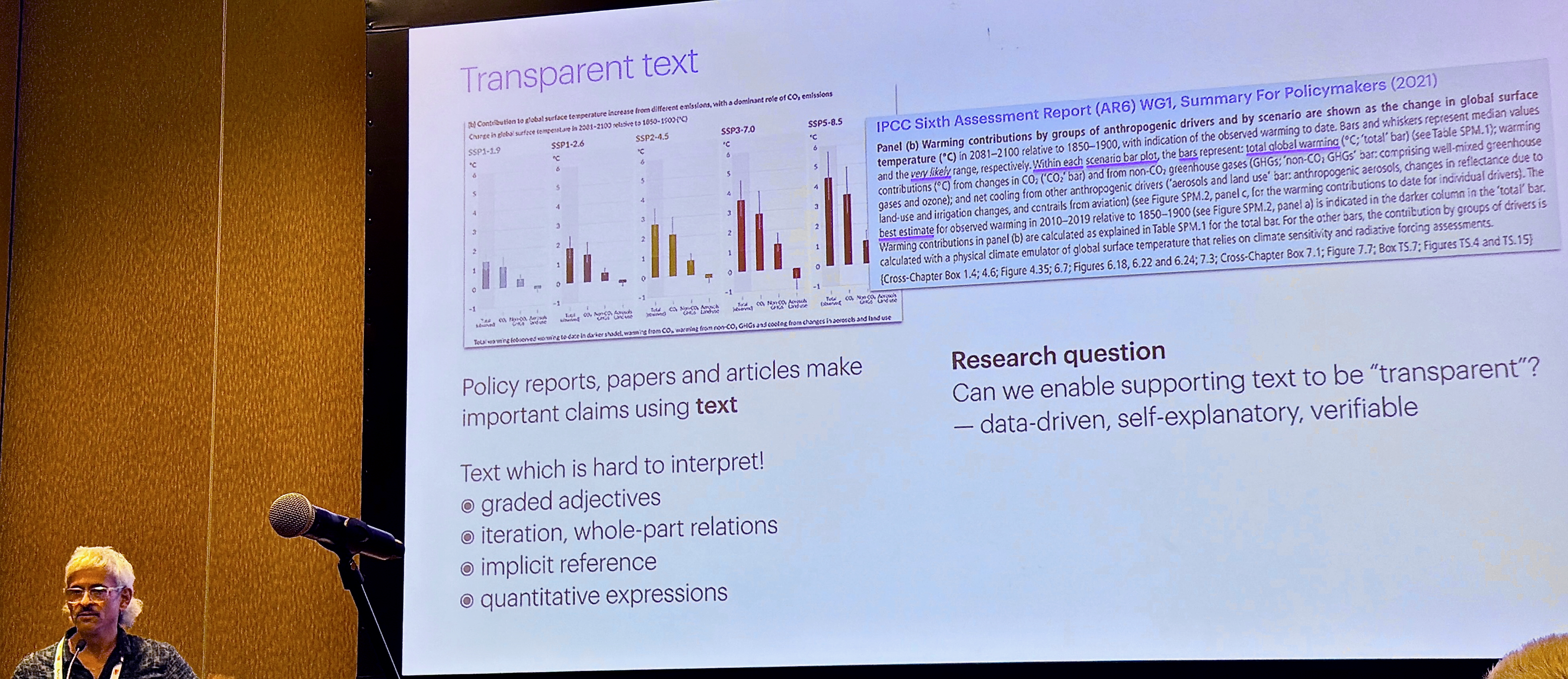

Roly Perera then switched tack and discussed how we might achieve more transparent climate reporting, via a new class of "transparent programming languages" like his own Fluid project.

With traditional print media, the figures, text and other content are disconnected from the underlying data, making them hard to understand, evaluate and trust. Digital media, such as online papers and articles, present an opportunity to make visual artifacts which are connected to data and able to reveal those fine-grained relationships to an interested user. This would enable research outputs, news articles and other data-driven artifacts to be more transparent, self-explanatory and explorable . -- fluid, explorable, self-explanatory research outputs

Roly showed in his talk how the latest advances in Fluid helped to automate providing "drill down" explanations for topics in the energy transition and decarbonisation, adaptation to climate change, or risk mitigation strategies that required policy changes justified by data.

The Fluid website has loads of interactive examples for you to explore, so continue on there if interested in this topic.

Cristian Urlea then discussed the crucial problem of Bridging Disciplinary Gaps in Climate Research, since the point of all these planetary computing systems is to make them accessible to non-computer-science-expert users (the "vernacular programmers").

Current scientific computing practices pose major barriers to entry, particularly for interdisciplinary researchers and those in low and middle-income countries (LMICs). Challenges include steep learning curves, limited access to expert support, and difficulties with legacy or under-documented software. Drawing on real-world experiences, we identify recurring obstacles in the usability, accessibility, and sustainability of scientific software. -- Bridging Disciplinary Gaps in Climate Research, 2025

I greatly enjoyed the framing of this paper of "reimagining scientific programming as a shared public good", a point also made by Roberto Di Cosmo recently in his Nature comment that we must stop treating code like an afterthought, and instead record, share and value it.

4 Onto Programming the Planet!

Once we have all these planetary scale notebooks, what sorts of new programs might we run on them? The last group of talks covered some radically different ideas here, and I was involved with all of them!

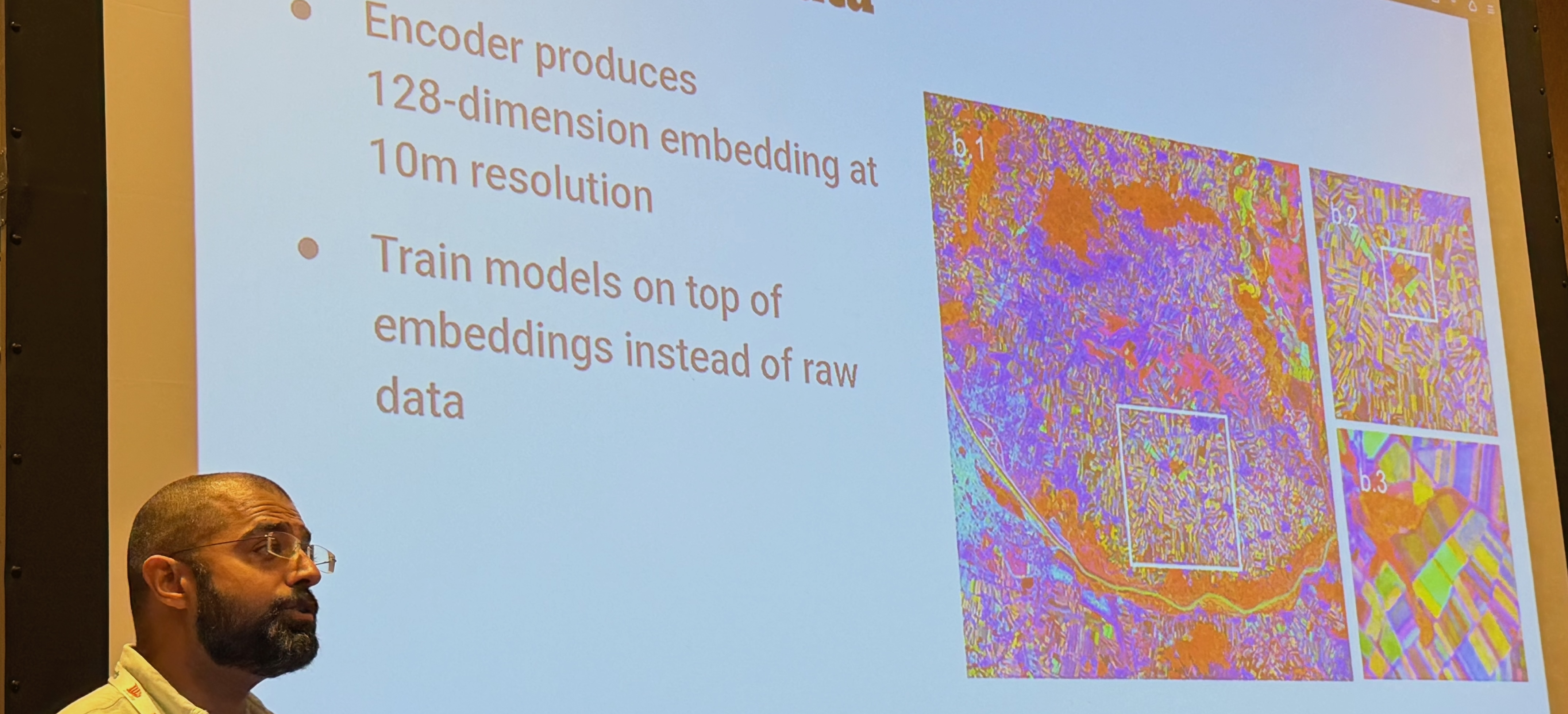

First, Sadiq Jaffer and Robin Young presented our own TESSERA project, which is a new geospatial foundation model for programming with observations of the planet from space. This is pretty scifi stuff!

Remote sensing observations from satellites are critical for scientists to understand how our world is changing in the face of climate change, biodiversity loss, and desertification. However, working directly with this data is difficult. For any given satellite constellation, there are a multitude of processed products, data volume is considerable, and for optical imagery, users must contend with data sparsity due to cloud cover. This complexity creates a significant barrier for domain experts who are not specialists.

Pre-trained, self-supervised foundation models such as TESSERA aim to solve this by offering pre-computed global embeddings. These rich embeddings can be used in-place of raw remote sensing data in a powerful “embedding-as-data” approach. For example, a single 128-dimensional TESSERA embedding for a 10-meter point on Earth can substitute for an entire year of optical and radar imagery, representing its temporal and spectral characteristics. While this could democratise access to advanced remote sensing-derived analytics, it also creates a new programming challenge: a lack of tools designed for this new approach.

-- Building a Usable Library for Planetary-Scale Embeddings, Jaffer 2025

Sadiq did a live demo by demonstrating the Geotessera Python library I've been hacking on. He went one step further and coded up a simple classifier that found most of the solar farms on the planet, with a demo that ran in minutes on his laptop!

After this, Andres Zuñiga-Gonzalez showed how he's been using airborne data to uncover socioeconomic stratification of urban nature in England, all via a pipeline that combines all sorts of geospatial algorithms to distill metrics about modern urban life.

Using high-resolution LiDAR (Vegetation Object Model), Sentinel 2 imagery, and open geospatial datasets for over 28 million buildings across England, we integrate raster, vector, and socioeconomic data within a scalable computational framework. Tree segmentation was performed using adaptive local-maximum filtering, canopy cover estimated at 1 m resolution, and park accessibility derived from network-based walking distances.

Inequality in access to nature was quantified via Gini coefficients and modelled with spatial error regressions against socioeconomic deprivation. Our results reveal that while most urban areas meet the 3-tree proximity rule, fewer than 3% achieve 30% canopy cover, and only a minority satisfy all three components simultaneously. -- Airborne assessment uncovers socioeconomic stratification of urban nature in England

You can read more about this in his preprint that explains in detail the programming pipeline (including route finding across tree canopies across the whole of England), and the bigger picture behind 3-30-300 mapping.

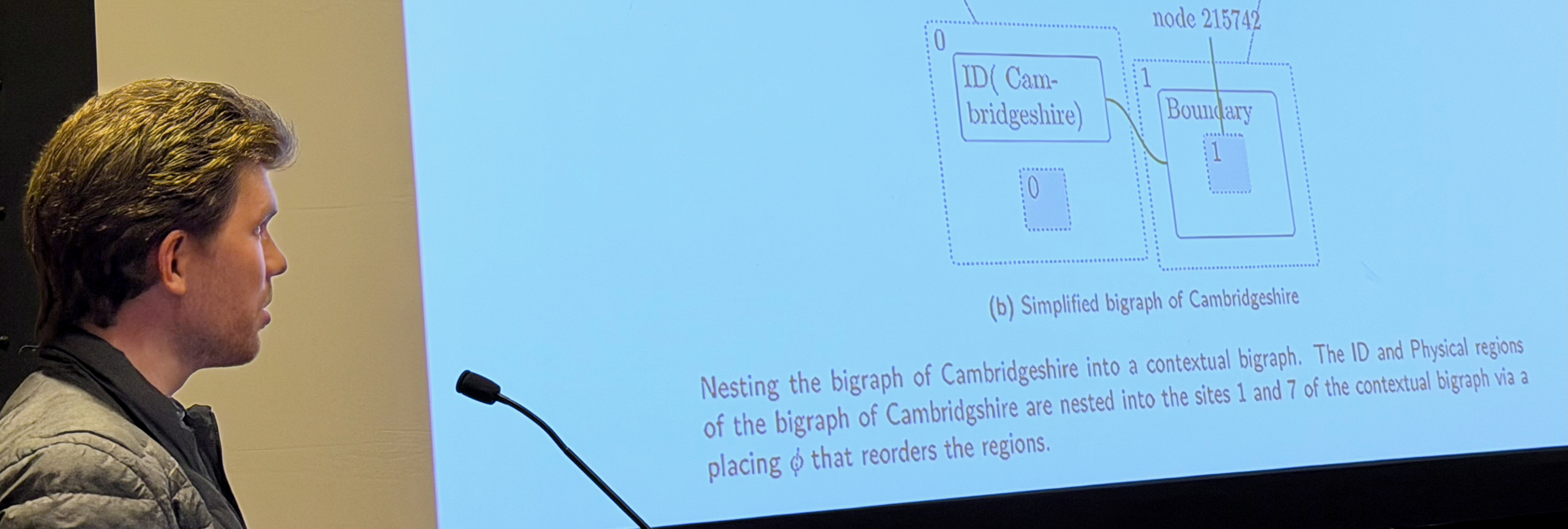

And last but definitely not least, Ryan Gibb presented possibly the most topical talk for a programming language venue: repurposing the classic Robin Milner bigraphs for environmental goodness! Ryan (and Josh Millar) observe that existing distributed computing models poorly capture spatial structure, hindering dynamic collaboration and access control. They argue that that space must be treated as a first-class concept in programming models, and use this to better coordinate the complex sensor systems we need for environmental science.

It was also wonderful to see Roy Ang attend the workshop, as it was his Part II project which imported OpenStreetMap into BigraphER that sparked off the idea in the first place. You may also be interested in our preprint "An Architecture for Spatial Networking" that explores this concept of spatial networking in more detail.

Reflections on the 2nd PROPL

As always, the corridor track of discussions after the conference was the most valuable part of attending PROPL. We had the opportunity to put up some posters during the main banquet session, and it was busy!

One thing that leapt out at me from the discussions was the need for a hosted service with the ergonomics of Docker, the interactive flexibility of Jupyter, the peer community of Wikipedia, and the semantic cleanliness of Hazel. This is overwhelmingly difficult to do in a topdown manner, but we do have now a growing community of practitioners and computer scientists who share a vision of making this happen. I had long conversations with most of the attendees of PROPL about how we might make this a reality, and my plan is spend a good chunk of my sabbatical time this year hacking on this.

The other really energizing thing was seeing all the side hacking going on. I spotted Patrick Ferris porting all the Python Geotessera code over to OCaml in a skunkworks effect of which I heartily approve. I looked over the Hazel UI with Cyrus Omar to figure out what a planetary view might look like. Aadi Seth figured out spatial datastructures for the CoRE stack with Ryan Gibb and Andres Zuñiga-Gonzalez while we stood around in the blazing Singapore heat. Michael Coblenz lead a session at HATRA on user centric approaches to types and reasoning assistants.

All of these strands could weave together powerfully into groundup systems that solve the problems we've been defining at PROPL! I'm feeling energized and tired, and look forward to continuing the discussions started in London (2024) and Singapore (2025) into real systems in 2026!

-

See also in the ICFP25 series: chairing PROPL25, the OxCaml tutorial, multicore at Jane Street and Docker, post-POSIX IO and what I learnt.

↩︎︎