I've just released geotessera 0.7 to pypi for our TESSERA geospatial foundation model, following on from the first release earlier this year. To recap:

TESSERA is a foundation model for Earth observation that processes Sentinel-1 and Sentinel-2 satellite data to generate representation (embedding) maps. It compresses a full year of Sentinel-1 and Sentinel-2 data and learns useful temporal-spectral features. -- Temporal Embeddings of Surface Spectra for Earth Representation and Analysis

With this new release, there's convenient documentation to show how you can freely access 150TB+ of CC-BY-licensed embeddings of the earth's surface. We've been getting a growing influx of requests for diverse regions of the world, and so our focus for the next few months is attaining complete coverage of our v1 model on the whole planet.

1 Generating and storing the world's embeddings

A new coverage map visualiser tracks our progress towards complete global embeddings; to complete the set, we have a giant inferencing task ahead of us. AMD kindly gave us access to an MI300X cluster to train the TESSERA models on, and now we are using our in-house Dawn cluster along with machines donated by Tarides and Jane Street to run every tile on earth through our pipeline. The output of this is a very large set of 128-dimensional vectors in numpy format, which we then spatially sort and make available for download. You can browse the tiles on our HTTP server, where you can see one such tile and associated[1] checksums.

All pretty simple stuff -- it's just some floating point numbers! -- except for the sheer number of them. Each tile of vectors is around 160MB in size, so we need around 250TB of storage per year, and we want them from 2017 through to 2024. By the time we're done inferring all these, 2026 will be upon us and we'll need to generate another 250TB in short order for the 2025 seasons. Ultimately, to keep up we need to build a scalable, open and federated pipeline, which the 0.7 geotessera release lays the groundwork for.
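As a rough sanity check on those figures, here's a back-of-envelope sketch. It assumes decimal units (1TB = 10^12 bytes) and treats the "around 160MB" and "around 250TB" numbers as exact, so the results are only approximate.

```python
# Back-of-envelope storage estimate using the figures quoted in the text.
# Assumption: decimal units (1 MB = 10**6 bytes, 1 TB = 10**12 bytes).
TILE_BYTES = 160 * 10**6        # one tile of embeddings
YEARLY_BYTES = 250 * 10**12     # one year of global embeddings
YEARS = range(2017, 2025)       # 2017 through 2024 inclusive

tiles_per_year = YEARLY_BYTES // TILE_BYTES
total_bytes = YEARLY_BYTES * len(YEARS)

print(f"tiles per year: {tiles_per_year:,}")
print(f"years covered:  {len(YEARS)}")
print(f"total storage:  {total_bytes / 10**15:.1f} PB")
```

That works out to roughly 1.5 million tiles per year and about 2PB for the full 2017 to 2024 range, before the 2025 data is added.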

Earlier releases of geotessera used the Pooch library as a registry for all the embeddings. Pooch is a very convenient Python library that handles the details of fetching, checksumming and caching, but is really designed for grabbing a few large datasets, for example from Zenodo. The Tessera manifests repository for Pooch now has over a million files and takes minutes to initialise the data structures, which in turn makes using GeoTessera interactively slow.
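To make the "checksumming and caching" part concrete, here's a minimal standard-library sketch of what a registry has to do for each file. It is not Pooch's or GeoTessera's actual code; the helper names are hypothetical, and SHA-256 is an assumed hash algorithm.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large tiles never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def is_cached_and_valid(path: Path, expected_sha256: str) -> bool:
    """True if the file is already on disk and matches the registry checksum."""
    return path.is_file() and sha256_of(path) == expected_sha256.lower()
```

A client would only re-download a tile when `is_cached_and_valid` returns `False`. With over a million registry entries, even loading the table of expected checksums becomes a noticeable cost.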

One lesson Sadiq Jaffer and I learnt from building our giant literature database is how good the Parquet file format is. So in this 0.7 release, we switch from tracking all the tiles in a text format to a couple of GeoParquet databases. To help visualize this, I've created an avsm/geotessera HuggingFace dataset where you can browse the format, as well as an interactive coverage map.

Now when you initialise GeoTessera 0.7+, it will download the two parquet files instead of using the old git registry. The landmasks parquet rarely changes (it just provides the mapping between ocean/land tiles and the relevant UTM projection). The registry contains the tiles for all years, and weighs in at around 100MB compressed so far. In future releases, I'll probably split them out to be per-year, which will make them smaller again, but they're all in one place for convenience right now: the registry download is about the same size as a single embedding tile, so it's not hugely significant to optimise at this stage.

1.1 Towards Zarr format instead of Numpy?

Hot on the heels of the 0.7.0 release, Janne Mäyrä submitted a PR to add support for a file format called Zarr which is a really optimised way to store tensors.

Zarr is a community project to develop specifications and software for storage of large N-dimensional typed arrays, also commonly known as tensors. A particular focus of Zarr is to provide support for storage using distributed systems like cloud object stores, and to enable efficient I/O for parallel computing applications.

Zarr is motivated by the need for a simple, transparent, open, and community-driven format that supports high-throughput distributed I/O on different storage systems. Zarr data can be stored in any storage system that can be represented as a key-value store, including most commonly POSIX file systems and cloud object storage but also zip files as well as relational and document databases.

-- Zarr Homepage, 2025

This is exactly what we need given the giant storage requirements above, so I've fixed up and merged this feature and included it in a 0.7.1 point release. I've not had much chance to actually play with the format yet, but getting it into a release is the best place to start. I got one positive message from Andres Zuñiga-Gonzalez that he's been using Zarr already in his Local Climate Zone experiments.

2 More convenient APIs for sampling

The other feature that's gone into the new library is a set of higher-level APIs for using the embeddings. A very common use case when building downstream tasks is sampling embeddings for a set of labels, which are then used to train classifiers. It's quite cumbersome to select these out by hand, particularly with larger regions of interest.

There is now a new sample_embeddings_at_points library call that extracts embedding values at arbitrary lon/lat coordinates and groups points by tile for efficient batch processing. You can see this in action in the new geotessera-examples repository where we're starting to put sample code for downstream tasks that use geotessera.
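To illustrate why grouping by tile helps, here's a small standalone sketch of the idea. It does not call the real sample_embeddings_at_points function. The helper names are hypothetical, and it assumes tiles lie on a regular 0.1-degree lon/lat grid.

```python
import math
from collections import defaultdict

# Assumption: tiles lie on a regular 0.1-degree grid, i.e. 10 tiles per degree.
TILES_PER_DEGREE = 10


def tile_index(lon: float, lat: float) -> tuple[int, int]:
    """Integer grid cell containing the point (floor handles negatives)."""
    return (math.floor(lon * TILES_PER_DEGREE), math.floor(lat * TILES_PER_DEGREE))


def group_points_by_tile(points):
    """Map each tile index to the positions of the input points inside it."""
    groups = defaultdict(list)
    for i, (lon, lat) in enumerate(points):
        groups[tile_index(lon, lat)].append(i)
    return dict(groups)


points = [(0.12, 52.21), (0.18, 52.25), (-1.27, 51.75)]
groups = group_points_by_tile(points)
print(groups)
```

Each tile is then fetched and loaded once, however many labelled points fall inside it, rather than once per point.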

The first one here is the code to detect solar panels worldwide, as demoed by Sadiq Jaffer in his recent PROPL 25 talk. Browse through the source code and give it a spin. The results can be visualised using QGIS, and I'm working on a notebook interface for this later.

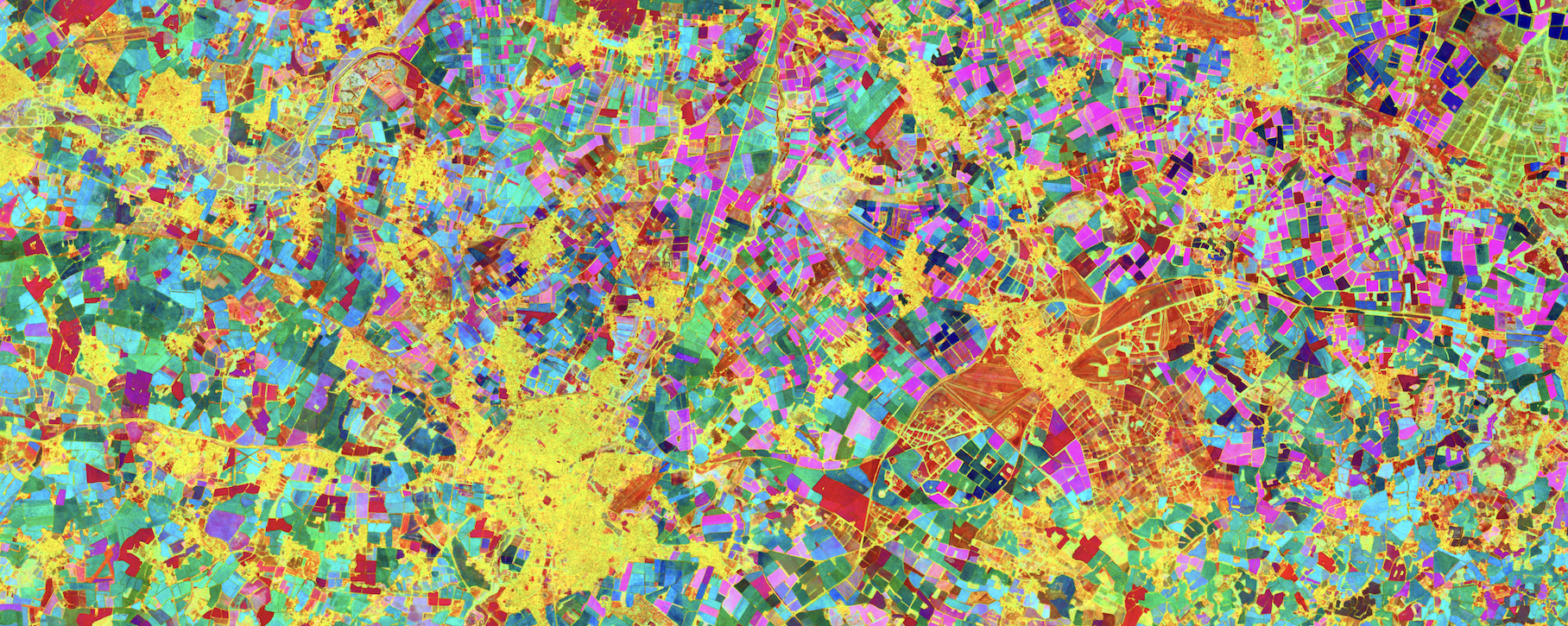

The second one is the parametric UMAP script to do false colour visualizations of any ROI. You can see a high-res "arty version" that has been contour traced using a pomap algorithm which renders as a high-res SVG as well.

3 Keep the embeddings requests coming!

That's it for now with GeoTessera updates. Enjoy the new releases, and if you have any requests for embeddings please get them in our queue.

Mark Elvers is currently syncing our embeddings to Scaleway via a Ceph cluster we set up for this purpose, and I'll be announcing even more federation options for TESSERA over the coming weeks. We're really grateful for the outpouring of offers of help with our computational needs from our users and cloudy friends! And we also know that TESSERA doesn't have a proper homepage yet; we'll work on this right after the immediate embeddings bottleneck is handled for our current users (contributions from interested web designers are very welcome here).

[1] There are two numpy files in there because we store the quantized embeddings to save space. To dequantize them, each of the bands in the main file is multiplied by the scales. Geotessera takes care of this for you.
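For the curious, here's a minimal numpy sketch of that dequantization step. It is not GeoTessera's actual code. It assumes the main file holds int8 values of shape (height, width, 128) and the scales file holds one float32 scale per pixel, and it uses random data in place of real tiles.

```python
import numpy as np


def dequantize(quantized: np.ndarray, scales: np.ndarray) -> np.ndarray:
    """Multiply every band of each pixel by that pixel's scale.

    Assumed shapes: quantized is (H, W, bands) int8, scales is (H, W) float32.
    """
    # The new trailing axis lets the (H, W) scales broadcast across all bands.
    return quantized.astype(np.float32) * scales[..., np.newaxis]


# Synthetic stand-ins for the two .npy files (real tiles are far larger).
rng = np.random.default_rng(0)
quantized = rng.integers(-127, 128, size=(4, 5, 128), dtype=np.int8)
scales = rng.uniform(0.01, 0.1, size=(4, 5)).astype(np.float32)

embedding = dequantize(quantized, scales)
print(embedding.shape, embedding.dtype)
```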
