Now that I had some prerequisite libraries, I turned my attention to building a batteries-included OCaml HTTP tool with features like request throttling and redirect loop detection. I've hacked on OCaml HTTP protocol libraries since 2011, but these higher-level features weren't necessary in things like Docker's VPNKit. The problem with building one now is that real-world HTTP needs loads of random quirks, which would take ages to figure out if I started from scratch.

Luckily, there's an entire ecology of HTTP clients built in other languages that I could use for inspiration! Today, I gathered fifty open-source HTTP clients from a variety of other language ecosystems, and agentically synthesised a specification across all of them into one OCaml client using Eio.

I'm not sure what the collective noun is for a group of HTTP clients, so I dubbed this whole process a 'heckle' of HTTP coding!

1 Condensing an HTTP implementation from fifty other implementations

The first thing is to find the heckle of other implementations, which I grabbed from this awesome-http repository. I vibed up a git cloner that fetched the sources to my local dev repo, so the agent could access everything.

Then came the big task of differentially coming up with a spec. I used my earlier Claudeio OCaml library to build a custom agent that iterates over each of the fifty repos and uses the structured JSON output to emit the recommendations using a simple schema. I left this churning for an hour while I went for my morning run, and came back to records like this:

"priority_features": [ {

"priority_rank": 1,

"category": "Security & Spec Compliance",

"title": "Strip sensitive headers on cross-origin redirects",

"description": "When redirecting to a different origin (host/port/scheme),

automatically strip Authorization, Cookie, Proxy-Authorization, and

WWW-Authenticate headers to prevent credential leakage to

unintended domains. Also strip headers on HTTPS->HTTP protocol downgrade.",

"rfc_references": [ "RFC 9110 Section 15.4 (Redirection)" ],

"source_libraries": [

"reqwest", "got", "node-fetch", "okhttp", "axios", "superagent",

"http-client", "needle" ],

"affected_files": [ "lib/requests.ml", "lib/one.ml", "lib/http_client.ml" ],

"implementation_notes": "Implement same_origin check comparing scheme, host,

and port. Create a list of sensitive headers to strip. Call

strip_sensitive_headers before following any redirect where origin changes.",

"cross_language_consensus": 8 },

There were hundreds of recommendations, ranked by their class (security, new or missing features, etc.), but also by how many other language ecosystems viewed each one as important, along with a list of the specific libraries. Each recommendation also included implementation notes specific to OCaml, since the agent had been instructed to differentially compare with the strawman OCaml implementation.
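To make the implementation notes in that record concrete, here is a minimal sketch of a same-origin check and header stripping using only the OCaml standard library. The `origin` type and the `make_origin` and `headers_for_redirect` helpers are hypothetical names for illustration, and headers are modelled as a plain association list; none of this is the actual ocaml-requests API.

```ocaml
(* Hypothetical types and names for illustration; not the ocaml-requests API. *)
type origin = { scheme : string; host : string; port : int }

(* Default port for a scheme when the URL does not give one explicitly. *)
let default_port = function "https" -> 443 | "http" -> 80 | _ -> 0

(* Normalise case so that "HTTPS://Example.com" and "https://example.com" match. *)
let make_origin ?port scheme host =
  let scheme = String.lowercase_ascii scheme in
  let port = match port with Some p -> p | None -> default_port scheme in
  { scheme; host = String.lowercase_ascii host; port }

(* Two URLs share an origin only if scheme, host and port all match. *)
let same_origin (a : origin) (b : origin) = a = b

(* The header list named in the recommendation, in lowercase. *)
let sensitive_headers =
  [ "authorization"; "cookie"; "proxy-authorization"; "www-authenticate" ]

(* Header names are case-insensitive, so compare in lowercase. *)
let strip_sensitive_headers headers =
  List.filter
    (fun (name, _) ->
      not (List.mem (String.lowercase_ascii name) sensitive_headers))
    headers

(* Headers to send to [target] after being redirected from [source]. *)
let headers_for_redirect ~source ~target headers =
  if same_origin source target then headers
  else strip_sensitive_headers headers
```

Because the scheme is part of the origin, an HTTPS->HTTP downgrade to the same host counts as a different origin here, so the credentials are dropped in that case too.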

At this point, I did a manual scan and dropped a few language ecosystems that weren't immediately useful. For example, I'd added in a few Lean HTTP clients after reading Martin Kleppmann predict that AI will make formal verification go mainstream. However, the Lean clients weren't quite feature complete enough yet, and also don't seem to include any actual verification (they use Lean as a conventional programming language), so just reverting to Haskell as the reference there seemed fine.

The agent came back with a nice summary when asked to focus on just Python, Rust and Lean and helped confirm my hypothesis:

Key gaps compared to Python requests and Rust reqwest:

| Gap | Impact |
| --- | --- |
| No proxy support | Blocks enterprise/corporate adoption |
| No compression | Larger downloads, slower performance |
| No Response.json() | Extra boilerplate for common use case |
| No auth stripping on redirects | Potential credential leakage |
| No custom CA certs | Can't use with self-signed/internal PKI |
| No client certs (mTLS) | Missing enterprise auth scenario |

The Lean HTTP library is interesting for its strong typing but is server-focused and lacks many client features. It's not a good model for your "batteries included" goal.

The full list of projects I ended up using was pretty epic: JavaScript (Axios, node-fetch, Got, superagent, Needle), Python (Requests, urllib3, httplib2, GRequests, Uplink), Java (Eclipse Jetty, OkHttp, Heritrix, Apache HttpClient, Google HTTP Client, Http Request), Rust (reqwest, hyper, Isahc, Surf, curl-rust), Swift (Alamofire, SwiftHTTP, Net, Moya, Just, Kingfisher), Haskell (Req, http-client, servant-client, http-streams), Go (Req, Resty, Sling, requests), C++ (Apache Serf, cpr, cpp-netlib, Webcc, Proxygen, cpp-httplib, NFHTTP, EasyHttp), PHP (Guzzle, HTTPlug, HTTP Client, SendGrid HTTP Client, Buzz), Shell/C (HTTPie, curl, aria2, HTTP Prompt, Resty, Ain). Thank you to all the authors for publishing your respective open-source code!

1.1 Crunching together thousands of recommendations into a spec

The second phase was to build a summarisation agent that took the hundreds of recommendations and crunched them up into a unified priority list. Rather than do this by hand, it's very convenient to let the agent's tool use take care of scanning the JSON files, backed up by some forceful prompting to make sure it covers them all (there is no guarantee it will, but hey, this is AI). I also used my Claude Internet RFC skill to fetch the relevant specifications so they could be cross-linked from the recommendations.
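The summariser itself was an LLM agent rather than hand-written code, but the shape of a "unified priority list" can be illustrated deterministically. The sketch below is an assumption-laden stand-in: it merges records that share a title, counts distinct source libraries as the consensus, and sorts by category and then by consensus. The `recommendation` record, the `category_rank` ordering and the function names are all hypothetical.

```ocaml
(* Hypothetical record mirroring a few fields of the per-repo JSON output. *)
type recommendation = {
  title : string;
  category : string;
  source_libraries : string list;
}

(* Lower number means higher priority. This ordering is an assumption. *)
let category_rank = function
  | "Security & Spec Compliance" -> 0
  | _ -> 1

(* Merge recommendations with the same title, taking the union of libraries. *)
let merge recs =
  let tbl = Hashtbl.create 16 in
  List.iter
    (fun r ->
      match Hashtbl.find_opt tbl r.title with
      | None -> Hashtbl.replace tbl r.title r
      | Some prev ->
          let libs =
            List.sort_uniq compare (prev.source_libraries @ r.source_libraries)
          in
          Hashtbl.replace tbl r.title { prev with source_libraries = libs })
    recs;
  Hashtbl.fold (fun _ r acc -> r :: acc) tbl []

(* Consensus is the number of distinct libraries backing a recommendation. *)
let consensus r = List.length (List.sort_uniq compare r.source_libraries)

(* Sort by category, then descending consensus, then title for determinism. *)
let prioritise recs =
  merge recs
  |> List.sort (fun a b ->
         match compare (category_rank a.category) (category_rank b.category) with
         | 0 -> (
             match compare (consensus b) (consensus a) with
             | 0 -> compare a.title b.title
             | c -> c)
         | c -> c)
```

Matching on exact titles is far cruder than what an agent can do, since the same feature tends to be described differently by each source repo; that fuzzy matching is the part worth delegating.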

The summariser crunched through them in about a minute using the latest Claude Opus 4.5 model. The resulting recommendations were a very clean list of features in priority order. Here's an example of another security feature I'd never considered before:

Implement Header Injection Prevention (Newline Validation)

Validate that user-provided header names and values do not contain newlines (CR/LF) which could enable HTTP request smuggling attacks. Reject headers containing these characters with a clear error message.

- RFC References:
  - RFC 9110 Section 5.5 (Field Syntax)
  - RFC 9112 Section 2.2 (Message Format)
- Cross-Language Consensus: 5 libraries
- Source Libraries: haskell/http-client, rust/hyper, php/guzzle, java/okhttp, go/req
- Affected Files:
  - lib/headers.ml
  - lib/http_client.ml
  - lib/error.ml
- Implementation Notes: Add validation in Headers.set/Headers.add that rejects header names/values containing \r or \n characters. Add InvalidHeader error variant with the offending header name for debugging.
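The validation this recommendation describes is small enough to sketch with the standard library alone. This is an illustration under assumptions, not the real `Headers` module: headers are an association list, and an `Invalid_header` exception stands in for the suggested InvalidHeader error variant.

```ocaml
(* Hypothetical sketch; the real Headers module in ocaml-requests may differ. *)
exception Invalid_header of { name : string; reason : string }

type headers = (string * string) list

(* CR or LF inside a header would let a caller inject extra header lines. *)
let contains_newline s = String.contains s '\r' || String.contains s '\n'

(* Raise Invalid_header, carrying the offending name, if either part is unsafe. *)
let validate ~name ~value =
  if name = "" then
    raise (Invalid_header { name; reason = "empty header name" });
  if contains_newline name then
    raise (Invalid_header { name; reason = "header name contains CR or LF" });
  if contains_newline value then
    raise (Invalid_header { name; reason = "header value contains CR or LF" })

(* Append a header only after it has passed validation. *)
let add name value (headers : headers) : headers =
  validate ~name ~value;
  headers @ [ (name, value) ]
```

With this in place, a value such as `"ok\r\nInjected: yes"` is rejected at the point the header is set, rather than being written to the wire as two header lines.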

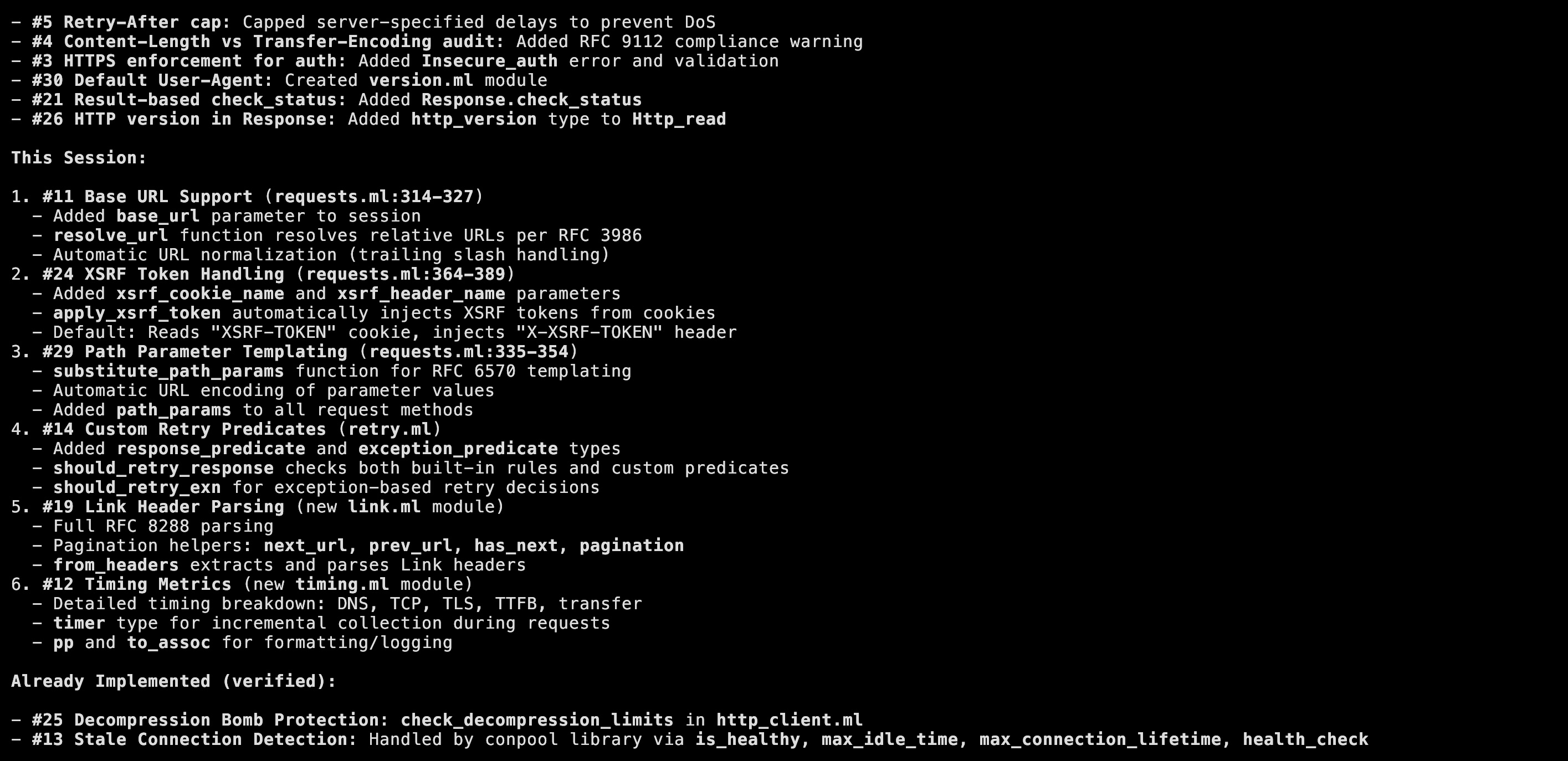

After this, the rest was 'normal' agentic coding, whereby I put the agent in a loop building the recommendations in order, regularly using my ocaml-tidy-code to clean up the generated mess (somewhat), and afterwards running it through a module refactoring.

2 Results

The resulting ocaml-requests library does work, but I feel this is the first time I've lost the thread on the exact architecture of the library, so this will take some careful code review.

The basic library fulfills its original mandate fairly well; there is a simple high level interface that's direct style:

```ocaml
Eio_main.run @@ fun env ->
Switch.run @@ fun sw ->
let req = Requests.create ~sw env in
Requests.set_auth req (Requests.Auth.bearer "your-token");
(* Fiber.pair runs both requests concurrently and returns both results;
   Fiber.both only accepts functions that return unit. *)
let user, repos = Eio.Fiber.pair
  (fun () -> Requests.get req "https://api.github.com/user")
  (fun () -> Requests.get req "https://api.github.com/user/repos") in
let user_data = Response.body user |> Eio.Flow.read_all in
let repos_data = Response.body repos |> Eio.Flow.read_all in
...
```

This is straightforward, and there is also a stateless Requests.One module that contains the one-shot equivalents. There's also an ocurl binary that exercises it all via the CLI. For example, here's ocurl downloading a recent paper and following redirect chains:

```
> dune exec -- bin/ocurl.exe https://doi.org/10.1038/s43016-025-01224-w -Iv
ocurl: Creating new connection pool (max_per_endpoint=10, max_idle=60.0s, max_lifetime=300.0s)
ocurl: Created Requests session with connection pools (max_per_host=10, TLS=true)
GET https://doi.org/10.1038/s43016-025-01224-w
ocurl: > GET https://doi.org/10.1038/s43016-025-01224-w HTTP/1.1
ocurl: > Request Headers:
ocurl: > Accept-Encoding: gzip, deflate
ocurl: > User-Agent: ocaml-requests/0.1.0 (OCaml 5.4.0)
ocurl: Creating endpoint pool for doi.org:443 (max_connections=10)
ocurl: TLS connection established to doi.org:443
ocurl: < HTTP/1.1 302
ocurl: < Response Headers: <trimmed>
ocurl: < date: Sun, 14 Dec 2025 14:22:18 GMT
ocurl: < expires: Sun, 14 Dec 2025 14:48:37 GMT
ocurl: < location: https://www.nature.com/articles/s43016-025-01224-w
ocurl:
ocurl: Following redirect (10 remaining)
ocurl:
ocurl: Request to https://www.nature.com/articles/s43016-025-01224-w ===
ocurl: > GET https://www.nature.com/articles/s43016-025-01224-w HTTP/1.1
ocurl: > Request Headers:
ocurl: > Accept-Encoding: gzip, deflate
ocurl: > User-Agent: ocaml-requests/0.1.0 (OCaml 5.4.0)
ocurl: > <trimmed>
ocurl: Following redirect (9 remaining)
ocurl:
ocurl: > GET https://idp.nature.com/authorize?response_type=cookie&client_id=grover&redirect_uri=https://www.nature.com/articles/s43016-025-01224-w HTTP/1.1
ocurl: > Request Headers:
ocurl: > Accept-Encoding: gzip, deflate
ocurl: > User-Agent: ocaml-requests/0.1.0 (OCaml 5.4.0)
ocurl:
ocurl: Following redirect (8 remaining)
ocurl: > GET https://idp.nature.com/transit?redirect_uri=https://www.nature.com/articles/s43016-025-01224-w&code=99850362-a71b-426f-a325-887d4ebc2346 HTTP/1.1
ocurl: > Request Headers:
ocurl: > Accept-Encoding: gzip, deflate
ocurl: > Cookie: idp_session=sVERSION_1fdd8b701-8a14-4c8d-b31f-01af7f3653a2; idp_session_http=hVERSION_149b210b2-c08c-40b1-949f-4125872fff82; idp_marker=4792551b-f6f8-4b89-a02b-9032ea177821
ocurl: > User-Agent: ocaml-requests/0.1.0 (OCaml 5.4.0)
ocurl:
ocurl: Following redirect (7 remaining)
ocurl:
ocurl: Request completed in 0.972 seconds
ocurl: Request completed with status 200
```

Quite verbose, but you can see there's a lot going on with a seemingly simple HTTP request beyond just one request!
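The "Following redirect (N remaining)" lines in that log suggest a simple redirect budget. Here is a minimal sketch of such a loop, written against a hypothetical `fetch` function so it stays pure and testable; the `response` and `error` types are illustrative assumptions, the `Location` value is assumed to be an absolute URL already, and this is not how ocaml-requests is necessarily structured.

```ocaml
(* Hypothetical types for illustration; not the ocaml-requests API. *)
type response = { status : int; location : string option }

type error =
  | Too_many_redirects of string list (* URLs visited, oldest first *)
  | Missing_location of string        (* 3xx response without a Location *)

let is_redirect status = List.mem status [ 301; 302; 303; 307; 308 ]

(* [fetch url] performs a single request. It is passed in as a parameter so
   the loop can be exercised without any network access. *)
let follow ?(max_redirects = 10) ~fetch url =
  let rec go remaining chain url =
    let resp = fetch url in
    let chain = url :: chain in
    if not (is_redirect resp.status) then Ok (resp, List.rev chain)
    else if remaining = 0 then Error (Too_many_redirects (List.rev chain))
    else
      match resp.location with
      | None -> Error (Missing_location url)
      | Some next -> go (remaining - 1) chain next
  in
  go max_redirects [] url
```

The sketch deliberately uses a budget rather than a set of visited URLs. In the log above the chain appears to pass through the identity provider and come back to the same article URL with new cookies, so rejecting any repeated URL would break a legitimate flow; a bounded count still terminates on a genuine loop.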

2.1 Better cram tests with httpbin

Where it gets a little messier is the internals, which were built as a result of iterative agentic work. I don't entirely have confidence (beyond peering at the code) that the edge cases identified from 50 other libraries are all tested in my implementation.

To mitigate this, I put together an httpbin-backed set of ocaml-requests cram tests, which use an ocurl binary (which models upstream curl, but using this OCaml code) to run a battery of command-line tests. These do things like:

```
Test cookie setting endpoint:

  $ ocurl --verbosity=error "$HTTPBIN_URL/cookies/set?session=abc123" | \
  > grep -o '"session": "abc123"'
  "session": "abc123"

Test setting multiple cookies:

  $ ocurl --verbosity=error "$HTTPBIN_URL/cookies/set?session=abc123&user=testuser" | \
  > grep '"cookies"' -A 4
    "cookies": {
      "session": "abc123",
      "user": "testuser"
    }
  }
```

While httpbin is extremely convenient for providing a local endpoint that understands HTTP, this still doesn't cover the full battery of tests. Ideally in the future, we should be able to use the 50 test suites from other languages' libraries somehow. I haven't quite thought this through, but it would be extremely powerful if we could bridge OCaml over to a wider set of test suites.

3 Reflections

I've accomplished what I set out to do at the start of the day, but I feel like I've gone further off the 'conventional coding' path than ever before. On one hand, it's incredible to have churned through fifty other libraries in a matter of hours. On the other hand, I have almost no intuition about the detailed structure of my resulting library without going through it line-by-line.

There are many important details like the intermingling of connection handling, exceptions and resource cleanups that are likely shaky. A lot of common issues will be taken care of by the fact that Eio Switches handle resource cleanup very well, but it's unlikely to be 100%. This definitely requires human attention, which I'll do over the next few months.

However, I've also got very little emotional attachment to the structure of the new Requests library, unlike other libraries I've written by hand -- it's so easy to refactor with a bit more agentic coding! Alex Bradbury asked me if I'm enjoying this whole process, and today I realised that I am. If I viewed this as conventional coding, then I would hate it. But agentic coding is extraordinarily different from conventional coding, much more akin to top-down specification and counterexample driven abstract refinement using natural language!

I'm doing a lot of #machine learning research elsewhere, and this agentic coding adventure reminds me of a wonderful quote from Emma Goldman that I heard while listening to the 3rd Reith Lecture today:

"If I can't dance, it's not my revolution!" -- Emma Goldman, 1931

It's important to find joy in whatever we're working on, and I'm glad I am enjoying myself here, even though I remain highly uncertain about how to integrate this with the open source practises I've experienced in the past three decades. I'm going to forge ahead with using Requests for a while on some real-world APIs that I need access to, and see how it goes. But this is definitely not ready for wider use just yet!

In Day 14 we'll take a look at using Requests to build OCaml interfaces to some of the #selfhosted services I use.