I took a break from yesterday's bot hacking to continue the HTML5 parsing in OCaml adventure. Vibespiling seems to have taken off, with Simon Willison reporting that there's a Swift version now as well. I got curious about how far I could push the vibespiling support: could we go beyond "just" parsing to also do complete HTML5 validation? So I went next to the Nu HTML Validator, a body of Java code that the W3C uses to apply some seriously complex rules for HTML5 validation.

I decided to split this work into two days, and started with a simple problem: HTML5 validation includes the need for automated language detection to validate that the lang attribute on HTML elements matches the actual content. This is important for accessibility, as screen readers use language hints to select the correct pronunciation.

The W3C validator uses the Cybozu langdetect algorithm, so I vibespiled this into pure OCaml code as ocaml-langdetect. However, I decided to push harder by compiling this to three different backends: native code OCaml, JavaScript via js_of_ocaml and then into modern WebAssembly using wasm_of_ocaml. As a fun twist, I got the regression tests running as interactive "vibesplained" online notebooks that can do language detection in the browser.

1 The n-gram frequency algorithm

Language detection via n-gram analysis is surprisingly simple. The algorithm first trains profiles for each language, by analysing a corpus of text and counting the frequency of sequences of 1-3 characters. This creates a statistical fingerprint of the language. Then, when given unknown text it extracts its n-grams and compares against all trained profiles using Bayesian probability. The language whose profile best matches the text wins.
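The counting step can be sketched in a few lines of OCaml. This is a minimal illustration and not the code from ocaml-langdetect: the names `count_ngrams` and `relative_frequency` are hypothetical, and it treats bytes as characters, whereas a real implementation would work on normalised Unicode code points.

```ocaml
(* Count every 1-, 2- and 3-byte substring of [text].
   Assumption: bytes stand in for characters to keep the sketch short. *)
let count_ngrams (text : string) : (string, int) Hashtbl.t =
  let counts = Hashtbl.create 64 in
  let len = String.length text in
  for i = 0 to len - 1 do
    for n = 1 to 3 do
      if i + n <= len then begin
        let gram = String.sub text i n in
        let seen = Option.value (Hashtbl.find_opt counts gram) ~default:0 in
        Hashtbl.replace counts gram (seen + 1)
      end
    done
  done;
  counts

(* Frequency of [gram] relative to all counted n-grams of the same length. *)
let relative_frequency (counts : (string, int) Hashtbl.t) (gram : string) : float =
  let n = String.length gram in
  let total =
    Hashtbl.fold
      (fun g c acc -> if String.length g = n then acc + c else acc)
      counts 0
  in
  match Hashtbl.find_opt counts gram with
  | Some c when total > 0 -> float_of_int c /. float_of_int total
  | _ -> 0.0
```

Running `count_ngrams` over a training corpus for each language and keeping the relative frequencies gives the per-language fingerprint described above.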

It turns out that n-gram frequencies are remarkably stable across different texts in the same language. To pick an obvious example, the word "the" appears frequently in English texts, giving bigrams "th" and "he" high frequencies. Similarly, "qu" is common in French, "sch" in German, and so on. The algorithm uses multiple trials with randomized sampling to avoid overfitting to any particular part of the text. Each trial adjusts the smoothing parameter slightly using a Gaussian distribution, all of which should be straightforward to implement in OCaml.
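To make the trial loop concrete, here is a rough sketch of a randomized Bayesian update. It is not the ocaml-langdetect implementation: the `profile` type, the `detect` function, and the constants (7 trials, 200 steps, a smoothing value centred on 0.5 with width 0.05, and the 10000 divisor) are all assumptions chosen for illustration.

```ocaml
(* Hypothetical toy profile: P(n-gram | language) as an association list. *)
type profile = { lang : string; freq : (string * float) list }

(* One standard normal sample via the Box-Muller transform. *)
let gaussian st =
  let u1 = max epsilon_float (Random.State.float st 1.0) in
  let u2 = Random.State.float st 1.0 in
  sqrt (-2.0 *. log u1) *. cos (2.0 *. Float.pi *. u2)

(* Run several trials; each one perturbs the smoothing term [alpha],
   samples n-grams at random, and multiplies the evidence into [probs].
   The per-trial posteriors are averaged at the end. *)
let detect ?(trials = 7) ?(steps = 200) st (profiles : profile array) grams =
  let n = Array.length profiles in
  let avg = Array.make n 0.0 in
  for _trial = 1 to trials do
    let alpha = 0.5 +. (0.05 *. gaussian st) in
    let probs = Array.make n (1.0 /. float_of_int n) in
    for _step = 1 to steps do
      let gram = grams.(Random.State.int st (Array.length grams)) in
      Array.iteri
        (fun i p ->
          let f = Option.value (List.assoc_opt gram p.freq) ~default:0.0 in
          probs.(i) <- probs.(i) *. ((alpha /. 10000.0) +. f))
        profiles;
      (* Renormalise every step so the products never underflow to all zeros. *)
      let total = Array.fold_left ( +. ) 0.0 probs in
      Array.iteri (fun i p -> probs.(i) <- p /. total) probs
    done;
    Array.iteri
      (fun i p -> avg.(i) <- avg.(i) +. (p /. float_of_int trials))
      probs
  done;
  let best = ref 0 in
  Array.iteri (fun i p -> if p > avg.(!best) then best := i) avg;
  (profiles.(!best).lang, avg.(!best))
```

Passing the random state explicitly, for example `Random.State.make [| 42 |]`, keeps runs reproducible, which helps when the same regression tests need to agree across backends.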

2 Implementing langdetect in OCaml

I grabbed the validator/validator/langdetect directory and vibespiled it from Java to OCaml, which is straightforward now with all the earlier Claude skills I've developed this month. The major hurdle here, compared to the other libraries, is where to stash the precomputed n-gram statistics for all the different languages. I wrote ocaml-crunch for Mirage back in 2011 which just generates OCaml modules, but it's still surprisingly difficult to store precomputed data more efficiently than that. Jeremy Yallop noted back in March that his modular macros project should support this sort of use case but it's not quite ready yet. Similarly, using OCaml Marshal requires stashing the marshalled data structure somewhere, which is hard to do portably.

Without a clear optimisation strategy, I prompted the agent to just precompute the profiles directly into OCaml code. The initial port worked immediately thanks to the clear structure of the Java code it was being vibespiled from. The static library was 115MB, but I didn't really notice as the regression tests all passed. The language profiles contain 172,000 unique n-grams across 47 languages, and the naive approach of generating one OCaml module per language with string literals duplicated n-grams across profiles.

The native code library provides a straightforward interface to query the ngrams via a cmdliner binary:

$ dune exec langdetect

Hello Thomas Gazagnaire, I'm finally learning French! Just kidding, I don't know anything about it.

en 1.0000

$ dune exec langdetect

Bonjour Thomas Gazagnaire, j'apprends enfin le français ! Je plaisante, je n'y connais rien.

fr 1.0000

$ dune exec langdetect

Hello Thomas Gazagnaire, I'm finally learning French! Just kidding, I don't know anything about it.

Bonjour Thomas Gazagnaire, j'apprends enfin le français ! Je plaisante, je n'y connais rien.

en 0.5714

fr 0.4286

3 The 115MB problem for JavaScript

But then, when I compiled it to JavaScript using the dune stanzas, the output was far too big and compilation times were very long. The fix was simply to pack everything into a shared data structure across all languages, looking something like this:

(* Shared string table for all 172K unique n-grams *)

let ngram_table = [| "the"; "th"; "he"; ... |]

(* Flat int array: (ngram_index, frequency) pairs for all languages *)

let profile_data = [| 0; 15234; 1; 8921; ... |]

(* Offsets: (lang_code, start_index, num_pairs) *)

let profile_offsets = [|

("en", 0, 4521);

("fr", 9042, 3892);

...

|]

This reduced the binary from 115MB to around 28MB, which is a reasonable reduction without having to resort to compression. Many n-grams appear in multiple languages (consider Latin alphabet characters) so deduplicating into a shared string table eliminated quite a bit of redundancy.
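A small sketch of how such a packed layout could be built and queried, assuming per-language lists of (n-gram, frequency) pairs as input. The `pack` and `frequency` functions are hypothetical names for illustration and are not taken from ocaml-langdetect.

```ocaml
(* Intern every n-gram once in a shared table, then flatten each language's
   (n-gram, frequency) list into index/frequency pairs in one int array. *)
let pack (profiles : (string * (string * int) list) list) =
  let index = Hashtbl.create 1024 in
  let table = ref [] in
  let intern gram =
    match Hashtbl.find_opt index gram with
    | Some i -> i
    | None ->
      let i = Hashtbl.length index in
      Hashtbl.add index gram i;
      table := gram :: !table;
      i
  in
  let data = ref [] and offsets = ref [] and pos = ref 0 in
  List.iter
    (fun (lang, grams) ->
      (* Record where this language starts in the flat array. *)
      offsets := (lang, !pos, List.length grams) :: !offsets;
      List.iter
        (fun (gram, freq) ->
          let i = intern gram in
          data := freq :: i :: !data;
          pos := !pos + 2)
        grams)
    profiles;
  ( Array.of_list (List.rev !table),
    Array.of_list (List.rev !data),
    Array.of_list (List.rev !offsets) )

(* Look up the stored frequency of [gram] for [lang] with a linear scan. *)
let frequency (table, data, offsets) lang gram =
  match List.find_opt (fun (l, _, _) -> l = lang) (Array.to_list offsets) with
  | None -> None
  | Some (_, start, pairs) ->
    let rec scan k =
      if k >= pairs then None
      else if table.(data.(start + (2 * k))) = gram then
        Some data.(start + (2 * k) + 1)
      else scan (k + 1)
    in
    scan 0
```

An n-gram shared by several languages costs one string in the table plus two integers per language, instead of one string literal per language.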

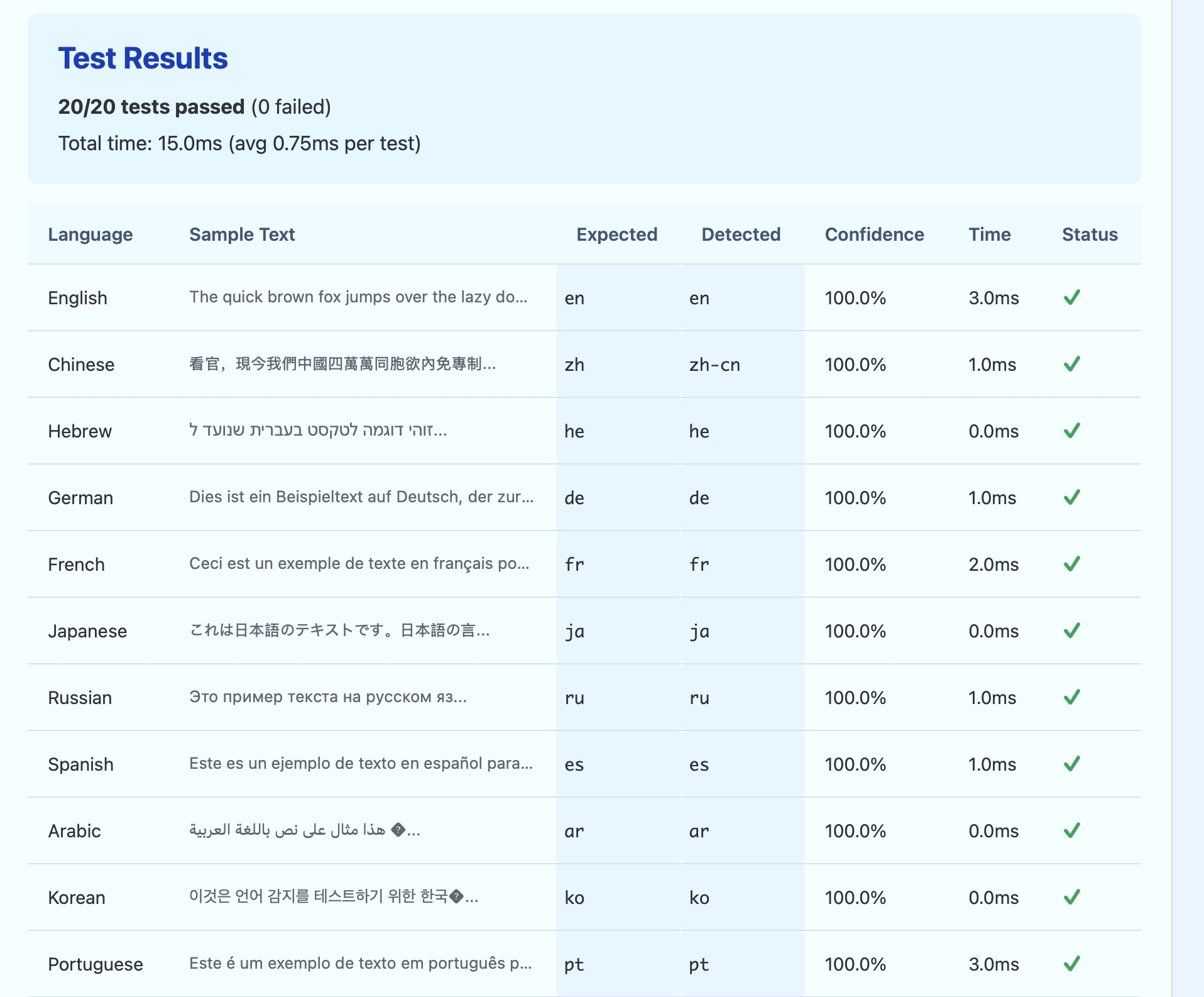

At this point, I prompted the agent to build me a full JavaScript-based regression test that took the native code tests and gave me a browser-based version instead.

One minor hiccup was that the regression tests failed due to JavaScript integers overflowing vs native integers, but the fix was simple and the regression tests in the browser made debugging them easy for the agentic loop. Without them, there would have been a lot of human cut-and-pasting which is quite tedious!
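For context, OCaml's `int` is 63 bits wide in native code on 64-bit platforms, 32 bits under js_of_ocaml and 31 bits under wasm_of_ocaml, so arithmetic that silently relies on the wider range can diverge between backends. I haven't described where the overflow occurred here, so the following is only a hypothetical illustration of one common remedy: masking intermediate results to a width that every backend supports. The `stable_hash` function is invented for this example.

```ocaml
(* Hypothetical example: a string hash whose result is the same on every
   backend because each step is masked to 30 bits. The low 30 bits of a
   wrapped product are identical whether the int is 31, 32 or 63 bits wide. *)
let stable_hash (s : string) : int =
  let h = ref 0 in
  String.iter
    (fun c -> h := ((!h * 31) + Char.code c) land 0x3FFFFFFF)
    s;
  !h

(* Sys.int_size reports the width the current backend actually uses. *)
let describe_backend () =
  Printf.sprintf "int is %d bits wide on this backend" Sys.int_size
```

Without the mask, a long input would keep growing past 32 bits in native code while wrapping earlier in the browser, which is the kind of mismatch a cross-backend regression test catches.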

4 The WASM array limit

With the JavaScript size under control, I turned to WASM compilation via wasm_of_ocaml. The first attempt failed with a cryptic error about exceeding operands and a parse error. It turns out WASM's array.new_fixed instruction has a limit of 10000 operands, and our profile data array had 662,000 elements.

The solution was to chunk the arrays and concatenate at runtime, which incurs runtime overhead but is a common enough solution. The generated code now includes 74 chunks for the profile data and 20 chunks for the n-gram string table, but clocks in at around 20MB and could probably be reduced further with some browser compression.
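The chunking idea can be sketched as follows. The `chunk` and `unchunk` helpers are hypothetical names: in the generated code each chunk would be its own array literal small enough to stay under the operand limit, and only the concatenation would run at startup.

```ocaml
(* Generator side: split a large array into pieces of at most [size] elements. *)
let chunk ~(size : int) (arr : 'a array) : 'a array list =
  let n = Array.length arr in
  List.init
    ((n + size - 1) / size)
    (fun i ->
      let start = i * size in
      Array.sub arr start (min size (n - start)))

(* Runtime side: stitch the chunks back into one array. *)
let unchunk (chunks : 'a array list) : 'a array = Array.concat chunks
```

`Array.concat` allocates the final array once and copies each chunk into it, so the startup cost is a single pass over the data.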

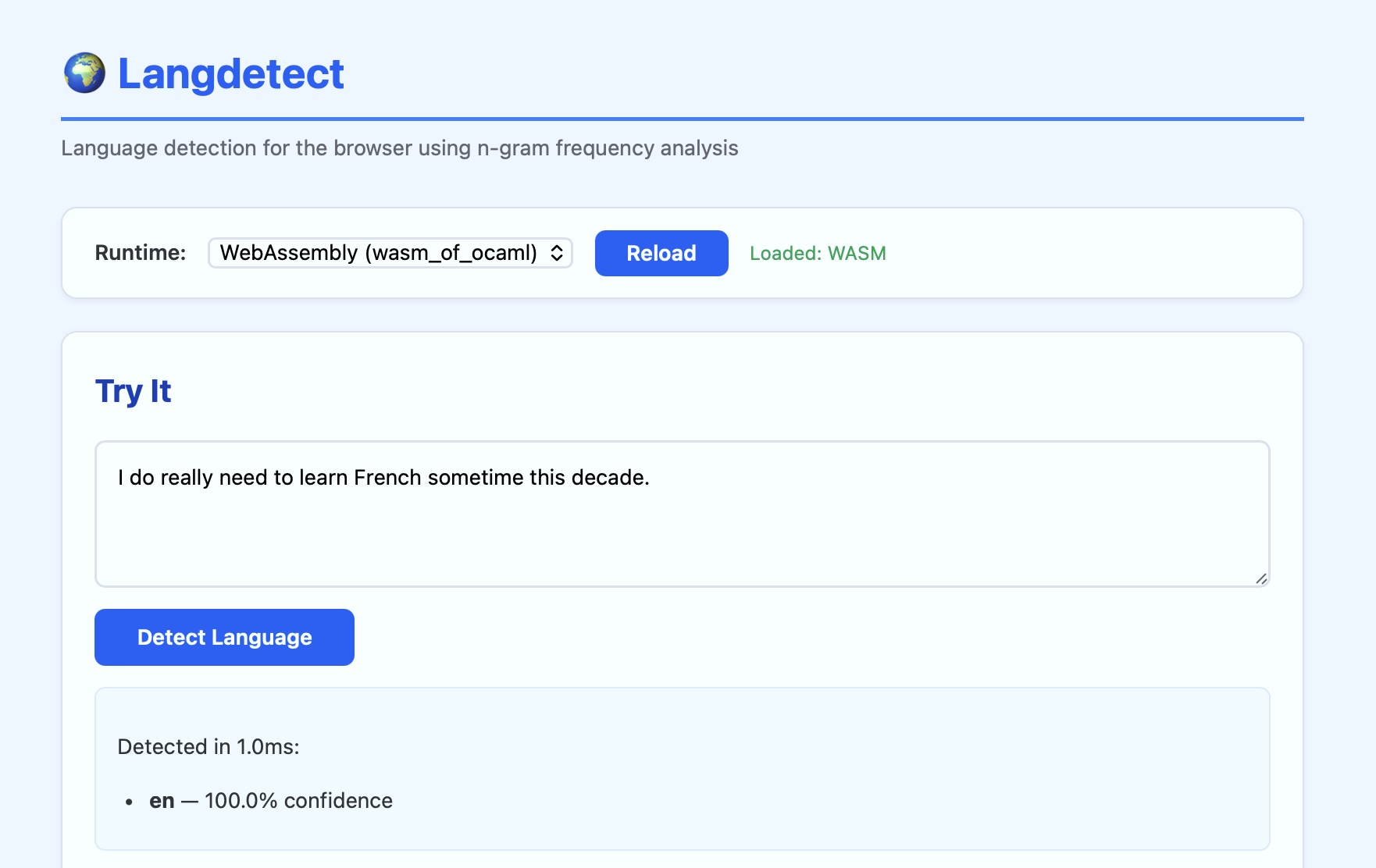

Now, the browser tests include the ability to switch between Wasm and JavaScript in the same test HTML. There was no real performance difference here, but the dataset is small. The most observable difference is that the Wasm needs to be served via a web server and not from the local filesystem, as otherwise browsers reject it. The web server must also serve .wasm files with the MIME type application/wasm, or the browser promptly rejects them.

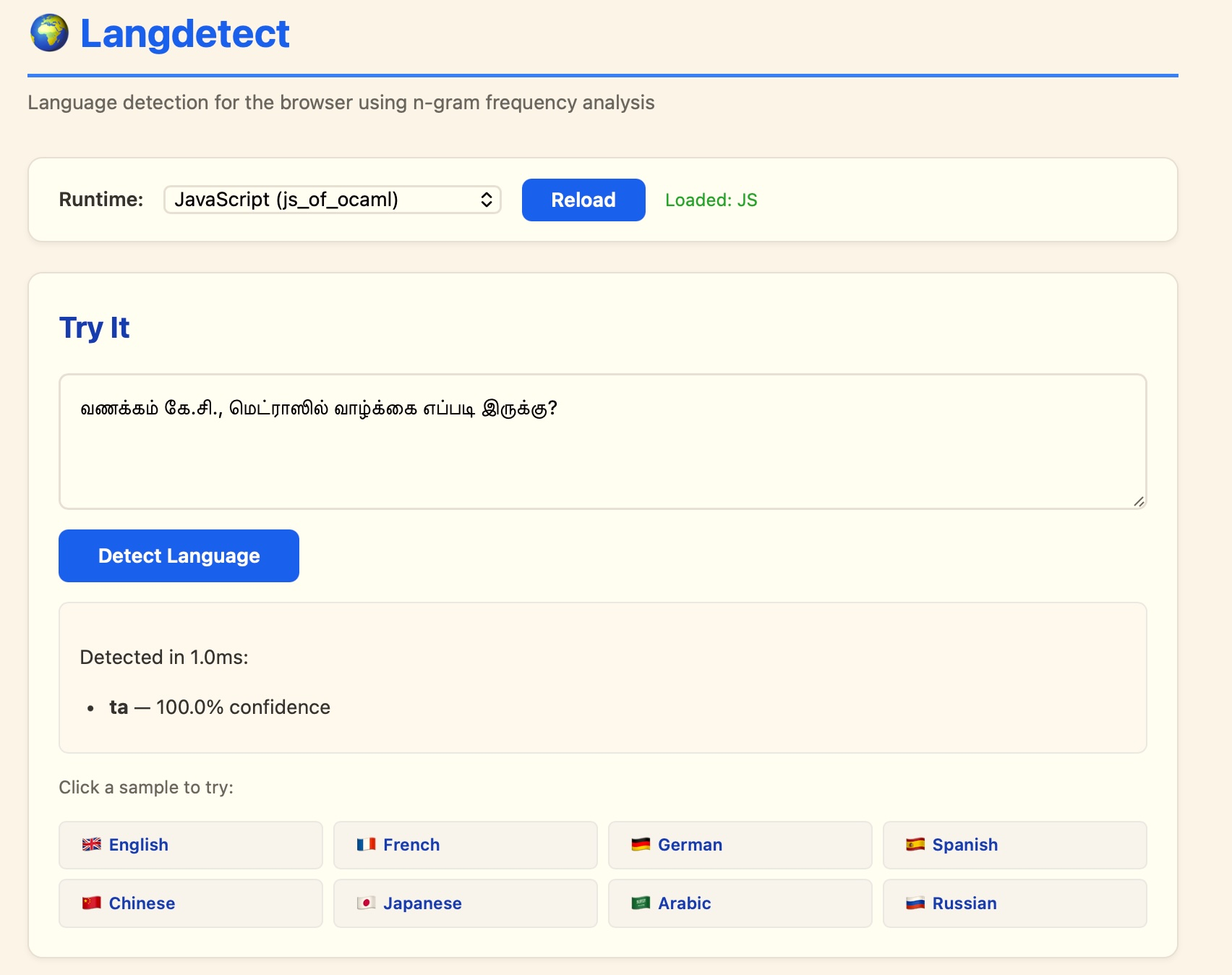

5 Browser demo

The OCaml langdetect-js package exposes a browser-ready API, using Brr to register its callbacks with JavaScript so that they can be called from HTML:

// Detect language

const lang = langdetect.detect("Hello, world!"); // "en"

// Get probability scores

const result = langdetect.detectWithProb("Bonjour le monde");

// { lang: "fr", prob: 0.9987 }

// Get all candidates

const all = langdetect.detectAll("这是中文");

// [{ lang: "zh-cn", prob: 0.85 }, { lang: "zh-tw", prob: 0.12 }, ...]

I also published this to npm so that the JavaScript is conveniently available via a CDN like jsDelivr. Publishing to npm required putting an npm branch in the repo with the npm package.json. I followed the convenient guide by Simon Willison to get a minimal package.json for the project.

6 Reflections

This was a good intermediate port to work on since it let me exercise WebAssembly a bit more, and understand the tradeoffs in OCaml compilation to these other backends. The process worked well: getting the agent to systematically port to native code first (from Java), then compile to JavaScript and debug platform-specific issues like the integer overflows, and only then move on to Wasm.

The agent was particularly helpful for the tedious work of generating the chunked array code and debugging the Unicode normalization edge cases.

For future hacking, there are several language optimisations coming up in OxCaml that should make this even more efficient: support for compile-time metaprogramming (so I could, for example, compute a perfect hash statically for all the n-grams), and also for smaller integer sizes so I don't need to use a full 31-bit range for the n-gram values. However, I couldn't quite get the wasm_of_ocaml constraints on the oxcaml branch working so I ran out of time today to get this going. Package management takes me out of the flow zone yet again!

Now that langdetect works, we'll go onto the full HTML5 validator in Day 21!