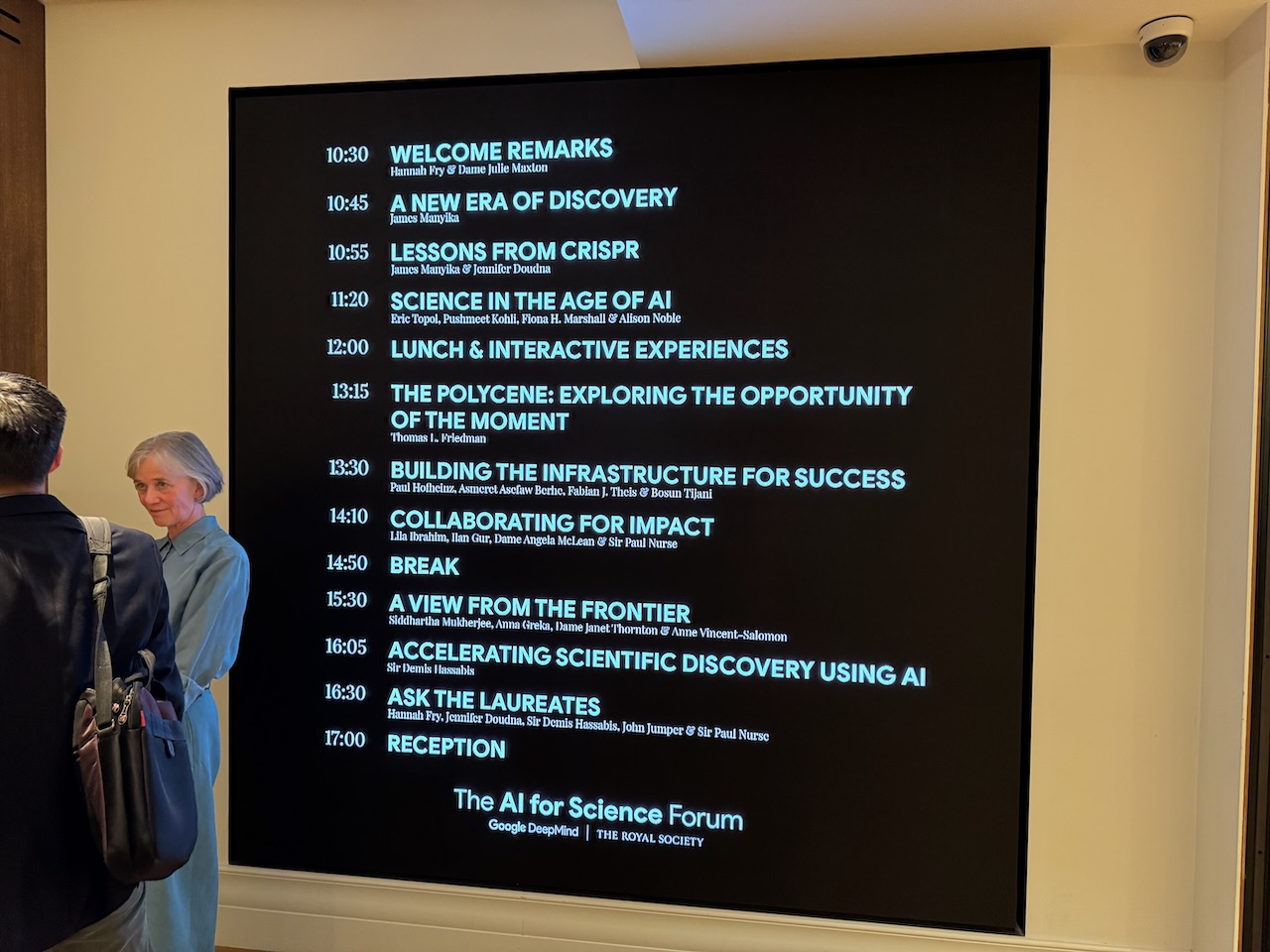

I was invited to join the Royal Society and DeepMind at a summit on how AI is revolutionising scientific discovery, and trotted along with Jon Crowcroft. This event is hot on the heels of the excellent RS report on Science in the Age of AI and, of course, the Nobel prize for Demis Hassabis, which was the first ever for my department! The event was held at BAFTA today, and what follows are my quick livenotes as there was just so much to absorb. The RS and DeepMind will have the full sessions online sometime soon, so I'll update this with those more polished outputs when they're out! Update: Proper notes now available from Google and the Royal Society.

The summit was a day-long exploration of how artificial intelligence is transforming science and society, and the overall theme (with four Nobel laureates taking part!) was that we are in a golden age of scientific discovery, especially in the biological sciences. The emcee for the event was Hannah Fry, who simply dazzled with her rapid summarisation of complex discussions interspersed with very dry humour about the proceedings!

The consistent message was that an ongoing synergy between science, technology, and society is essential if the transformative discoveries powered by AI are to benefit everyone in society. Missing that synergy would lead to unequal benefit or dangerous crossings of boundaries.

1 Lessons from CRISPR

The first session had James Manyika interviewing Jennifer Doudna, Nobel Laureate and co-inventor of CRISPR, who shared how gene editing has moved from science fiction to an essential tool for societal improvement. Some key points:

- AI's integration with CRISPR allows scientists to better predict and control genome editing outcomes, advancing efficiency and reducing hypothesis-testing time. Many experiments could potentially be skipped thanks to simulations predicting outcomes without the need for wetlab work.

- Jennifer discussed projects like methane-free cows, where altering cattle genomes could eliminate methane production entirely. These efforts require multidisciplinary collaboration between computer scientists, agricultural experts, and biologists.

- The success of CRISPR emphasises the need for public engagement, policy frameworks, and open databases for international collaboration. Doudna called for knowledge accessibility, including teaching high school educators about genome editing's impact, as a key part of public outreach about how this technology might affect society in the future.

- CRISPR moved really fast: it was published in 2012, and by 2014 scientists were already editing monkey embryos. This led to a realisation that it wasn't enough to be head down in the lab; a whole team working on public impact and policy (including the RS and national academies) was needed to bootstrap international meetings on human genome editing. The most recent was held in London in March of last year, which led to open, transparent conversations and the building of a worldwide database of work involving genome editing, especially work that impacts the human genome or environmental editing which could escape.

2 Science in the Age of AI

The next panel was Eric Topol chairing a discussion with Pushmeet Kohli, Fiona H. Marshall and Alison Noble. The theme was how a huge number of foundation models are coming out for LLLMs (large language life models) at a dizzying pace.

Pushmeet Kohli first explained how deciphering the genome accelerates biological discoveries by orders of magnitude. AI tools like AlphaFold (which just got the Nobel prize) exemplify breakthroughs that transform biology from a wetlab-driven discipline into a predictive science. On other fronts, DeepMind's GraphCast model enables near-term weather forecasting in minutes, where an equivalent forecast previously required days of supercomputer time (and now NeuralGCM is doing even better with mechanistic models combined with data). Pushmeet then noted how GNNs for materials science identified over 400k (or 200k? couldn't catch it) new stable inorganic crystals, with potential applications like high-temperature superconductors which were just sci-fi before.

Then Fiona H. Marshall from Novartis emphasized how AI identifies new drug targets using genomic and population-level data. In drug development, predictive safety is absolutely crucial. Pharmaceutical companies have decades’ worth of histological data, such as rodent testing, stored on hundreds of thousands of glass slides that are now being digitized. Once this data is processed, it can be used to make various predictions. Sharing this data across the pharmaceutical industry would benefit everyone. One of their partner companies is developing scanning algorithms; once these are operational they will be made open, and the entire industry will gain from the resulting dataset. Generative chemistry (like AlphaFold) now designs drugs faster while predictive toxicology ensures safer medicines. Interestingly, the data scientists are now in the prime author position as they are dictating the experimental procedures, whereas a few decades ago it was the wetlab scientists. This change in incentives drives change within a large org towards more data-driven methods.

Another valuable source of data is population-level information, such as Our Future Health (a UK-based NHS initiative). Proper management of nomenclature will ensure that this project generates a massive, usable dataset beyond what we have anywhere else. Eric noted that they rely heavily on the UK Biobank, which, with its roughly 500,000 participants, is one of the largest in the world, and the Our Future Health program is a huge leap forward with 5m participants. The NIH in the United States is hesitant to engage in public-private partnerships, and so the UK is leading the way in this domain (Anil notes: with privacy concerns about the data sharing).

Fiona also noted that AI is transforming clinical trials, not just the discovery process itself. Typically, it takes around 10 years for a candidate molecule to move to the clinical trial phase. One major bottleneck is patient enrollment. By leveraging vast demographic databases, which include information on global populations, their diseases, medications, and hospital affiliations, we can drastically improve recruitment efficiency. For example, a clinical trial protocol targeting "women aged 30-50 who are not taking drug X or estrogen modifiers" can utilize these databases to identify and enroll patients quickly. This approach can reduce recruitment time from three years to just six months, significantly accelerating the process of getting drugs to market.
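To make the recruitment example concrete, here is a minimal sketch of what such a protocol filter could look like. Everything in it is an assumption for illustration: the `Patient` record, the `registry` contents and the medication names are made up, and a real trial database would be far richer and access-controlled.

```python
from dataclasses import dataclass, field


@dataclass
class Patient:
    # Hypothetical record shape; real registries hold far more detail.
    patient_id: str
    sex: str
    age: int
    medications: set[str] = field(default_factory=set)


# Placeholder names standing in for "drug X or estrogen modifiers".
EXCLUDED_MEDICATIONS = {"drug_x", "estrogen_modifier"}


def is_eligible(p: Patient) -> bool:
    """Women aged 30-50 who are not taking any excluded medication."""
    return (
        p.sex == "F"
        and 30 <= p.age <= 50
        and not (p.medications & EXCLUDED_MEDICATIONS)
    )


def find_candidates(patients: list[Patient]) -> list[str]:
    """Return the IDs of patients who match the protocol."""
    return [p.patient_id for p in patients if is_eligible(p)]


# Made-up data purely to exercise the filter.
registry = [
    Patient("p1", "F", 42, {"metformin"}),
    Patient("p2", "F", 35, {"drug_x"}),
    Patient("p3", "M", 40),
    Patient("p4", "F", 55),
    Patient("p5", "F", 30),
]

candidates = find_candidates(registry)
print(candidates)
```

The speed-up described in the talk comes from running this kind of query over a large, well-curated population database rather than recruiting site by site.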

Alison Noble discussed how deep learning has revolutionized ultrasound imaging. AI now guides users on probe placement, reducing training requirements for medical professionals. However, we're now moving so fast that we need to be careful; even the notion of what a scientist is is changing. In the RS report on Science in the Age of AI, a number of scientists around the UK were consulted, and the concern about reproducibility and data access came up loud and clear. When we publish results, we need to make sure they are sound and that peer review is possible. Openness is a deliberate choice, however, and not always appropriate when sensitive data is involved (e.g. healthcare), but requiring rigor in evaluation is essential for good science. Scientists need to rethink in the age of AI how we present our work, and how we train new scientists in this environment. So we have some wonderful early examples like AlphaFold, but we need to understand the societal/incentive impacts on our new generation of scientists.

Eric also noted that one of the greatest challenges in genomics is understanding variants, and AlphaMissense has tackled this head-on. However, there is a significant data shortage. Without Helen Berman and the creation of the Protein Data Bank, AlphaFold wouldn’t have been possible. This raises the critical question: where do we source the necessary inputs? Pushmeet responded that intelligence doesn’t emerge in isolation; it relies on experiential datasets. Models can derive this experience from real-world input or interactions within simulations. High-fidelity simulations, in particular, provide models with valuable intuition about future outcomes. Experimental data is also critical, as it integrates with simulations to complete the picture. When dealing with unlabeled data, prediction labels generated by the model itself can be used for further training. However, it's essential to discard incorrect labels to ensure accuracy, which makes this technique effective for bridging data gaps. Crucially, a pure and uncontaminated test set is vital to ensure the reliability of the system. For example, AlphaMissense was trained in an unsupervised manner and successfully identified cancer mutations.
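The idea of training on a model's own predictions while discarding the unreliable ones is usually called self-training or pseudo-labelling. The sketch below is a toy version under loud assumptions: it uses a one-dimensional nearest-centroid classifier and made-up numbers, and it is not how AlphaMissense or any DeepMind system was actually trained. It only shows the three ingredients Pushmeet mentioned: model-generated labels, a confidence filter, and a held-out test set that never enters training.

```python
def fit_centroids(points, labels):
    """Toy 'model': the mean position of each class on a number line."""
    sums, counts = {}, {}
    for x, y in zip(points, labels):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict_with_confidence(centroids, x):
    """Nearest class, plus a confidence in [0.5, 1] based on the margin."""
    ranked = sorted(centroids, key=lambda y: abs(x - centroids[y]))
    best, second = ranked[0], ranked[1]
    d_best = abs(x - centroids[best])
    d_second = abs(x - centroids[second])
    if d_best + d_second == 0:
        return best, 0.5
    return best, 1.0 - d_best / (d_best + d_second)


def self_train(labelled_x, labelled_y, unlabelled_x, threshold=0.8, rounds=3):
    """Add only confidently pseudo-labelled points, then refit."""
    xs, ys = list(labelled_x), list(labelled_y)
    pool = list(unlabelled_x)
    for _ in range(rounds):
        model = fit_centroids(xs, ys)
        kept, remaining = [], []
        for x in pool:
            label, conf = predict_with_confidence(model, x)
            (kept if conf >= threshold else remaining).append((x, label))
        if not kept:
            break  # nothing confident enough to add
        xs += [x for x, _ in kept]
        ys += [label for _, label in kept]
        pool = [x for x, _ in remaining]
    return fit_centroids(xs, ys), pool


# Made-up data: a small labelled set, an unlabelled pool, and a test set
# that is kept strictly separate from both.
labelled_x, labelled_y = [0.0, 1.0, 9.0, 10.0], ["a", "a", "b", "b"]
unlabelled_x = [0.5, 2.0, 5.2, 8.0, 9.8]
test_set = [(1.5, "a"), (8.5, "b")]

model, leftover = self_train(labelled_x, labelled_y, unlabelled_x)
correct = sum(predict_with_confidence(model, x)[0] == y for x, y in test_set)
accuracy = correct / len(test_set)
print(leftover, accuracy)
```

The ambiguous point near the middle is never given a pseudo-label, which is the "discard incorrect labels" step in miniature; the confidence threshold is only a proxy for correctness, which is why the untouched test set matters.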

The discussion was quite wide ranging, but overall the two key challenges were:

- Reproducibility in science is a growing concern as AI accelerates discovery. Alison Noble emphasized the need for rigorous validation of results.

- Pushmeet noted the importance of testing the "prediction hull" of AI systems to understand their uncertainty and limitations, which was how AlphaFold built up user confidence (by not having false positives).

As AI transforms science, public-private partnerships and interdisciplinary collaboration are essential (like the Our Future Health program). Transparency and openness are deliberate choices in science, but regulation must keep up with the pace of innovation. Alison Noble also noted there is a culture change going on for these public/private partnerships within academia. While competition drives innovation, we need to make sure that the academic reward system keeps up; if there are 50 people on a paper then how is this attractive for young scientists to enter a field and make their own name?

3 A view from the frontier (of cell biology)

Siddhartha Mukherjee, a cancer physician and Pulitzer Prize winner (and personally speaking, author of one of my favourite medical books ever), began the discussion with a warning about potential breaches of data privacy and the dangers they pose. He emphasized the risk of AI being weaponized for misinformation, calling it a frontier challenge. These issues highlight the urgent need to develop mitigation strategies while continuing to advance the capabilities of AI in their respective fields. Siddhartha emphasized that data is the critical missing link in advancing AI. Issues of access, quality, integration, equity, and privacy must be addressed. The complexity of data ownership in AI raises ethical and commercial concerns, as data aggregators often benefit disproportionately.

Janet Thornton, from the European Molecular Biology Lab, shared her perspective on protein structures. She highlighted how AI has transformed the field, from modeling just 20 protein structures in the early days to now over 214 million. Structural biologists worldwide are using this data to validate their findings, explore ligand binding, and venture into uncharted territories of protein functionality.

Anna Greka delved into her work as a cell biologist studying membrane proteins and their organization within the body. She shared a case from Cyprus, where a mysterious kidney disease affected certain families for decades. AI-driven image recognition was used to identify a genetic mutation, leading to a therapeutic solution. The issue stemmed from a misshapen protein caused by a nodal molecule that traps faulty proteins, ultimately causing cell death. This discovery is now being applied to other conditions, such as Alzheimer’s and blindness, offering hope for broader therapeutic applications.

Janet also spoke about her time as the director of the European Bioinformatics Institute, which manages data repositories like the Worldwide Protein Data Bank. She described the cultural shift required to encourage data sharing, noting it took 20 years for crystallographers to agree to mandatory data deposition before publication. She stressed that medical data, particularly in clinical contexts, must undergo a similar transformation. Publicly funded data must eventually reach the commons, especially when such sharing has the potential to save lives. The UK’s NHS, with its secure data environments, provides a strong model for this approach. However, the health sector needs to move faster than the crystallography community did, requiring buy-in from both patients and medical professionals, as emphasized in Cathie Sudlow’s recent report on the UK’s health data landscape.

Anna Greka, a pathologist and head of a new institute focusing on women’s cancer at the Broad Institute, discussed her work on building AI tools to identify and understand disease mechanisms. She added that millions of human genomes have been sequenced and aggregated into databases usable by scientists worldwide. If pathology labs globally pooled their data, AI training models would benefit immensely. She suggested anonymizing the data while preserving metadata and federating results across countries to protect privacy and enhance global collaboration.
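One way to picture "federating results" is that each lab computes a summary locally and only the summary leaves the building. The sketch below is a deliberately simple illustration with made-up lab names and numbers; it is an assumption about what federation could mean here, not a description of any system discussed at the summit, and sharing aggregates is not by itself a full privacy guarantee.

```python
def local_summary(values):
    """Computed inside each lab: only a count and a total are shared."""
    return {"n": len(values), "total": sum(values)}


def federated_mean(summaries):
    """Combine per-lab summaries into a global mean without raw data."""
    n = sum(s["n"] for s in summaries)
    total = sum(s["total"] for s in summaries)
    return total / n


# Hypothetical per-lab measurements that never leave their site.
labs = {
    "lab_a": [2.0, 4.0],
    "lab_b": [6.0],
    "lab_c": [8.0, 10.0, 12.0],
}

summaries = [local_summary(values) for values in labs.values()]
global_mean = federated_mean(summaries)
print(global_mean)
```

The same pattern extends to model training, where labs would exchange model updates instead of counts and totals.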

Anne Vincent-Salomon, head of diagnostic and theranostic medicine at the Institut Curie, stressed the importance of multidisciplinary science and effective communication. She emphasized the need to educate the public, reducing fear and fostering understanding of scientific advancements.

Anna concluded by underscoring the importance of understanding the "unit of life" to progress in biology. She argued for the creation of a high-quality perturbation dataset for cells, akin to the Protein Data Bank’s role in AlphaFold. Skeptical of synthetic data, she emphasized the need for experimentally derived data as a foundation for future breakthroughs. She called this effort a moonshot for the next five years -- a grand challenge to deepen our understanding of life itself! (Anil: see also this great TED talk from Anna last year)

4 The Polycene: Exploring the Opportunity of the Moment

The (epic) next speaker was Thomas L. Friedman, who explored the interplay of three critical "scaling laws" in the modern era:

- Software: The rapid improvement of AI capabilities post-2017 (with transformers and GPUs).

- Carbon: Rising CO2e and methane emissions driving "global weirding" (extreme and destructive climate changes).

- Disorder: Societal and institutional fragmentation in addressing these crises.

Between 2017, with the introduction of transformer algorithms, and 2020, when advancements in microchips and GPUs took off, artificial intelligence has expanded dramatically. This period reflects a "scaling law" in action. Polymathic AI—AI capable of addressing a broad range of problems—now seems within reach. In just three years, AI-driven science has evolved from science fiction to reality and become accessible to many (albeit often with some limitations on free access). To address the challenges AI presents, we need higher-dimensional thinking for higher-dimensional problems.

At the same time, we're seeing a scaling law in carbon emissions. Levels of CO₂ and methane in the atmosphere, including methane from livestock, are causing destructive climate change. The seven warmest years on record occurred between 2015 and 2021, resulting in what’s called "global weirding"—where hot regions grow hotter, wet regions grow wetter, and the effects become increasingly destructive.

In parallel with these scaling points in carbon and silicon (AI hardware), we’re facing a scaling point in disorder—the erosion of civic structures. Governments worldwide have over-promised on the benefits of industrial democracies, such as healthcare, retirement plans, and infrastructure, yet are struggling to deliver. Societies are aging, educational attainment has stagnated, and productivity growth has slowed.

We're also witnessing growing national security challenges and the dissolution of the great power balance that defined the post-Cold War era. Electric transportation, healthcare, and employment systems are under strain, leading to increased migration. Today, there are 56 active conflicts globally—the highest number since World War II—and more displaced people than at any point in history.

We need a game-changer.

To solve these interconnected crises, we must adopt higher-dimensional approaches that blend solutions across disciplines and scales. The future stability of our planet—and the well-being of the next generation—depends on presenting holistic, interconnected solutions. Friedman calls this the "Polycene" era.

Never before has politics needed science more. Science must enable leaps forward in education and provide buffers against disruptive climate change. Similarly, politics must create the frameworks and systems to allow science to thrive and deliver meaningful solutions.

In That Used to Be Us, Friedman argued that "average is over"; the rapid acceleration of technology means the American Dream -- once achievable for many -- is no longer guaranteed.

However, technology can flatten access to resources. For instance, an Indian farmer can now access advanced insights about crop planting, watering schedules, and fertilizers directly on a smartphone. For the first time, those without access to "average" resources now have access to them through AI. Thanks to AI, "average" as a benchmark is over—and that gives Friedman optimism.

However, there’s a caveat: science and politics must work together. The alignment problem between these fields is real and will become more pressing as we approach AGI. As a species, we’ve become more godlike than ever before, creating systems and intelligence that resemble a larger, more powerful brain. How we use this power will determine our future.

5 Building the Infrastructure for Success

The speakers here were Paul Hofheinz, Asmeret Asefaw Berhe, Fabian J. Theis and Bosun Tijani.

Berhe began by noting that we are at an inflection point -- how do we avoid repeating mistakes from the past, ensuring we don’t leave segments of human society behind or widen the digital divide further? In previous programs such as exascale computing, they insisted that explicit sustainability goals be part of the program. While these goals may have seemed unrealistic initially and were criticised, in retrospect they have been shown to be achievable. An example of the next thing they're working on is the High-Performance Data Facility, recognizing that the Office of Science produces more scientific data than any other entity in the world (e.g. particle physics, genomic labs). We need to rethink how we handle such huge amounts of data, addressing concerns around privacy and integrity.

Minister Tijani then talked about how in Nigeria, there is a direct correlation between science and economic growth, yet in the Global South, we often fail to apply science effectively to societal issues. The answers we think we have often need to shift with context; for instance, policies from the UK don’t transplant cleanly to Nigeria, where the population is growing much faster.

Key points included:

- Dataset Inclusion: Like Indian farmers accessing AI-driven agricultural insights, we need datasets that represent Nigeria, Rwanda, and other African countries. Nigeria’s average age is 16.9, with 5 million new people entering the population each year. The workforce of the future will come from Africa.

- Infrastructure: Establishing certainty in data infrastructure is critical. Countries will need to ensure their data infrastructures allow for global participation rather than stagnation.

- Digitization: Much of Nigeria’s existing knowledge isn't encoded in a form digestible by AI. Smart digitization efforts are necessary to create inputs for widely used models.

To address these challenges, the Nigerian government did a few things. Publications from the past 30 years were cross-referenced against a name library to identify AI-focused Nigerian scientists. This effort brought 100 of them together to develop a national AI strategy for Nigeria. An AI Trust was created with a community of trustees to support this strategy. And then a Talent Attraction Program was launched, supported by Google and the government, alongside local private investment. This is one of the largest talent accelerators globally. Nigeria aims to become a net exporter of talent, much like India’s success in this area.

Fabian then talked about how many scientists are driven by natural curiosity, and it's vital to nurture that environment. The "holy trinity" of AI (data, compute and algorithms) needs to be developed together. Ten years ago, computer vision flourished due to ImageNet’s availability, and now we’re entering a new era with foundation models for cell biology. These models require algorithmic innovations to merge datasets, and techniques like multiscale modeling mixed with self-supervised learning, to succeed.

We're at a tipping point where we can build generalizable models that can be adapted for specific tasks around the world (a reference to the Nigerian use cases earlier).

Some key points discussed were:

- Equitable compute access: Academic/industrial partnerships are essential to make GPUs accessible for foundational research.

- Cell Atlas: Foundation models help academics plan experiments ("lab in the loop") and overlay disease data for deeper insights. The Deep Cell Project for example aims to not only create steady-state models but also add perturbation behaviors, enabling predictions about how cells respond to interventions. Unlike physics, where laws of motion guide us, cell biology lacks such universal equations. Deep Cell integrates image-based observations, tissue contact data, computational morphology, and clinical data to create a comprehensive model that maps healthy states and potential interventions.

- Benchmarks: Gene data is consistent internationally, but we need standardized benchmarks to equalize global talent and foster competition. Benchmarks are powerful tools for collaboration and innovation as they are accessible for anyone (see Kaggle for example).

- Bias: While we have powerful computational systems, the data they rely on is highly biased and incomplete. These flaws risk being perpetuated in future frontier models. To address this we need to invest in rebalancing datasets to prevent historical biases from carrying over. Cooperative investments are essential to develop homegrown talent in the global south.

Bosun Tijani also noted that the Global South isn't a charity case when it comes to AI. By the end of this century, Nigeria’s population will be half a billion, making it crucial in a highly connected world. There's a strong business case for not missing out. Nigeria is investing $2 billion to deploy dark fiber infrastructure nationwide. This connectivity will empower people to contribute meaningfully to the global digital economy. Governments in the Global South must expand their capacity to think strategically about AI and its potential. Unlike academic institutions, which often drive innovation, governments in these regions need to strengthen their ability to lead and can't rely on a large university pool like Europe does.

6 Collaborating for Impact

The speakers here were Lila Ibrahim, Ilan Gur, Dame Angela McLean and Sir Paul Nurse.

The first question was about how the speakers have experienced changes in science and how it has evolved.

Paul Nurse noted that we live in an increasingly complex scientific world. The expansion of science has led to greater complexity, which, in turn, has created more silos across disciplines. To address this, we need more interaction -- not necessarily immediate collaboration -- but increasing permeability between fields. There are also important social science aspects to consider, such as how to structure training and foster interdisciplinary work effectively.

Angela McLean: we’ve transitioned from an era of "big science" projects -- large, centrally organized efforts with clear, command-and-control structures -- towards distributed collectives. These collectives pool together the necessary data to tackle significant questions, such as those addressed by AlphaFold. Unlike a single centralized project, AlphaFold’s success came from a clear, well-defined question that encouraged competition and fostered winning strategies.

Today, we need the big research projects to define what success looks like and then innovate towards new ways for people to contribute collectively without a big central structure.

Disciplines can generally be divided into two categories. First, there are those with "countable questions"; for example, solving the Riemann hypothesis might win you a Nobel Prize! Then we have unstructured disciplines: fields like biology, where there isn’t a single list of questions to solve. As Paul Nurse put it, "biology is a bunch of anarchist scientists!". He continued that we need more unfocused research organizations that keep track of unstructured problems and help refine them into focused scientific questions. This kind of work is essential for achieving progress in disciplines that don't have clear or countable goals.

Ilan Gur then introduced ARIA, the Advanced Research and Invention Agency, which has a mission to build on the UK’s rich scientific ecosystem by pursuing breakthroughs that may not yet have immediate or obvious consequences. ARIA focuses on identifying the right people, their environments, their incentives, and how their work can ultimately benefit society. ARIA’s method begins by gathering program manager scientists to develop a thesis about where to focus efforts. This doesn’t involve just one research project but rather a constellation of efforts that can cross technology readiness levels and organizational boundaries to achieve a focused target. Examples of ARIA initiatives include scaling AI via compute inspired by nature, and another project built on the observation that formal mathematics should be better integrated into AI research to create more robust models. By guaranteeing bounds on inputs, we could use AI in critical applications with confidence in its outcomes.

Angela McLean then talked about how the UK government (under Labour) has outlined missions for addressing key societal challenges, such as growth, safer streets, opportunities for all, clean energy, and better health. These missions are a great starting point for research and problem-solving. However, the structure of Whitehall (government departments) tends to remain siloed. To succeed, we need to draw expertise from across departments to address these challenges.

Paul Nurse noted that science operates on a spectrum between discovery and application. Discovery is anarchic and unpredictable but applications are more directed and goal-oriented. We need end-to-end funding that supports the entire spectrum, from discovery to application, while embracing diversity in approaches. A one-size-fits-all method won’t work. At the Francis Crick Institute, departments were abolished, allowing disciplines to mix; turnover was encouraged after a limit of tenure to keep staffing dynamic (including at senior levels) and a high level of technical expertise was made available to everyone. Mixing people from different disciplines and using social scientists to understand the cultural structures within organizations is key to fostering innovation.

At the Crick Institute, the space encourages serendipitous conversations. This included inviting external guests and creating opportunities for unexpected interactions. Tools like Slack’s "lunch roulette" feature could similarly encourage serendipity and collaboration. (Anil note: Cambridge Colleges do a great job here; our departments are more siloed, though events like Rubik's 50 are a great example of how different disciplines come together)

Angela McLean also noted that we need to find ways to communicate the importance of breakthroughs like AlphaFold outside of the scientific community. For example, when AlphaFold was introduced, the Ministry of Defence (MoD) didn’t grasp why the science mattered -- they lacked the necessary context. Even among highly educated people in the UK, there's a gap in understanding just how much AI is transforming society. By better connecting experts and amplifying their insights, we can and must help bridge this gap.

Paul Nurse also noted that the public must be informed about science advances; e.g. the fiasco around GM crops happened because no one trying to introduce GM crops had bothered to inform the public, explaining what the issues are and getting feedback. The main answer from the public sample to "why not GM crops?" was that they didn't want to eat food with genes in it, and that's what bothered them. So when we're thinking about AI and its implications, let's go out and talk to the public and find out what worries them, and then think about how to communicate.

6.1 Accelerating Scientific Discovery Using AI

Demis Hassabis then reflected on AlphaFold and the future of AI-driven science. AlphaFold has been cited over 28,000 times already, and because it was open-sourced (including AlphaFold 3, with open weights for non-commercial use), its impact has been profound. Some standout applications include:

- Determining the structure of the nuclear pore complex, which regulates nutrients entering and exiting the cell nucleus.

- Developing a molecular syringe for delivering drugs to hard-to-reach parts of the body.

- Discovering plastic-eating enzymes to address environmental challenges.

AlphaFold is described as a "root node" problem within DeepMind -- once solved, it unlocks entirely new branches of scientific discovery. Its success in determining protein structures has validated this potential. What's next?

Materials design (the GNoME project) is the next frontier, and it shares characteristics with protein folding:

- A massive combinatorial search space.

- The need for models that integrate physics and chemistry to optimize solutions.

- Potential breakthroughs include developing new batteries or room-temperature superconductors. Already, 200,000 new crystals that were previously unknown to science have been published, marking significant progress toward a usable GNoME system.

Applying AI to mathematics also offers exciting possibilities, including solving major conjectures that have eluded mathematicians.

Inspired by mentorship from Paul Nurse, the aim is to simulate an entire virtual cell -- a "Mount Everest" of biology. AlphaFold 2 solves static protein structures, while AlphaFold 3 models interactions, taking us closer to simulating an entire cell (e.g., a yeast cell as the model organism). Within 5–10 years, this ambitious goal may well be achievable.

Quantum computing is accelerating and offers exciting intersections with AI: simulating quantum systems to generate synthetic data, or addressing challenges like error-correcting codes. However, classical Turing machines have proven more capable than initially thought:

- Projects like AlphaGo and AlphaFold show how new algorithms outperform brute force by precomputing models before tasks like making a move in Go or folding a protein.

- Classical systems, when used effectively, can model even quantum systems.

David Deutsch called this approach "crazy, but the right kind of crazy" when Demis talked to him about it. Demis thinks that every natural phenomenon has inherent structure, which machine learning can model to efficiently search for optimal solutions. So quantum may not be necessary for this, and classical computing used with machine learning may be sufficient to solve the hugely complex underlying problem.

Meanwhile they also launched Isomorphic Labs to rethink the drug discovery process from the ground up, leveraging AI for one of the most impactful use cases: curing diseases. AlphaFold is a powerful tool for fundamental research, and Isomorphic works on the adjacent use cases needed for practical drug discovery (helping design chemical compounds, test for toxicity, minimize side effects, etc). Isomorphic aims to cure diseases with AI and generate revenue to reinvest in fundamental research, striking a balance between societal impact and profitability.

Demis then commented that we stand on the brink of a golden era of scientific discovery, driven by interdisciplinary collaboration with domain experts and limitless possibilities for applying AI to new fields and improving AI itself (approaching exponential improvement). The scientific method is humanity's greatest invention and remains the foundation of modern civilization. In an era of transformative AI, it's useful to go beyond simple A/B testing and treat AI development as an exercise in the scientific method. We need to understand the emergent properties of AI systems and improve interpretability. Techniques from neuroscience (e.g. fMRI for studying brains) could inspire ways to study neural networks and make them explainable rather than just being black boxes. The approach is to build the artifact of interest first, then decompose it through targeted experiments to understand it once it has proven useful. Artificial systems like neural networks can be as complex as natural systems, requiring similar rigor to understand.

Science is increasingly expensive and complex, leading to slower progress in certain areas. However, interdisciplinary work will drive significant advances in the next decade. DeepMind, originally founded at the intersection of neuroscience and computer science, exemplifies how crossing disciplines accelerates innovation.

To support these efforts, Google.org just announced a $20 million fund for interdisciplinary research, further enabling breakthroughs at the intersection of fields. (Anil's note: let's hope that sustainability is on the list here!)

6.2 Ask the Nobel Laureates

The last panel had all four Laureates on stage to answer questions, moderated by Hannah Fry: Jennifer Doudna, Sir Demis Hassabis, John Jumper and Sir Paul Nurse.

The discussion opened by asking the panelists how they first felt when they made their prize-winning discoveries.

John Jumper: When you release groundbreaking work, it’s fascinating to see the immediate responses. I remember refreshing Twitter and seeing graduate students exclaiming, “How did they get my structure? It hasn’t even been published!” There was a special issue of Science related to the nuclear pore complex, and three out of four studies had heavily used AlphaFold without me even knowing it. It was amazing to see how our tools are empowering researchers.

Jennifer Doudna: In the fall of 2011, while working on CRISPR (a bacterial immune system), we realized it was an RNA-guided system that targets DNA for cleaving. It was one of those "aha" moments—bacteria had figured out how to do this, and now we could understand and manipulate DNA using the same principle. A year later, when we published our findings, we could feel the momentum building in the scientific community.

Paul Nurse: In 1985 (much older than the others!), I was working on yeast and my lab had identified the genes responsible for the cell cycle—how one cell reproduces into two. We wondered whether these findings could apply to humans, even though this was well before human genome mapping. Using the first human cDNA library ever made, we introduced human genes into defective yeast cells. If a human gene could replace the defective yeast gene and restore function, it meant the discovery was transferable. Remarkably, 1.5 billion years of evolutionary divergence didn’t stop this experiment from working.

7 Q&A

Q: What would you say to your 18-year-old self?

Demis Hassabis: I actually had this plan when I was 18! The amazing thing is that it worked out, but I’d tell myself to enjoy the journey a bit more.

John Jumper: My career has been more of a random walk, driven by doing good science in the moment and being open to new opportunities. My advice is to focus on doing good science now and let the interesting paths unfold naturally. It’s almost the opposite of Demis’s advice.

Jennifer Doudna: Follow your passion, never give up, and don’t listen to naysayers.

Paul Nurse: Coming from a non-academic background, I couldn’t believe that I could be paid to follow my curiosity. Even now, it still feels like a privilege.

Q: AI gives answers but struggles with mechanistic insights. How big a barrier is this to public trust, and when can we expect true mechanistic insights?

Demis Hassabis: AI is an engineering science. First, we need to build systems that are worthy of study. Once built, we can break them down and understand them mechanistically over time. Early systems weren’t worth this effort, but now we’re developing tools that are, and they’re improving themselves. Unlike physics, biology can’t always be explained by universal laws, but simulations that can be tested and probed are better suited. Neuroscience techniques, like those used to study real brains, can also help us understand artificial neural networks.

Q: Is attention still all we need?

John Jumper: AlphaFold isn’t just an off-the-shelf transformer. While attention is an important component, many other innovations were added to change the structure of the network significantly. Fundamental research continues to unlock insights into both new data and previously unexamined data. AlphaFold has revealed new knowledge about data that had been available for years.

Demis Hassabis: The transformer architecture has been incredible but isn’t sufficient on its own. We’ll need several more breakthroughs of that scale to reach full AGI.

Q: What are the current challenges in biology data?

Jennifer Doudna: Biology faces issues with both the quality and quantity of data for training AI models. We need to educate researchers on how to collect data both sparsely and smartly. Sparse but broad data is critical to creating robust platforms for training. This ultimately comes down to asking the right questions.

Q: What about people who are skeptical of these breakthroughs? Could society reject them?

Paul Nurse: Keeping the public on board is critical. This isn’t the first time new technology has faced resistance, and every time it happens, there’s concern. Special interest groups often hijack these conversations, so we need to find better ways to engage with the public and explain the science behind the breakthroughs.

Q: Africa will have the largest population of young adults by 2050. How can Africans be included in this global scientific revolution?

Jennifer Doudna: The Innovative Genomics Institute has an ongoing effort in Kenya to work with scientists and help them understand CRISPR. This initiative has fostered a network of researchers, and I’d like to see more of that happening.

Demis Hassabis: DeepMind has been actively working in Africa, with events like the Deep Learning Indaba conference serving as key convening points for African talent. There’s still a lot more to be done, but it’s a hugely important area of focus.

Q: How do we encourage the next generation of scientists?

Paul Nurse: In today’s world, journals are dominated by big data studies. While there’s value in this, we must ensure that creativity doesn’t get lost. There’s enormous potential in big data if approached with creativity, and we need to foster this mindset in our colleagues and students.

Demis Hassabis: Encouraging the next generation is crucial. One of my heroes is Richard Feynman. Every schoolchild should read Surely You’re Joking, Mr. Feynman! It shows how exhilarating it is to work at the frontier of knowledge. Science is incredible and fun, and we need to expose people to that joy.

This concludes my live notes! Beyond the notes here, the corridor conversations were incredibly useful for me: I have lots of connections to make next. Any errors in these notes are all mine, of course; I mainly took them for myself, but I hope it's useful for you that I've put them online as well.